2025-11-02

After months of agentic coding frenzy, Twitter is still full of discussions about MCP servers. I previously did some very light benchmarking to see whether Bash tools or MCP servers are better suited for a specific task. TL;DR: Both can be efficient if you’re careful.

Unfortunately, many of the most popular MCP servers are inefficient for a specific task. They need to cover all the bases, which means they provide a large number of tools with lengthy descriptions, consuming a significant amount of context.

It is also difficult to extend an existing MCP server. You can check out the source and modify it, but then you, together with your agent, have to understand the codebase first.

MCP servers are also not composable. The results returned by the MCP server have to be passed through the context of the agent to be persisted to disk or combined with other results.

I am a simple guy, so I like simple things. Agents can run Bash and write code well. Bash and code are composable. So what’s simpler than having your agent invoke CLI tools and write code? This is nothing new. We have all been doing this since the beginning. I would just like to convince you that in many situations, you do not need or even want an MCP server.

Let me explain this with a common MCP server use case: browser dev tools.

My Browser DevTools Use Cases

My use cases are working together with my agent on the web frontend, or abusing my agent to become a little hacker guy so I can scrape all the data in the world. For these two use cases, I only need a minimal set of tools:

- Start the browser, optionally with my default profile so I’m logged in

- Navigate to a URL, either in the active tab or in a new tab

- Execute JavaScript in active page context

- Take a screenshot of the viewport

And if my use case requires additional specialized tooling, I want my agent to quickly build it for me and combine it with other tools.

Problems with common browser DevTools for your agent

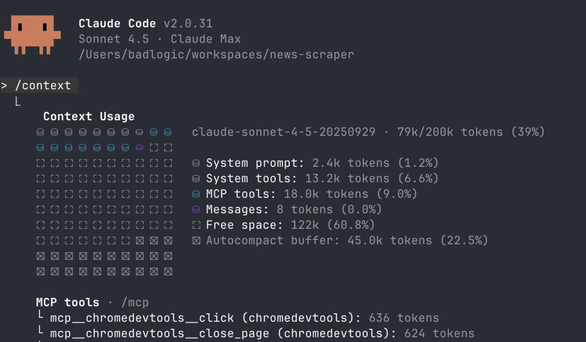

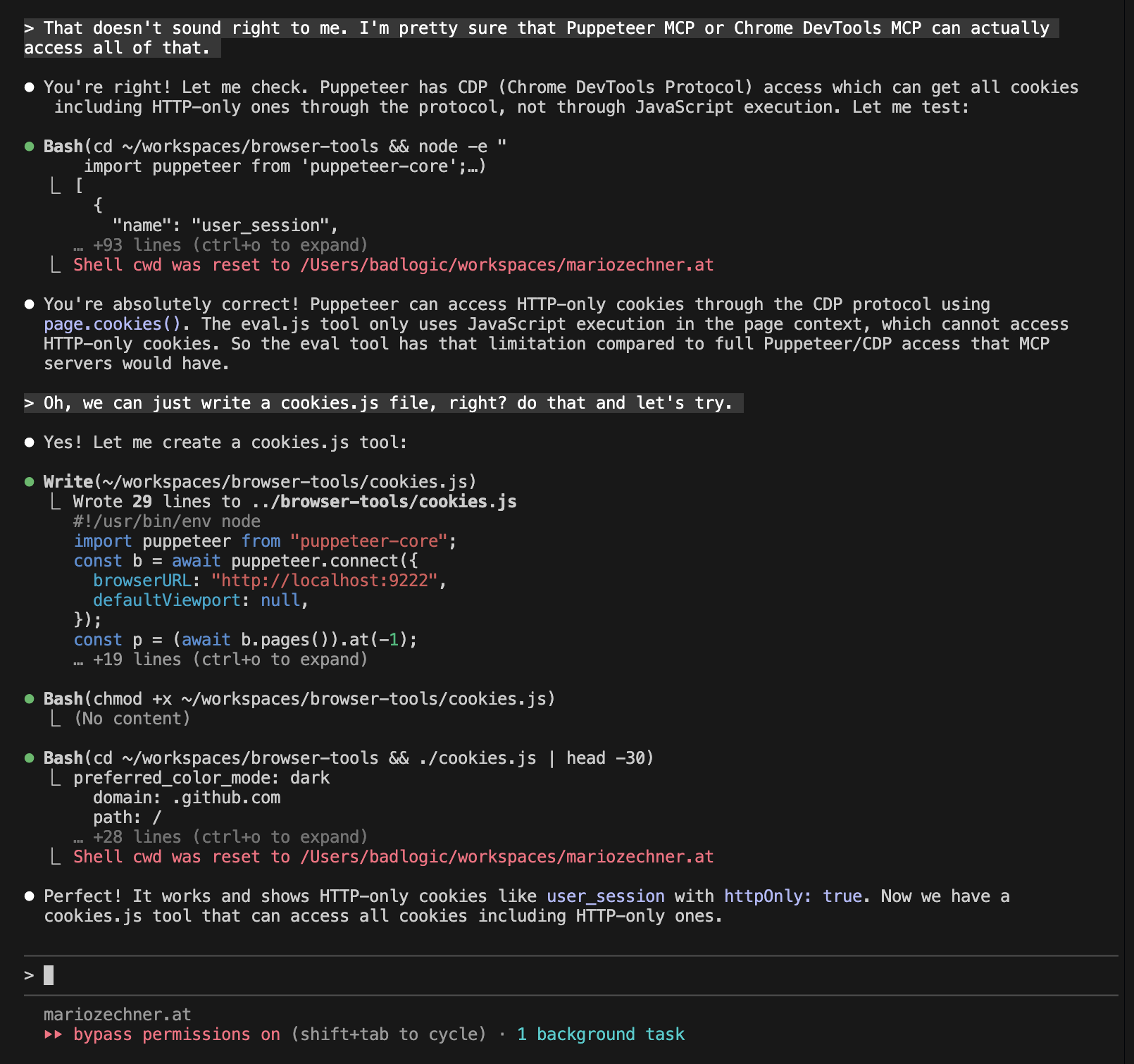

People will recommend Playwright MCP or Chrome DevTools MCP for the use cases I mentioned above. Both are fine, but they need to cover all the bases. Playwright MCP has 21 tools using 13.7k tokens (6.8% of Claude’s context). Chrome DevTools MCP has 26 tools using 18.0k tokens (9.0%). That many tools will confuse your agent, especially when combined with other MCP servers and built-in tools.

Using those tools also means you suffer from the composability problem: any output has to pass through your agent’s context. You can fix this by using sub-agents, but then you end up with all the problems that come with sub-agents.

Embracing Bash (and Code)

Here is my minimal set of tools, illustrated via its README.md:

# Browser Tools

Minimal CDP tools for collaborative site exploration.

## Start Chrome

\`\`\`bash

./start.js # Fresh profile

./start.js --profile # Copy your profile (cookies, logins)

\`\`\`

Start Chrome on `:9222` with remote debugging.

## Navigate

\`\`\`bash

./nav.js https://example.com

./nav.js https://example.com --new

\`\`\`

Navigate current tab or open new tab.

## Evaluate JavaScript

\`\`\`bash

./eval.js 'document.title'

./eval.js 'document.querySelectorAll("a").length'

\`\`\`

Execute JavaScript in active tab (async context).

## Screenshot

\`\`\`bash

./screenshot.js

\`\`\`

Screenshot current viewport, returns temp file path.

This is all I feed to my agent. It’s a handful of tools that cover all the bases for my use case. Each tool is a simple Node.js script that uses Puppeteer Core. By reading that README, the agent knows which tools are available, when to use them, and how to use them via Bash.

When I start a session where the agent needs to interact with the browser, I simply tell it to read that file in its entirety and that’s all it needs to be effective. Let’s walk through their implementation to see how little code it really is.

Start Tool

The agent must be able to start a new browser session. For scraping tasks, I often want to use my real Chrome profile so I’m logged in everywhere. This script either rsyncs my Chrome profile to a temp folder (Chrome doesn’t allow debugging on the default profile), or starts fresh:

#!/usr/bin/env node

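import { execSync, spawn } from "node:child_process";

import puppeteer from "puppeteer-core";

const useProfile = process.argv[2] === "--profile";

if (process.argv[2] && process.argv[2] !== "--profile") {

console.log("Usage: start.js [--profile]");

process.exit(1);

}

// Assumed step: stop any running Chrome so the new instance can claim port 9222.

// killall fails if Chrome is not running, which is fine to ignore.

try {

execSync("killall 'Google Chrome'", { stdio: "ignore" });

} catch {}

// Give the old processes a moment to exit.

await new Promise((r) => setTimeout(r, 1000));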

execSync("mkdir -p ~/.cache/scraping", { stdio: "ignore" });

if (useProfile) {

execSync(

'rsync -a --delete "/Users/badlogic/Library/Application Support/Google/Chrome/" ~/.cache/scraping/',

{ stdio: "pipe" },

);

}

spawn(

"/Applications/Google Chrome.app/Contents/MacOS/Google Chrome",

["--remote-debugging-port=9222", `--user-data-dir=${process.env["HOME"]}/.cache/scraping`],

{ detached: true, stdio: "ignore" },

).unref();

let connected = false;

for (let i = 0; i < 30; i++) {

try {

const browser = await puppeteer.connect({

browserURL: "http://localhost:9222",

defaultViewport: null,

});

await browser.disconnect();

connected = true;

break;

} catch {

await new Promise((r) => setTimeout(r, 500));

}

}

if (!connected) {

console.error("✗ Failed to connect to Chrome");

process.exit(1);

}

console.log(`✓ Chrome started on :9222${useProfile ? " with your profile" : ""}`);

All the agent needs to know is to use Bash to run the start.js script, either with --profile or without.
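The script above hard-codes the author’s macOS paths. As a minimal sketch of how the profile source could be derived per platform instead: `chromeProfileDir` and `rsyncCommand` are hypothetical helpers, not part of the original tools, and the Linux path assumes a stock Google Chrome install.

```javascript
// Hypothetical helper: locate the default Chrome user-data directory.
// Only macOS and Linux are covered here; anything else throws.
function chromeProfileDir(platform = process.platform, home = process.env.HOME) {
  if (!home) throw new Error("HOME is not set");
  switch (platform) {
    case "darwin":
      return `${home}/Library/Application Support/Google/Chrome/`;
    case "linux":
      return `${home}/.config/google-chrome/`;
    default:
      throw new Error(`Unsupported platform: ${platform}`);
  }
}

// Hypothetical helper: build the rsync command that start.js runs,
// using the derived directory instead of a hard-coded one.
function rsyncCommand(platform = process.platform, home = process.env.HOME) {
  const src = chromeProfileDir(platform, home);
  return `rsync -a --delete "${src}" "${home}/.cache/scraping/"`;
}

console.log(rsyncCommand("darwin", "/Users/me"));
```

The trailing slash on the source directory matters: it tells rsync to copy the directory’s contents rather than the directory itself, which matches the command in the original script.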

Navigate Tool

Once the browser is running, the agent needs to navigate to a URL, either in a new tab or in the active tab. This is exactly what the navigate tool provides:

#!/usr/bin/env node

import puppeteer from "puppeteer-core";

const url = process.argv[2];

const newTab = process.argv[3] === "--new";

if (!url) {

console.log("Usage: nav.js <url> [--new]");

console.log("\nExamples:");

console.log(" nav.js https://example.com # Navigate current tab");

console.log(" nav.js https://example.com --new # Open in new tab");

process.exit(1);

}

const b = await puppeteer.connect({

browserURL: "http://localhost:9222",

defaultViewport: null,

});

if (newTab) {

const p = await b.newPage();

await p.goto(url, { waitUntil: "domcontentloaded" });

console.log("✓ Opened:", url);

} else {

const p = (await b.pages()).at(-1);

await p.goto(url, { waitUntil: "domcontentloaded" });

console.log("✓ Navigated to:", url);

}

await b.disconnect();

Evaluate JavaScript Tool

The agent needs to execute JavaScript to read and modify the active tab’s DOM. The JavaScript it writes runs in the context of the page, so it doesn’t need to mess with Puppeteer itself. All it needs to know is how to write code using the DOM API, and it definitely knows how to do that:

#!/usr/bin/env node

import puppeteer from "puppeteer-core";

const code = process.argv.slice(2).join(" ");

if (!code) {

console.log("Usage: eval.js 'code'");

console.log("\nExamples:");

console.log(' eval.js "document.title"');

console.log(' eval.js "document.querySelectorAll(\'a\').length"');

process.exit(1);

}

const b = await puppeteer.connect({

browserURL: "http://localhost:9222",

defaultViewport: null,

});

const p = (await b.pages()).at(-1);

if (!p) {

console.error("✗ No active tab found");

process.exit(1);

}

const result = await p.evaluate((c) => {

const AsyncFunction = (async () => {}).constructor;

return new AsyncFunction(`return (${c})`)();

}, code);

if (Array.isArray(result)) {

for (let i = 0; i < result.length; i++) {

if (i > 0) console.log("");

for (const [key, value] of Object.entries(result[i])) {

console.log(`${key}: ${value}`);

}

}

} else if (typeof result === "object" && result !== null) {

for (const [key, value] of Object.entries(result)) {

console.log(`${key}: ${value}`);

}

} else {

console.log(result);

}

await b.disconnect();
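The printing logic above assumes every array entry is an object, so an array of plain numbers or strings does not print usefully. A minimal sketch of a formatter that also handles primitives and returns the text instead of printing it; `formatRecord` and `formatResult` are hypothetical names, not part of the original tools.

```javascript
// Hypothetical shared formatter: produces the same "key: value" text
// the scripts print, but as a string.
function formatRecord(value) {
  // Objects become one "key: value" line per property.
  if (typeof value === "object" && value !== null) {
    return Object.entries(value)
      .map(([key, v]) => `${key}: ${v}`)
      .join("\n");
  }
  // Primitives (strings, numbers, null, undefined) are printed as-is.
  return String(value);
}

function formatResult(result) {
  // Top-level arrays become records separated by a blank line.
  if (Array.isArray(result)) return result.map(formatRecord).join("\n\n");
  return formatRecord(result);
}

console.log(formatResult([{ tag: "a", text: "Home" }, { tag: "a", text: "About" }]));
console.log(formatResult([1, 2, 3]));
```

Returning a string keeps the helper easy to test and lets each script decide whether to print it or write it to a file.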

Screenshot Tool

Sometimes agents want a visual impression of a page, so naturally we want a screenshot tool:

#!/usr/bin/env node

import { tmpdir } from "node:os";

import { join } from "node:path";

import puppeteer from "puppeteer-core";

const b = await puppeteer.connect({

browserURL: "http://localhost:9222",

defaultViewport: null,

});

const p = (await b.pages()).at(-1);

if (!p) {

console.error("✗ No active tab found");

process.exit(1);

}

const timestamp = new Date().toISOString().replace(/[:.]/g, "-");

const filename = `screenshot-${timestamp}.png`;

const filepath = join(tmpdir(), filename);

await p.screenshot({ path: filepath });

console.log(filepath);

await b.disconnect();

This will take a screenshot of the active tab’s current viewport, write it to a .png file in a temporary directory, and output the file path to the agent, which can then turn around, read the file, and use its vision capabilities to “see” the image.
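The file name deserves a quick look: `toISOString()` contains colons and a dot, which the script replaces with dashes so the name is safe on common filesystems. A small worked example with a fixed date; `screenshotName` is a hypothetical wrapper around the two lines from the script.

```javascript
// Hypothetical wrapper around the naming logic in screenshot.js.
function screenshotName(date = new Date()) {
  // toISOString() yields e.g. 2025-11-02T09:30:05.123Z;
  // every ":" and "." is replaced with "-".
  const timestamp = date.toISOString().replace(/[:.]/g, "-");
  return `screenshot-${timestamp}.png`;
}

// Month index 10 is November.
console.log(screenshotName(new Date(Date.UTC(2025, 10, 2, 9, 30, 5, 123))));
// prints "screenshot-2025-11-02T09-30-05-123Z.png"
```

Because the timestamp includes milliseconds, two screenshots taken in quick succession still get distinct names.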

Benefits

So how does it compare to the MCP servers I mentioned above? Well, for starters, I can pull in the README whenever I need it and don’t have to pay for it in every session. This is similar to Anthropic’s recently introduced skills capabilities, except that it’s even more ad hoc and works with any coding agent. I just have to instruct my agent to read the README file.

Side note: Many people, including me, have used this kind of setup before Anthropic released their skill system. You can see something similar in my “Prompts Are Code” blog post or my little sitegeist.ai. Armin has also touched on the power of Bash and code compared to MCP before. Anthropic’s skills add progressive disclosure (love it) and they make them available to non-technical audiences in almost all of their products (love it too).

Speaking of the README: instead of pulling in 13,000 to 18,000 tokens like the MCP servers mentioned above, this README has 225 tokens. This efficiency comes from the fact that models know how to write code and use Bash. I am conserving context space by relying heavily on their existing knowledge.
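To put those numbers side by side, here is a small back-of-the-envelope calculation. The token counts are the ones quoted in this post; the 200k-token context window is an assumption, chosen because it is roughly what the quoted percentages (6.8% and 9.0%) imply.

```javascript
// Token counts quoted in this post.
const tools = {
  "README.md": 225,
  "Playwright MCP": 13_700,
  "Chrome DevTools MCP": 18_000,
};

// Assumption: a 200k-token context window, inferred from the quoted percentages.
const CONTEXT_WINDOW = 200_000;

// Share of the context window used, in percent.
function contextShare(tokens, windowSize = CONTEXT_WINDOW) {
  return (tokens / windowSize) * 100;
}

for (const [name, tokens] of Object.entries(tools)) {
  const ratio = tokens / tools["README.md"];
  console.log(
    `${name}: ${contextShare(tokens).toFixed(2)}% of context, ${ratio.toFixed(1)}x the README`,
  );
}
```

Under that assumption the README takes up a bit over a tenth of a percent of the context, while the two MCP servers cost roughly 60 to 80 times as much before the agent has done any work.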

These simple tools are also composable. Instead of reading the output of an invocation into its context, the agent can decide to save it to a file for later processing, either by itself or by code. The agent can also easily chain multiple invocations into a single Bash command.

If I find that a tool’s output is not token-efficient, I can change the output format. This is something that is difficult or impossible to do depending on the MCP server you use.
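As a sketch of the “process it later by code” path: assuming the output of eval.js was redirected to a file, a few lines of JavaScript can turn the `key: value` text back into objects. `parseRecords` is a hypothetical helper, the sample text is made up, and values that themselves contain newlines are not handled.

```javascript
// Hypothetical helper: parse "key: value" records separated by blank lines
// (the format eval.js prints for an array of objects) back into objects.
function parseRecords(text) {
  return text
    .split(/\n\s*\n/) // a blank line marks a record boundary
    .map((block) => block.trim())
    .filter((block) => block.length > 0)
    .map((block) => {
      const record = {};
      for (const line of block.split("\n")) {
        const idx = line.indexOf(": ");
        if (idx === -1) continue; // skip lines that are not "key: value"
        record[line.slice(0, idx)] = line.slice(idx + 2);
      }
      return record;
    });
}

// Made-up sample, as it might look after redirecting eval.js output to a file.
const saved =
  "text: Home\nhref: https://example.com/\n\ntext: About\nhref: https://example.com/about\n";
console.log(parseRecords(saved));
```

The point is that none of the intermediate data has to travel through the agent’s context: the agent writes the file once and then only reads whatever summary the follow-up code prints.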

And it’s ridiculously easy to add a new tool or modify an existing tool for my needs. Let me explain.

Adding the Pick Tool

When the agent and I try to come up with a scraping method for a specific site, it is often more efficient if I am able to point to DOM elements by clicking directly on them. To make this super easy, I can just create a picker. Here’s what I add to the README:

## Pick Elements

\`\`\`bash

./pick.js "Click the submit button"

\`\`\`

Interactive element picker. Click to select, Cmd/Ctrl+Click for multi-select, Enter to finish.

And here is the code:

#!/usr/bin/env node

import puppeteer from "puppeteer-core";

const message = process.argv.slice(2).join(" ");

if (!message) {

console.log("Usage: pick.js 'message'");

console.log("\nExample:");

console.log(' pick.js "Click the submit button"');

process.exit(1);

}

const b = await puppeteer.connect({

browserURL: "http://localhost:9222",

defaultViewport: null,

});

const p = (await b.pages()).at(-1);

if (!p) {

console.error("✗ No active tab found");

process.exit(1);

}

await p.evaluate(() => {

if (!window.pick) {

window.pick = async (message) => {

if (!message) {

throw new Error("pick() requires a message parameter");

}

return new Promise((resolve) => {

const selections = [];

const selectedElements = new Set();

const overlay = document.createElement("div");

overlay.style.cssText =

"position:fixed;top:0;left:0;width:100%;height:100%;z-index:2147483647;pointer-events:none";

const highlight = document.createElement("div");

highlight.style.cssText =

"position:absolute;border:2px solid #3b82f6;background:rgba(59,130,246,0.1);transition:all 0.1s";

overlay.appendChild(highlight);

const banner = document.createElement("div");

banner.style.cssText =

"position:fixed;bottom:20px;left:50%;transform:translateX(-50%);background:#1f2937;color:white;padding:12px 24px;border-radius:8px;font:14px sans-serif;box-shadow:0 4px 12px rgba(0,0,0,0.3);pointer-events:auto;z-index:2147483647";

const updateBanner = () => {

banner.textContent = `${message} (${selections.length} selected, Cmd/Ctrl+click to add, Enter to finish, ESC to cancel)`;

};

updateBanner();

document.body.append(banner, overlay);

const cleanup = () => {

document.removeEventListener("mousemove", onMove, true);

document.removeEventListener("click", onClick, true);

document.removeEventListener("keydown", onKey, true);

overlay.remove();

banner.remove();

selectedElements.forEach((el) => {

el.style.outline = "";

});

};

const onMove = (e) => {

const el = document.elementFromPoint(e.clientX, e.clientY);

if (!el || overlay.contains(el) || banner.contains(el)) return;

const r = el.getBoundingClientRect();

highlight.style.cssText = `position:absolute;border:2px solid #3b82f6;background:rgba(59,130,246,0.1);top:${r.top}px;left:${r.left}px;width:${r.width}px;height:${r.height}px`;

};

const buildElementInfo = (el) => {

const parents = [];

let current = el.parentElement;

while (current && current !== document.body) {

const parentInfo = current.tagName.toLowerCase();

const id = current.id ? `#${current.id}` : "";

// classList also works for SVG elements, where className is not a string

const cls = current.classList.length

? `.${[...current.classList].join(".")}`

: "";

parents.push(parentInfo + id + cls);

current = current.parentElement;

}

return {

tag: el.tagName.toLowerCase(),

id: el.id || null,

class: el.className || null,

text: el.textContent?.trim().slice(0, 200) || null,

html: el.outerHTML.slice(0, 500),

parents: parents.join(" > "),

};

};

const onClick = (e) => {

if (banner.contains(e.target)) return;

e.preventDefault();

e.stopPropagation();

const el = document.elementFromPoint(e.clientX, e.clientY);

if (!el || overlay.contains(el) || banner.contains(el)) return;

if (e.metaKey || e.ctrlKey) {

if (!selectedElements.has(el)) {

selectedElements.add(el);

el.style.outline = "3px solid #10b981";

selections.push(buildElementInfo(el));

updateBanner();

}

} else {

cleanup();

const info = buildElementInfo(el);

resolve(selections.length > 0 ? selections : info);

}

};

const onKey = (e) => {

if (e.key === "Escape") {

e.preventDefault();

cleanup();

resolve(null);

} else if (e.key === "Enter" && selections.length > 0) {

e.preventDefault();

cleanup();

resolve(selections);

}

};

document.addEventListener("mousemove", onMove, true);

document.addEventListener("click", onClick, true);

document.addEventListener("keydown", onKey, true);

});

};

}

});

const result = await p.evaluate((msg) => window.pick(msg), message);

if (Array.isArray(result)) {

for (let i = 0; i < result.length; i++) {

if (i > 0) console.log("");

for (const [key, value] of Object.entries(result[i])) {

console.log(`${key}: ${value}`);

}

}

} else if (typeof result === "object" && result !== null) {

for (const [key, value] of Object.entries(result)) {

console.log(`${key}: ${value}`);

}

} else {

console.log(result);

}

await b.disconnect();

Whenever I feel it’s faster for me to click on a group of DOM elements instead of having the agent explore the DOM structure, I can just tell it to use the pick tool. It is extremely efficient and allows me to create scrapers in no time. It’s also great for adjusting the scraper if a site’s DOM layout has changed.
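The picker reports tag, id and class for each element, which is usually enough for the agent to derive a selector. A sketch of what that step could look like in code; `toSelector` is a hypothetical helper that assumes the record shape returned by `buildElementInfo` and does not escape special characters in ids or class names.

```javascript
// Hypothetical helper: turn a picked element record into a CSS selector.
function toSelector(info) {
  // An id is normally unique, so it is enough on its own.
  if (info.id) return `#${info.id}`;
  // Otherwise combine the tag with all of its classes.
  const classes = (info.class || "")
    .trim()
    .split(/\s+/)
    .filter(Boolean)
    .map((c) => `.${c}`)
    .join("");
  return info.tag + classes;
}

console.log(toSelector({ tag: "span", id: null, class: "titleline" }));
// prints "span.titleline"
```

In practice the agent would still check such a selector against the page, for example with eval.js and `document.querySelectorAll`, before baking it into a scraper.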

If you’re having trouble understanding what this tool does, don’t worry, I’ll have a video at the end of the blog post where you can see it in action. Before we look into that, let me show you an additional tool.

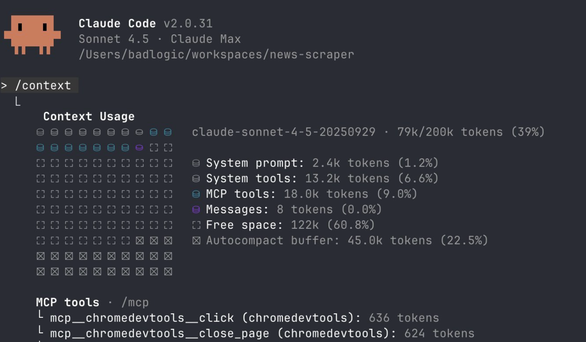

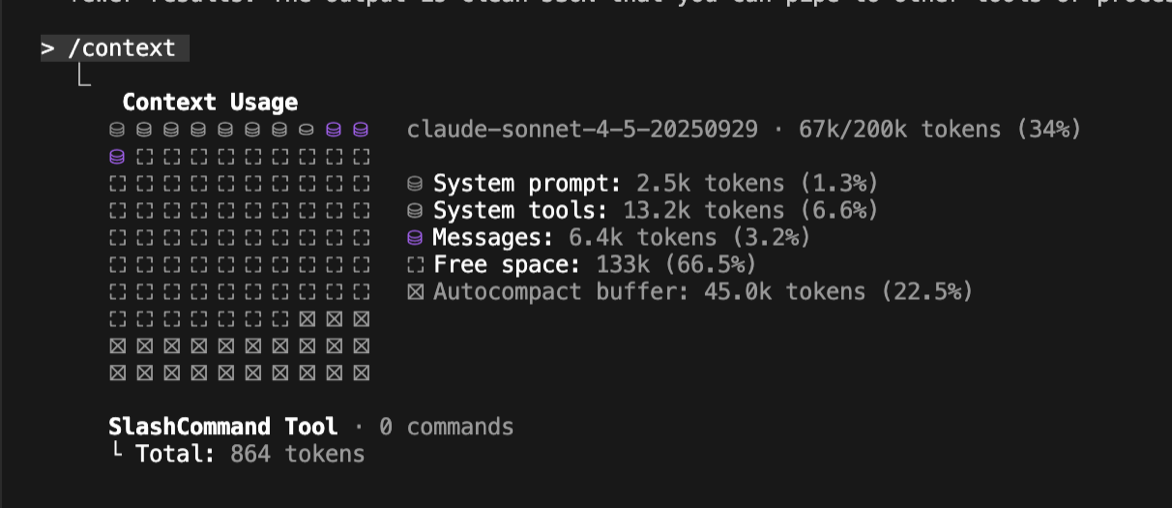

Adding the Cookies Tool

During one of my recent scraping adventures, I needed a site’s HTTP-only cookies so that the deterministic scraper could pretend it was me. The Evaluate JavaScript tool cannot handle this because it executes in the page context. But it took me not even a minute to instruct Claude to build that tool, add it to the README, and away we went.

This is much easier than adjusting, testing, and debugging an existing MCP server.
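The cookies tool itself is not shown here. As a rough sketch of the idea only: assuming the cookies are read outside the page context through Puppeteer (for example with `page.cookies()`, which also returns HTTP-only cookies; the exact call depends on the Puppeteer version), the part that hands them to a scraper could look like this. `toCookieHeader` is a hypothetical helper, the sample cookies are made up, and the domain matching is deliberately simplified.

```javascript
// Hypothetical helper: build a Cookie request header from cookie objects
// shaped like the ones Puppeteer returns ({ name, value, domain, httpOnly, ... }).
function toCookieHeader(cookies, host) {
  return cookies
    // Simplified matching: every cookie is treated as valid for its domain
    // and all subdomains, with or without a leading dot.
    .filter((c) => {
      const domain = c.domain.replace(/^\./, "");
      return host === domain || host.endsWith(`.${domain}`);
    })
    .map((c) => `${c.name}=${c.value}`)
    .join("; ");
}

// Made-up sample data for illustration.
const sample = [
  { name: "session", value: "abc123", domain: ".example.com", httpOnly: true },
  { name: "theme", value: "dark", domain: "example.com", httpOnly: false },
  { name: "other", value: "x", domain: "tracker.test", httpOnly: false },
];

console.log(toCookieHeader(sample, "www.example.com"));
// prints "session=abc123; theme=dark"
```

A deterministic scraper can then send the resulting string as the `Cookie` header of its requests.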

A Contrived Example

Let me illustrate the use of this set of tools with a contrived example. I decided to build a simple Hacker News scraper, where I basically pick the DOM elements for the agent, based on which it can then write a minimal Node.js scraper. Here’s what it looks like in action. I sped up some sections where Claude was being its usual slow self.

Real-world scraping tasks will look a little more involved. Plus, it doesn’t make sense to do this for a simple site like Hacker News. But you get the idea.

Final token tally:

Making it reusable by all agents

Here’s how I’ve set things up so I can use it with Claude Code and other agents. I have a folder agent-tools in my home directory. I then clone the individual tools’ repositories, like the browser tools repository above, into that folder. Then I set up an alias:

alias cl="PATH=$PATH:/Users/badlogic/agent-tools/browser-tools: && claude --dangerously-skip-permissions"

This way all scripts are available in Claude’s session, but do not pollute my normal environment. I also prefix each script with the full tool name, e.g. browser-tools-start.js, to eliminate name conflicts. I also add a sentence to the README telling the agent that all scripts are available globally. This way, the agent doesn’t need to change its working directory just to call a tool script, which saves a few tokens here and there and makes the agent less likely to get confused by constant working directory changes.

Finally, I add the agent-tools directory as a working directory via Claude Code’s /add-dir, so I can use @README.md to reference the README file of a specific tool and bring it into the agent’s context. I prefer this over Anthropic’s skill auto-discovery, which I found doesn’t work reliably in practice. It also means I save a few more tokens: Claude Code injects the frontmatter of all skills it finds into the system prompt (or the first user message, I forget, see https://cchistory.mariozechner.at).

In Conclusion

These tools are extremely easy to build, give you all the freedom you need, and make you, your agent, and your token usage efficient. You can find the browser tools on GitHub.

This general principle applies to any type of harness that has some kind of code execution environment. Think outside the MCP box and you will find that this is far more powerful than the more rigid structure you have to follow with MCP.

However, with great power also comes great responsibility. You have to come up with a structure for how to build and maintain those tools yourself. Anthropic’s skill system could be one way to do this, although it is less transferable to other agents. Or you follow my setup above.
