These two versions of fast mode are very different. Anthropic’s offers up to 2.5x as many tokens per second (so around 170, up from Opus 4.6’s 65). OpenAI’s offers more than 1000 tokens per second (up from GPT-5.3-Codex’s 65 tokens per second, so about 15x). So OpenAI’s fast mode is around six times faster than Anthropic’s.
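For reference, here is the arithmetic behind those ratios, using only the rough figures quoted above. Note that 2.5 × 65 is about 163, not quite the “around 170” figure, so treat all of these as ballpark numbers rather than fresh measurements.

```python
def speedup(new_tps: float, old_tps: float) -> float:
    """Ratio of two generation speeds, both in tokens per second."""
    return new_tps / old_tps

# Rough figures quoted in the post (tokens per second).
OPUS_4_6_TPS = 65
GPT_5_3_CODEX_TPS = 65
ANTHROPIC_FAST_MULTIPLIER = 2.5   # "up to 2.5x"
OPENAI_FAST_TPS = 1000            # "more than 1000", so treat as a lower bound

anthropic_fast_tps = OPUS_4_6_TPS * ANTHROPIC_FAST_MULTIPLIER
openai_gain = speedup(OPENAI_FAST_TPS, GPT_5_3_CODEX_TPS)
openai_vs_anthropic = speedup(OPENAI_FAST_TPS, anthropic_fast_tps)

print(f"Anthropic fast mode: ~{anthropic_fast_tps:.0f} tokens/s")
print(f"OpenAI fast mode: ~{openai_gain:.1f}x over GPT-5.3-Codex")
print(f"OpenAI fast vs Anthropic fast: ~{openai_vs_anthropic:.1f}x")
```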

However, Anthropic’s big advantage is that they’re serving their actual model. When you use their fast mode, you get the real Opus 4.6, while when you use OpenAI’s fast mode you get GPT-5.3-Codex-Spark, not the real GPT-5.3-Codex. Spark is indeed very fast, but it’s a notably less capable model: good enough for many tasks, but it gets confused and messes up tool calls in ways that vanilla GPT-5.3-Codex never would.

Why the differences? The AI labs aren’t advertising the details of how their fast modes work, but I’m pretty confident it’s something like this: Anthropic’s fast mode is backed by low-batch-size inference, while OpenAI’s fast mode is backed by special monster Cerebras chips. Let me unpack that a bit.

How Anthropic’s fast mode works

The tradeoff at the heart of AI inference economics is batching, because the main bottleneck is memory. GPUs are very fast, but moving data onto a GPU is not. Every inference operation requires copying all the tokens of the user’s prompt onto the GPU before inference can start. Batching multiple users together thus increases overall throughput at the cost of making users wait for the batch to fill.
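A toy model can make that tradeoff concrete. Everything in it is an assumption for illustration: each forward pass has a fixed cost that doesn’t depend on batch size plus a small per-sequence cost, users arrive at evenly spaced intervals, and the server waits for a full batch before starting. The numbers are made up; only the direction of the trends matters.

```python
from dataclasses import dataclass


@dataclass
class ToyServer:
    """Hypothetical batching model; none of these numbers come from a real system."""
    fixed_step_s: float    # per forward pass, independent of batch size (e.g. moving data to compute)
    per_seq_step_s: float  # extra time per sequence in the batch
    arrivals_per_s: float  # users arriving per second, evenly spaced

    def step_time(self, batch: int) -> float:
        return self.fixed_step_s + batch * self.per_seq_step_s

    def throughput(self, batch: int) -> float:
        """Tokens per second summed over the batch (one token per sequence per step)."""
        return batch / self.step_time(batch)

    def avg_fill_wait(self, batch: int) -> float:
        """Mean seconds a user waits for the batch to fill before anything runs."""
        # With evenly spaced arrivals, the first user waits for (batch - 1) more
        # arrivals and the last waits for none, so the mean is half of that.
        return (batch - 1) / (2 * self.arrivals_per_s)


server = ToyServer(fixed_step_s=0.010, per_seq_step_s=0.0005, arrivals_per_s=20.0)
for batch in (1, 8, 64):
    print(f"batch={batch:3d}  throughput={server.throughput(batch):7.1f} tok/s  "
          f"avg wait={server.avg_fill_wait(batch):.2f} s")
```

With these invented parameters, total throughput climbs as the batch grows, and so does the average time each user spends waiting before their request even starts.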

A good analogy is a bus system. If you had zero batching for passengers (if, whenever someone boarded a bus, the bus departed immediately), commutes would be much faster for the people who managed to get on a bus. But obviously overall throughput would be much lower, since people would wait at bus stops for hours until they actually managed to board one.

Anthropic’s fast mode offering is basically a bus pass that guarantees the bus leaves as soon as you get on. It costs six times as much, because you’re effectively paying for all the other people who could have gotten on the bus with you, but it’s way faster because you spend zero time waiting for the bus to leave.

Obviously I can’t be fully certain this is right. Maybe they have access to some new ultra-fast compute that they’re running this on, or they’re doing some algorithmic trick nobody else has thought of. But I’m pretty sure this is it. Brand-new compute or algorithmic tricks would likely require changes to the model (see below for OpenAI’s system), and “six times more expensive for 2.5x faster” is right in the ballpark for the kind of improvement you’d expect when switching to a low-batch-size regime.
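One way to sanity-check the “six times the cost for 2.5x the speed” intuition is a deliberately simple accounting model. This is my assumption, not Anthropic’s published pricing logic: the GPU time spent per generated token is the forward-pass time divided by the batch size, and each user gets one token per forward pass. Under those assumptions, the cost multiplier equals the batch-size reduction divided by the speedup, regardless of hardware details.

```python
def cost_multiplier(old_batch: int, new_batch: int, speedup: float) -> float:
    """Relative GPU time per token after shrinking the batch (toy accounting).

    Assumptions: GPU time per token = step_time / batch, and per-user speed =
    1 / step_time. If the new step is `speedup` times shorter, then
    (new_step / new_batch) / (old_step / old_batch) = (old_batch / new_batch) / speedup.
    """
    return (old_batch / new_batch) / speedup


def implied_batch_reduction(cost_mult: float, speedup: float) -> float:
    """Invert the same accounting: how many times smaller the batch would be."""
    return cost_mult * speedup


# Plugging in the post's figures (6x price, 2.5x speed) as a consistency check only.
print(implied_batch_reduction(6.0, 2.5))
print(cost_multiplier(old_batch=30, new_batch=2, speedup=2.5))
```

Under this toy accounting, the post’s numbers would correspond to a batch roughly 15x smaller. That is a property of the simplified model, not a claim about Anthropic’s real batch sizes, and real prices also reflect margins and demand, which this ignores.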

How OpenAI’s fast mode works

OpenAI’s fast mode does not work anything like this. You can tell that simply because they’re introducing a new, worse model for it. There would be no reason to do that if they were only changing the batch size. Also, they told us in the announcement blog post exactly what’s backing their fast mode: Cerebras.

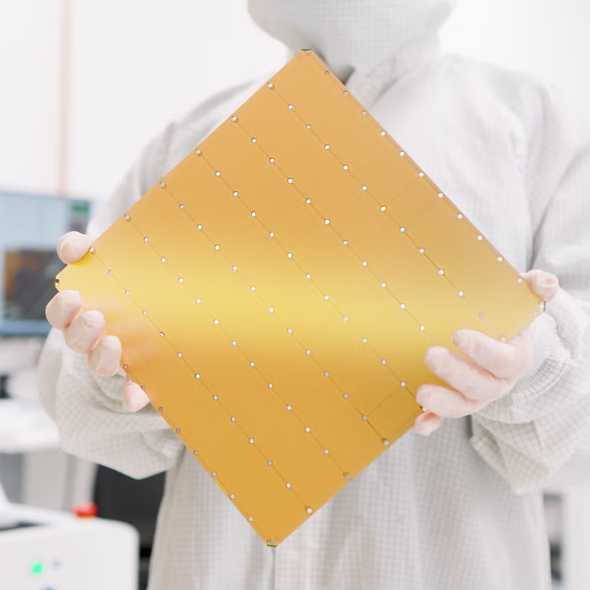

OpenAI announced its Cerebras partnership a month earlier, in January. What’s Cerebras? They build “ultra low-latency compute”. What this means in practice is that they build giant chips. An H100 chip (fairly close to the frontier of inference chips) is just over a square inch in size. A Cerebras chip is 70 square inches.

You can see from pictures that the Cerebras chip has a grid-and-holes pattern all over it. That’s because silicon wafers this big are supposed to be broken into dozens of chips. Instead, Cerebras etches a giant chip over the entire thing.

The bigger the chip, the more internal memory it can have. The idea is to have a chip with enough SRAM to fit the entire model, so inference can happen entirely in-memory. GPU SRAM is typically measured in the tens of megabytes. That means a lot of inference time is spent streaming portions of the model weights from outside SRAM into the GPU’s compute units. If you could stream all of that from the (very fast) SRAM instead, inference would get a big speedup: fifteen times faster, as it turns out!
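The speedup argument is about memory bandwidth. A common back-of-the-envelope bound, assuming one sequence at a time and that generating each token requires reading every weight once, is that tokens per second can’t exceed memory bandwidth divided by the size of the weights. The bandwidth values below are arbitrary placeholders, not specs for any real GPU or Cerebras part.

```python
def max_tokens_per_s(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound for batch-size-1 decoding: every token reads all weights once.

    Ignores compute time, KV-cache reads and everything else, so real speeds are lower.
    """
    return bandwidth_bytes_per_s / weight_bytes


WEIGHTS = 40e9          # a hypothetical 40 GB model
OFF_CHIP_BW = 4e12      # placeholder: weights streamed in from off-chip memory
ON_CHIP_BW = 4e13       # placeholder: weights already resident in on-chip SRAM

slow = max_tokens_per_s(WEIGHTS, OFF_CHIP_BW)
fast = max_tokens_per_s(WEIGHTS, ON_CHIP_BW)
print(f"off-chip bound: {slow:.0f} tok/s, on-chip bound: {fast:.0f} tok/s")
```

The bound scales linearly with bandwidth, so in this simplified picture the gain from keeping weights on-chip is just the bandwidth ratio. The fifteen-times figure in the post comes from the reported speeds, not from this formula.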

So how much internal memory does the latest Cerebras chip have? 44GB. This puts OpenAI in kind of an awkward position. 44GB is enough to fit a small model (~20B params at fp16, ~40B params at int8 quantization), but clearly not enough to fit GPT-5.3-Codex. That’s why they’re offering a brand-new model, and why the Spark model has a bit of “small model smell” to it: it’s a smaller distil of the much larger GPT-5.3-Codex model.
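The ~20B and ~40B figures follow from bytes per parameter: fp16 uses 2 bytes per weight and int8 uses 1. The sketch below assumes decimal gigabytes (1 GB = 1e9 bytes). Weights alone cap out at 22B and 44B params; the 4 GB reserve for the KV cache and activations is a hypothetical allowance that happens to land on the post’s round numbers, and the real reserve depends on context length and batch size.

```python
# Bytes per parameter for the two precisions mentioned in the post.
BYTES_PER_PARAM = {"fp16": 2, "int8": 1}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Size of the weights alone, in decimal GB."""
    return params_billion * BYTES_PER_PARAM[dtype]


def max_params_billion(memory_gb: float, dtype: str, reserved_gb: float = 0.0) -> float:
    """Largest model (in billions of params) whose weights fit after a reserve."""
    return (memory_gb - reserved_gb) / BYTES_PER_PARAM[dtype]


SRAM_GB = 44.0
RESERVED_GB = 4.0  # hypothetical allowance for KV cache and activations

for dtype in ("fp16", "int8"):
    print(dtype,
          max_params_billion(SRAM_GB, dtype),
          max_params_billion(SRAM_GB, dtype, RESERVED_GB))
```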

OpenAI’s version is far more technically impressive

It’s interesting that the two major labs have two very different approaches to building fast AI inference. If I had to guess at a conspiracy theory, it would go something like this:

- OpenAI partnered with Cerebras in mid-January, presumably to work on putting OpenAI models on a fast Cerebras chip.

- Anthropic has no similar play available, but they know OpenAI will be making some kind of bombshell announcement in February, and they want something in the news cycle to compete with it.

- So Anthropic scrambles to put together the kind of fast inference they can provide: simply lowering the batch size on their existing inference stack.

- Anthropic (presumably) times its announcement for a few days before OpenAI unveils its much more complex Cerebras implementation, so that it looks like OpenAI copied them.

Obviously OpenAI’s achievement here is more technically impressive. Getting a model running on Cerebras chips is not trivial, because they’re so strange. Training a 20B or 40B param distil of GPT-5.3-Codex that’s still reasonably good is not trivial either. But I give Anthropic credit for finding a sneaky way to get ahead of the announcement, one that would be largely opaque to non-technical people. It reminds me of OpenAI’s sneaky introduction of the Responses API in mid-2025 to help them conceal their reasoning tokens.

Is fast AI inference the next big thing?

Seeing two major labs introduce this feature might make you think that fast AI inference is the new major goal they’re chasing. I don’t think it is. If my theory above is right, Anthropic doesn’t care that much about fast inference; they just didn’t want to appear behind OpenAI. And OpenAI is mainly exploring the capabilities of its new Cerebras partnership. It’s still largely an open question what kinds of models can fit on these giant chips, how useful those models will be, and whether the economics will make sense.

I personally don’t find “fast, less-capable inference” particularly useful. I’ve been messing with it in Codex and I don’t like it. The usefulness of AI agents is dominated by how few mistakes they make, not by their raw speed. Buying 6x the speed at the cost of 20% more mistakes is a bad bargain, because most of the user’s time is spent handling mistakes instead of waiting for the model.
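To make that bargain concrete, here is a toy model in which a task’s wall-clock time is generation time plus time spent handling mistakes. The session numbers are invented for illustration. When each mistake is expensive to clean up, a 6x speedup with 20% more mistakes loses; when mistakes are cheap, the same trade can come out ahead.

```python
def task_minutes(gen_minutes: float, mistakes: float, minutes_per_mistake: float) -> float:
    """Toy model: wall-clock time = waiting on the model + cleaning up its mistakes."""
    return gen_minutes + mistakes * minutes_per_mistake


def fast_mode_wins(gen_minutes: float, mistakes: float, minutes_per_mistake: float,
                   speedup: float, extra_mistake_frac: float) -> bool:
    """True if the faster, sloppier model finishes the task sooner overall."""
    base = task_minutes(gen_minutes, mistakes, minutes_per_mistake)
    fast = task_minutes(gen_minutes / speedup,
                        mistakes * (1 + extra_mistake_frac),
                        minutes_per_mistake)
    return fast < base


# Hypothetical session: 6 min of generation, 5 mistakes, 10 min to handle each one.
print(fast_mode_wins(6.0, 5.0, 10.0, speedup=6.0, extra_mistake_frac=0.2))
# Same trade when mistakes are cheap to fix (1 min each).
print(fast_mode_wins(6.0, 5.0, 1.0, speedup=6.0, extra_mistake_frac=0.2))
```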

However, it’s certainly possible that fast, less-capable inference becomes a core lower-level primitive in AI systems. Claude Code already uses Haiku for some tasks. Maybe OpenAI will end up using Spark in a similar way.
