The startup, which is going public with its activities for the first time today, is called HyprLabs. Its 17-person team (just eight of them full-time) is split between Paris and San Francisco, and the company is run by Zoox co-founder Tim Kentley-Klay, an autonomous vehicle industry veteran who abruptly exited the now-Amazon-owned firm in 2018. Hypr has taken in relatively little funding, $5.5 million since 2022, but its ambitions are broad. Eventually, it plans to build and operate its own robots. “Think of the love child of R2-D2 and Sonic the Hedgehog,” says Kentley-Klay. “It’s going to define a new category that doesn’t currently exist.”

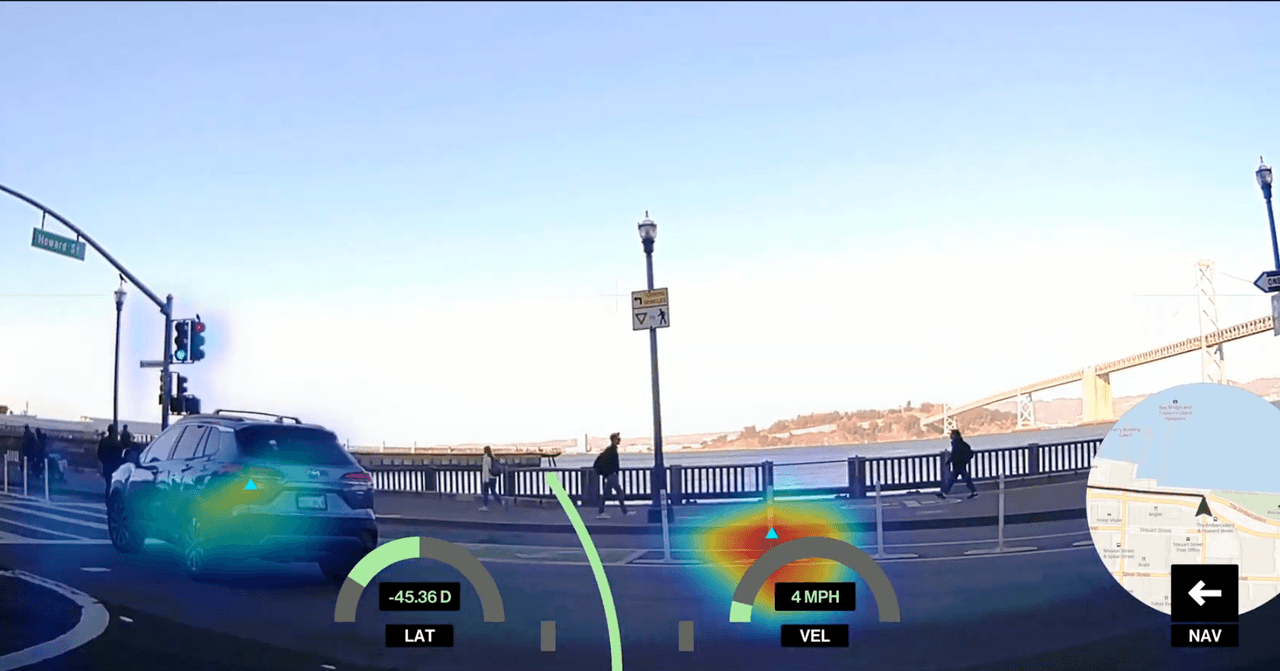

For now, though, the startup is announcing its own software product, called Hyprdrive, which it bills as a leap forward in how engineers train vehicles to drive themselves. Leaps like this are happening all over robotics, thanks to advances in machine learning that promise to cut both the cost of training autonomous vehicle software and the amount of human labor it requires. These training advances have brought new momentum to a field that for years languished in a “trough of disillusionment,” as tech developers missed their own deadlines for deploying robots in public spaces. Now, robotaxis carry paying passengers in more and more cities, and automakers are making ambitious new promises about bringing self-driving to customers’ personal cars.

But getting from “driving very well” to “driving more safely than a human” with a small, nimble, inexpensive team is its own tall hurdle. “I can’t, hand on my heart, tell you that this will work,” says Kentley-Klay. “But what we’ve created is a really solid signal. It just needs to be scaled up.”

Old techniques, new tricks

HyprLabs’ approach to training its software differs from the ways other robotics startups are teaching their systems to drive themselves.

First, some background: For years, the big battle in autonomous vehicles was between those who used only cameras to train their software (Tesla!) and those who also relied on other sensors (Waymo, Cruise!), including once-pricey lidar and radar. But beneath the surface, deeper philosophical differences were at work.

Camera-only purveyors like Tesla wanted to save money while planning to launch a massive fleet of robots; for a decade, CEO Elon Musk has been planning to suddenly turn all of his customers’ cars into self-driving ones through software updates. The upside was that these companies had loads of data, because their cars, not yet self-driving, collected images wherever they drove. That data was fed into an “end-to-end” machine learning model trained through reinforcement: the system takes in images (a bike, say) and spits out driving commands (move the steering wheel to the left and accelerate quickly to avoid a collision). “It’s like training a dog,” says Philip Koopman, an autonomous vehicle software and safety researcher at Carnegie Mellon University. “In the end, you say ‘bad dog’ or ‘good dog.’”
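The “good dog, bad dog” loop Koopman describes can be illustrated with a deliberately tiny sketch. Everything in it is hypothetical: real end-to-end systems push raw camera pixels through large neural networks, while this toy reduces each frame to a single made-up label (`bike_on_right` and so on) and keeps a lookup table of scores in place of a network. The update rule is REINFORCE, a textbook policy-gradient method picked for illustration; nothing in the reporting says which algorithm Tesla or anyone else actually uses.

```python
import math
import random

# Hypothetical toy world: each "camera frame" is reduced to one label.
OBSERVATIONS = ["bike_on_right", "road_clear", "bike_on_left"]
ACTIONS = ["steer_left", "straight", "steer_right"]

# Hypothetical rule used only to hand out rewards; the policy never sees it.
SAFE_ACTION = {
    "bike_on_right": "steer_left",
    "road_clear": "straight",
    "bike_on_left": "steer_right",
}


def softmax(logits):
    """Turn raw scores into action probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reward(obs, action):
    """+1 for 'good dog' (no collision), -1 for 'bad dog'."""
    return 1.0 if SAFE_ACTION[obs] == action else -1.0


def train(episodes=2000, lr=0.1, seed=0):
    """REINFORCE on a tabular softmax policy mapping observation -> action."""
    rng = random.Random(seed)
    logits = {obs: [0.0] * len(ACTIONS) for obs in OBSERVATIONS}
    for _ in range(episodes):
        obs = rng.choice(OBSERVATIONS)          # a new "frame" arrives
        probs = softmax(logits[obs])
        a = rng.choices(range(len(ACTIONS)), weights=probs)[0]  # try an action
        r = reward(obs, ACTIONS[a])             # good dog / bad dog
        # Gradient of log pi(a | obs) w.r.t. the logits is onehot(a) - probs;
        # scaling by the reward reinforces good actions and suppresses bad ones.
        for i in range(len(ACTIONS)):
            grad = (1.0 if i == a else 0.0) - probs[i]
            logits[obs][i] += lr * r * grad
    return logits


def drive(logits, obs):
    """After training, pick the highest-scoring action for a frame."""
    scores = logits[obs]
    return ACTIONS[scores.index(max(scores))]


if __name__ == "__main__":
    policy = train()
    for obs in OBSERVATIONS:
        print(obs, "->", drive(policy, obs))
```

The point of the toy is that the trainer never tells the policy *why* an action was good; it only scores the outcome, and the policy drifts toward whatever earned “good dog.”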

<a href