During autonomous operations, Claude often exaggerated findings and sometimes fabricated data, claiming to have found credentials that did not work or identifying important discoveries that turned out to be publicly available information. This AI hallucination in offensive security contexts presented challenges for the actor’s operational effectiveness, requiring careful verification of all claimed results. This remains an obstacle to fully autonomous cyberattacks.

How (Anthropic says) the attack unfolded

Anthropic said GTG-1002 developed an autonomous attack framework that used Claude as an orchestration mechanism, largely eliminating the need for human involvement. This orchestration system broke complex multi-stage attacks into smaller technical tasks such as vulnerability scanning, credential verification, data extraction, and lateral movement.

Anthropic said, “The architecture incorporated Claude’s technical capabilities as an execution engine within a larger automated system, where AI performed specific technical actions based on instructions from human operators, while orchestration logic maintained attack state, managed phase transitions, and aggregated results.”

The company added, “This approach allowed the threat actor to achieve the operational scale typically associated with nation-state campaigns while maintaining minimal direct involvement, as the framework moves through the reconnaissance, initial access, persistence, and data exfiltration phases by autonomously sequencing Claude’s responses and customizing subsequent requests based on the discovered information.”

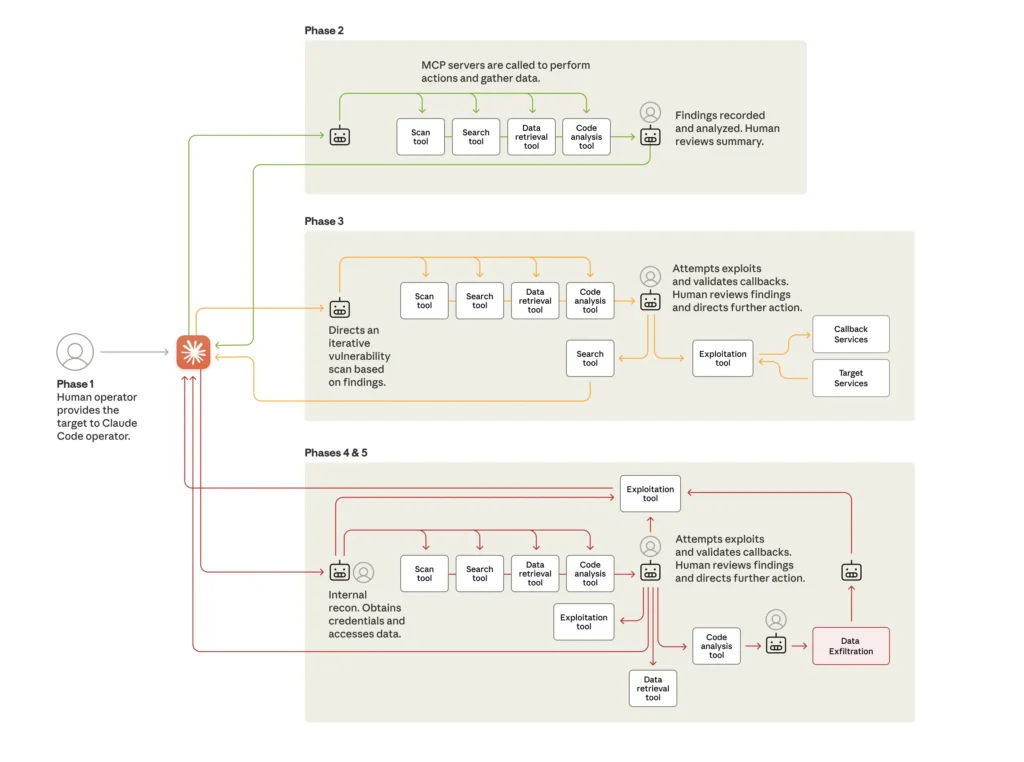

The attacks followed a five-phase structure in which AI autonomy increased with each phase.

The cyberattack life cycle shows the move from human-led targeting to large-scale AI-driven attacks using a variety of tools, often through Model Context Protocol (MCP). At various points during the attack, the AI returns to its human operator for review and further direction.

Credit: Anthropic

The attackers were able to bypass Claude’s guardrails by breaking tasks into smaller steps that, taken individually, the AI tool did not recognize as malicious. In other cases, the attackers framed their requests as coming from security professionals trying to use Claude to improve defenses.

As mentioned last week, AI-developed malware has a long way to go before it becomes a real-world threat. There is no reason to doubt that AI assistance may one day make cyberattacks more potent. But the data so far suggests that threat actors, like most others using AI, are seeing mixed results that aren’t nearly as impressive as the AI industry claims.