A few months after launching Qwen3-VL, Alibaba has released a detailed technical report on the open multimodal model. Data shows the system excels at image-based math tasks and can analyze hours of video footage.

The system handles massive data loads, processing two hours of video or hundreds of document pages within a 256,000-token context window.

In “needle-in-a-haystack” tests, the flagship 235-billion-parameter model located individual frames in a 30-minute video with 100 percent accuracy. Even with a two-hour video containing approximately one million tokens, the accuracy remained at 99.5 percent. The test works by inserting semantically important “needle” frames at random locations in the long video, which the system must find and analyze.

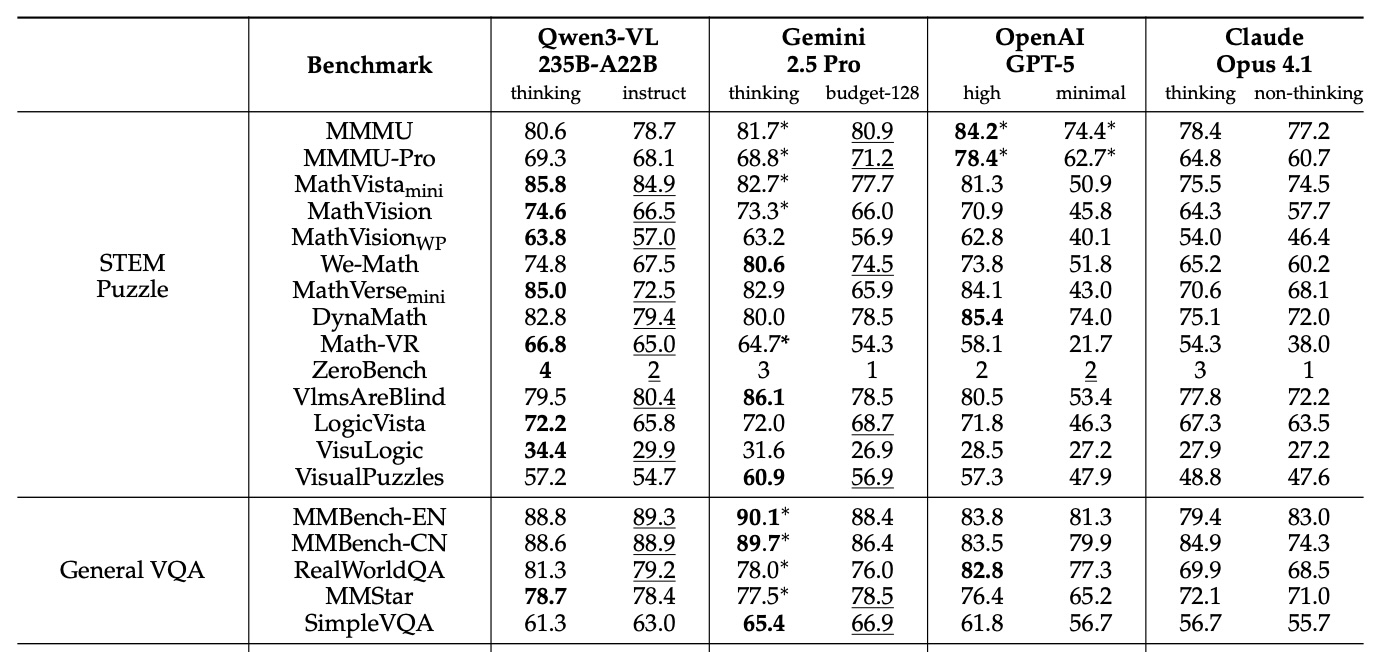
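
To make the test setup concrete, here is a minimal sketch of how such a needle-in-a-haystack evaluation could be put together. It is an illustration only, not Alibaba's evaluation code: frames are represented by plain strings, and the function names `insert_needles` and `retrieval_accuracy` are hypothetical.

```python
import random


def insert_needles(haystack_frames, needle_frames, seed=0):
    """Insert each needle frame at a random position in the video.

    Frames are stand-in strings here (an assumption for illustration).
    Needles must be unique and must not already occur in the haystack.
    Returns the new frame list and a dict mapping needle -> final index.
    """
    rng = random.Random(seed)
    frames = list(haystack_frames)
    for needle in needle_frames:
        frames.insert(rng.randint(0, len(frames)), needle)
    # Read positions only after all insertions, since later inserts
    # can shift needles that were placed earlier.
    positions = {needle: frames.index(needle) for needle in needle_frames}
    return frames, positions


def retrieval_accuracy(predicted, truth):
    """Fraction of needles whose predicted index matches the true index."""
    hits = sum(1 for needle, pos in truth.items() if predicted.get(needle) == pos)
    return hits / len(truth)


if __name__ == "__main__":
    video = [f"frame_{i}" for i in range(1000)]
    frames, truth = insert_needles(video, ["needle_a", "needle_b"], seed=42)
    # A real run would ask the model to locate the needles; here we
    # pretend the model answered perfectly.
    print(retrieval_accuracy(dict(truth), truth))
```

In a real evaluation, the `predicted` dictionary would come from the model's answers rather than from the ground truth.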

In published benchmarks, the Qwen3-VL-235B-A22B model often outperforms Gemini 2.5 Pro, OpenAI's GPT-5, and Claude Opus 4.1, even when those competitors use reasoning features or a high thinking budget. The model dominates on visual math tasks, scoring 85.8 percent on MathVista compared to 81.3 percent for GPT-5. On MathVision, it scores 74.6 percent, ahead of Gemini 2.5 Pro (73.3 percent) and GPT-5 (65.8 percent).

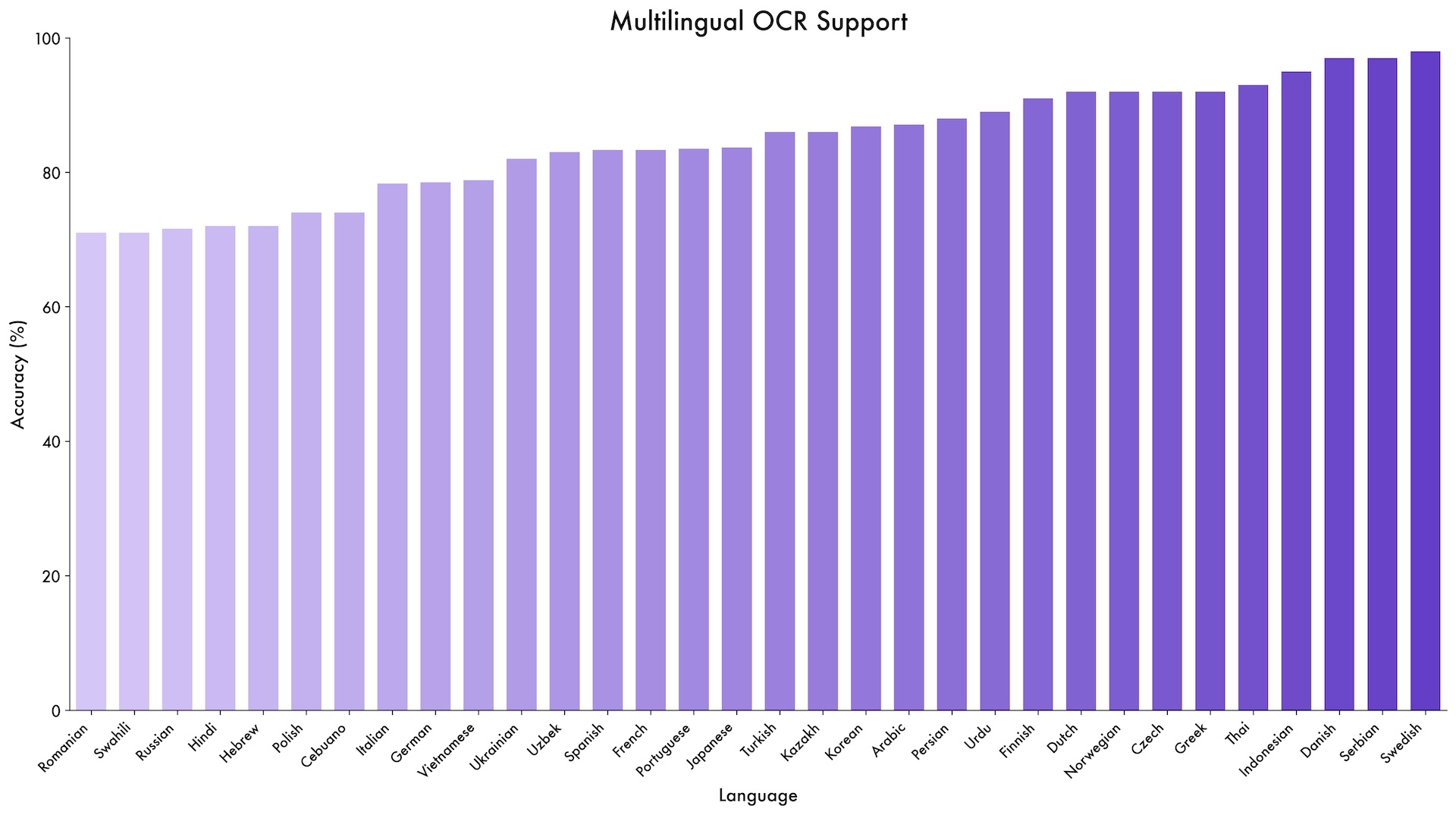

The model also shows range in specialized benchmarks. It scored 96.5 percent on the DocVQA document comprehension test and 875 points on OCRBench. It supports 39 languages, almost four times as many as its predecessor.

Alibaba claims the system demonstrates new capabilities in GUI agent functions. It achieved 61.8 percent accuracy on ScreenSpot Pro, which tests navigation in graphical user interfaces. On AndroidWorld, where the system must operate Android apps independently, Qwen3-VL-32B reached 63.7 percent.

The model also handles complex, multi-page PDF documents. It scored 56.2 percent on MMLongBench-Doc for long document analysis. On the CharXiv benchmark for scientific charts, it reached 90.5 percent on detail tasks and 66.2 percent on complex logic questions.

However, it is not a clean sweep. In the complex MMMU-Pro test, Qwen3-VL scored 69.3 percent, lagging behind GPT-5's 78.4 percent. Commercial competitors also generally lead in video QA benchmarks. The data suggests that Qwen3-VL is a specialist in visual math and documents but still lags behind in general reasoning.

Major technological advances for multimodal AI

The technical report outlines three main architectural upgrades. First, "Interleaved MRoPE" replaces the previous position embedding method. Instead of grouping the position representations into one block per dimension (time, horizontal, vertical), the new approach distributes the three dimensions evenly across the entire embedding. The goal of this change is to boost performance on longer videos.
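
The difference between the two layouts can be shown with a toy example. The sketch below is a simplified illustration and not the layout from the technical report: it only labels each rotary slot with the axis it would encode, and the function names and the round-robin order are assumptions.

```python
def grouped_layout(num_slots, axes=("t", "h", "w")):
    """Older style: each axis gets one contiguous block of slots."""
    if num_slots < len(axes):
        raise ValueError("need at least one slot per axis")
    block = num_slots // len(axes)
    # Any leftover slots at the end are assigned to the last axis.
    return [axes[min(i // block, len(axes) - 1)] for i in range(num_slots)]


def interleaved_layout(num_slots, axes=("t", "h", "w")):
    """Interleaved style: axes alternate round-robin across all slots."""
    return [axes[i % len(axes)] for i in range(num_slots)]


if __name__ == "__main__":
    print(grouped_layout(6))      # ['t', 't', 'h', 'h', 'w', 'w']
    print(interleaved_layout(6))  # ['t', 'h', 'w', 't', 'h', 'w']
```

In the grouped layout, time occupies only the first block of slots, while in the interleaved layout every axis appears throughout the whole range.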

Second, DeepStack technology allows the model to access intermediate results from the vision encoder, not just the final output. This gives the system access to visual information at different levels of detail.
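
The basic idea of reading out intermediate encoder results can be sketched in a few lines. This is a toy illustration under strong assumptions, not the DeepStack implementation: the "layers" are ordinary Python functions, and `run_encoder` and `tap_indices` are hypothetical names.

```python
def run_encoder(x, layers, tap_indices):
    """Run x through a stack of layers and keep selected intermediate outputs.

    Returns the final output plus a dict {layer_index: intermediate_output}
    for every layer index listed in tap_indices.
    """
    taps = {}
    for i, layer in enumerate(layers):
        x = layer(x)
        if i in tap_indices:
            taps[i] = x
    return x, taps


if __name__ == "__main__":
    # Stand-in "layers" acting on a number instead of image features.
    toy_layers = [lambda v: v + 1, lambda v: v * 2, lambda v: v - 3]
    final, taps = run_encoder(1, toy_layers, tap_indices={0, 1})
    print(final, taps)  # 1 {0: 2, 1: 4}
```

A language model that receives both `final` and the tapped values has access to early, detail-oriented features as well as the encoder's last, more abstract output.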

Third, a text-based timestamp system replaces the complex T-RoPE method found in Qwen2.5-VL. Instead of assigning a mathematical time position to each video frame, the system now inserts simple text markers like "<3.8 seconds>" directly into the input. This simplifies the process and improves the model's performance on time-based video tasks.
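
A rough sketch of what such an input could look like follows. Only the "<3.8 seconds>" marker style comes from the article; the `<frame>` placeholder, the function name, and the fixed sampling rate are assumptions made for illustration.

```python
def interleave_timestamps(num_frames, fps, frame_token="<frame>"):
    """Build an input string with a text timestamp in front of each frame.

    frame_token is a hypothetical placeholder for the visual tokens of
    one sampled frame; fps is the assumed sampling rate in frames/second.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    parts = []
    for i in range(num_frames):
        seconds = i / fps
        parts.append(f"<{seconds:.1f} seconds>{frame_token}")
    return "".join(parts)


if __name__ == "__main__":
    print(interleave_timestamps(3, fps=2.0))
    # <0.0 seconds><frame><0.5 seconds><frame><1.0 seconds><frame>
```

Because the timestamps are ordinary text, the model can read and quote them like any other tokens when answering questions about when something happens.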

Training at scale with a trillion tokens

Alibaba trained the model on up to 10,000 GPUs in four stages. After learning to link images and text, the system underwent full multimodal training on approximately one trillion tokens. Data sources include web scrapes, 3 million PDFs from Common Crawl, and over 60 million STEM tasks.

In later stages, the team gradually expanded the context window from 8,000 to 32,000 and eventually to 262,000 tokens. The "Thinking" variants received dedicated chain-of-thought training, allowing them to explicitly lay out reasoning steps for better results on complex problems.

Open weights under Apache 2.0

All Qwen3-VL models released since September are available under the Apache 2.0 license with open weights on Hugging Face. The lineup includes dense variants ranging from 2B to 32B parameters, as well as mixture-of-experts models: the 30B-A3B and the massive 235B-A22B.

While features like extracting frames from long videos aren't new (Google's Gemini 1.5 Pro handled this in early 2024), Qwen3-VL offers competitive performance in an open package. With the previous Qwen2.5-VL already common in research, the new model is likely to drive further open-source development.
