Cloudflare recently suffered a major outage, taking down a significant portion of the internet. They have written a useful summary of the design errors that led to the outage. When something similar happened recently to AWS, I wrote a detailed analysis of what else could go wrong in that process, along with some indicators.

Today, I’m not going to model in that much detail, but a system-theoretical model of the system raises some questions that I didn’t find answers to in the accident summary published by Cloudflare, and that I would want answered if I were to put Cloudflare between myself and my users.

In short, the blog post and the fixes suggested by Cloudflare mention a lot of control paths, but very few feedback paths. This is confusing to me, because it seems that the main problems in this accident were not due to lack of control.

The initial protocol mismatch in the feature file is a feedback problem (getting an overview of internal protocol conformance), and during the accident they had the control action required to fix the problem: copy in an older feature file. The reason they couldn’t do this right away was that they didn’t know what was going on.

Thus, the two important questions are

- Does the Cloudflare organization intentionally design the human-computer interface used by its operators?

- Does Cloudflare actively think about how their operators can get a better understanding of the ways their systems work, and don’t work?

The blog post suggests not.

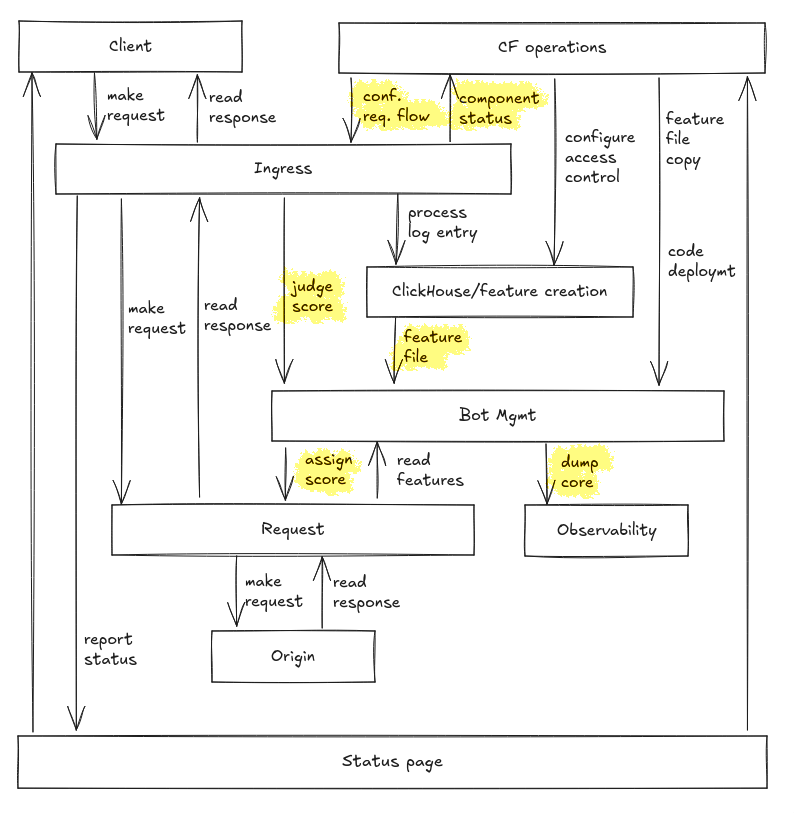

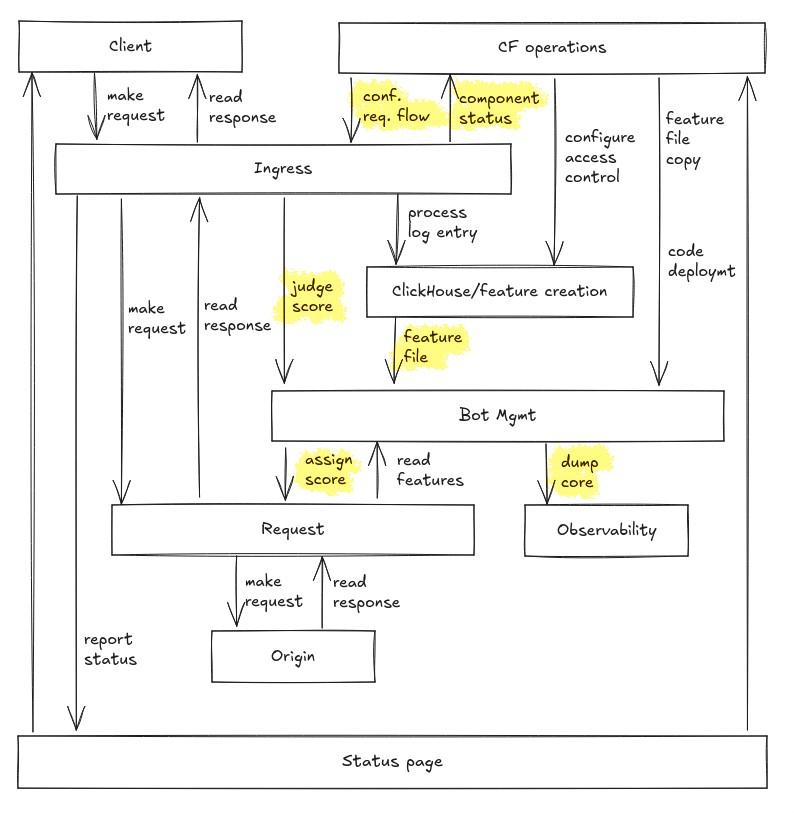

There are more questions for those interested in details. First of all, this is a simplistic control model, as good as I could put it together in just a few minutes. We will focus on the highlighted control actions, as they were closest to the accident in question.

Storming through the STPA process very casually, we come across many questions that are not raised in the report. Some of these questions may be clearly answered in the Cloudflare control panel or help documentation. I’m not in the market right now, so I won’t do that research. But if any of my readers are thinking about adopting Cloudflare, these are things they may want to consider!

- What if bot management takes too long to assign a score? Does the request pass on to the origin after a default timeout, or is the request rejected by default? Is there no timeout, and Cloudflare just holds the request until the client gets tired of waiting?

- Depending on how bot management is built and how it interacts with timeouts, could it assign a score to a request that has already left the system, i.e. one that has already been passed on to the origin or even answered to the client? What are the effects of that?

- What happens if bot management tries to read features from a request that is missing from the system?

- Can ingress ask for a score decision when bot management is not running? What are the effects of that? What if ingress believes that bot management has not assigned a score, even though it has?

- When there are problems processing a request, how is it treated: is it passed on or rejected?

- The feature file is a protocol used to communicate between services. Is this protocol (and any other such protocol) well specified? Are the engineers working on both sides of the communication aware of this? How does Cloudflare track the conformance of internal protocol implementations?

- How long can bot management go on with an old feature file before anyone realizes? Is there a way for bot management to ignore a newly created feature file? Would that be made known to the feature file generator?

- Can the feature file generator create a feature file whose features are not indicative of botness? Can bot management detect some of these cases and choose not to apply scores derived from such features? Does the feature file generator get that feedback?

- What is the process by which Cloudflare operators can reconfigure the request flow, for example to toggle off misbehaving components? Perhaps more importantly, what kind of information would they base such decisions on?

- What is the feedback path to Cloudflare operators from the observability tools that annotate core dumps with debugging information? They consume significant resources, but are the results mostly thrown away where no one pays attention?

- Besides the coincidentally unavailable status page, what other pieces of misleading information did Cloudflare operators have to deal with? How can that be reduced?
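To make the timeout and failure questions above a little more concrete, here is a minimal sketch of the decision an ingress component has to make one way or another. Everything in it is hypothetical: the names `route`, `OnFailure`, `healthy`, `slow` and `crashing`, the threshold of 30, and the assumption that a higher score means "more likely human". It is not how Cloudflare builds this, only an illustration that each question has to be answered explicitly somewhere in the code.

```python
import concurrent.futures as cf
import time
from enum import Enum


class OnFailure(Enum):
    """Hypothetical policy for requests that could not be scored."""
    FAIL_OPEN = "pass the request on to the origin unscored"
    FAIL_CLOSED = "reject the request"


def route(request, scorer, executor, timeout_s, on_failure, threshold=30):
    """Return "origin" or "reject" for a single request.

    Assumes a higher score means "more likely human"; the threshold
    is an arbitrary illustrative value.
    """
    future = executor.submit(scorer, request)
    try:
        score = future.result(timeout=timeout_s)
    except cf.TimeoutError:
        # cancel() is best effort: a scorer that is already running keeps
        # running, and any score it produces later is simply never read.
        future.cancel()
        score = None
    except Exception:
        # The scorer itself failed, e.g. it could not load its inputs.
        score = None

    if score is None:
        return "origin" if on_failure is OnFailure.FAIL_OPEN else "reject"
    return "origin" if score >= threshold else "reject"


# Stand-in scorers for the three situations asked about above.
def healthy(request):
    return 90


def slow(request):
    time.sleep(0.3)
    return 90


def crashing(request):
    raise RuntimeError("malformed feature file")


if __name__ == "__main__":
    with cf.ThreadPoolExecutor(max_workers=4) as pool:
        for scorer in (healthy, slow, crashing):
            for policy in OnFailure:
                outcome = route({}, scorer, pool, 0.05, policy)
                print(f"{scorer.__name__:9} {policy.name:12} -> {outcome}")
```

Note that the late score from `slow` is silently dropped here, and nothing tells an operator how often the failure branch is taken. Both are design choices, and which choices Cloudflare actually made is not something the report answers.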

I don’t know. I wish technical organizations were more thorough in investigating accidents.