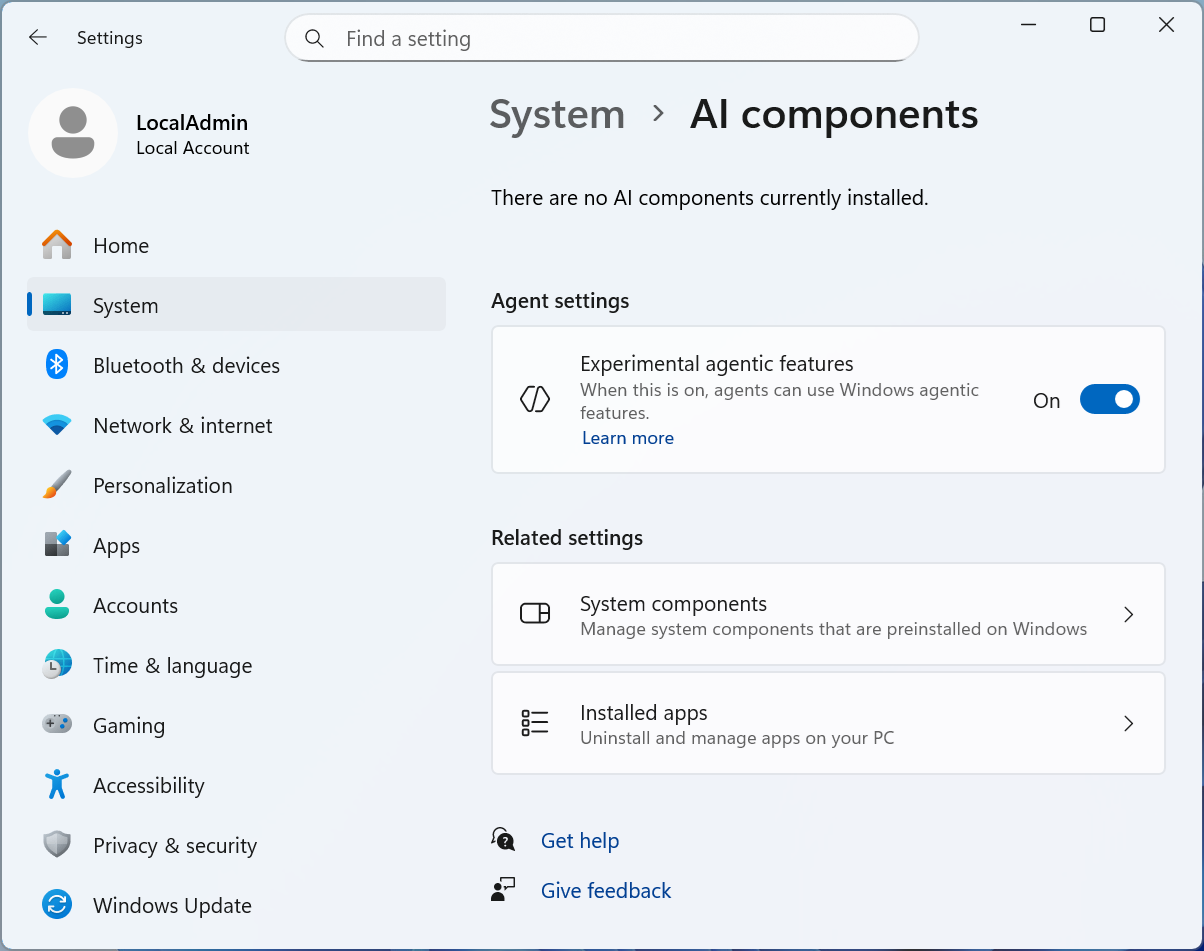

Microsoft has been adding AI features to Windows 11 for years, but things have recently entered a new phase, with generative and so-called “agentic” AI features working their way deeper into the operating system’s foundation. A new build of Windows 11 released yesterday to Windows Insider Program testers includes a new “Experimental Agentic Features” toggle in Settings to support a feature called Copilot Actions, and Microsoft has published a detailed support article explaining how those features will work.

If you’re not familiar, “agents” is a term that Microsoft has used repeatedly to describe its future ambitions for Windows 11. In simple language, these agents are meant to complete assigned tasks in the background, freeing the user to focus their attention elsewhere. Microsoft says it wants agents to be able to perform “everyday tasks like organizing files, scheduling meetings or sending emails” and that Copilot Actions will give you “an active digital assistant that can perform complex tasks for you to increase efficiency and productivity.”

But like other types of AI, these agents can be prone to error and confusion, and they will often proceed as if they know what they are doing even when they don’t. They also present, in Microsoft’s own words, “novel security risks,” mostly related to what might happen if an attacker is able to command one of these agents. As a result, Microsoft’s implementation walks a tightrope between giving these agents access to your files and isolating them from the rest of the system.

Potential risks and attempted solutions

For now, these “experimental agentic features” are optional, available only in early test builds of Windows 11, and turned off by default.

Credit: Microsoft

For example, AI agents running on PCs will be given their own user accounts separate from your personal account, ensuring that they don’t have permission to change everything on the system and giving them their own “desktop” to work on that won’t interfere with what you’re doing on your screen. Users are required to approve requests for their data, and “all actions by the agent are observable and separate from actions taken by the user.” Microsoft also says agents should be able to generate logs of their activities and “provide a means to monitor their activities,” including showing users a list of the actions the agent will take to complete a multi-step task.