The launch of Nvidia’s Vera Rubin platform for AI and HPC next year could mark significant changes in the AI hardware supply chain, as Nvidia plans to ship its partners fully assembled Level-10 (L10) VR200 compute trays with all compute hardware, cooling systems, and interfaces pre-installed, according to JPMorgan (via @Jukanlosreve). The move would leave major ODMs with far less design and integration work, making their lives easier, but would also reduce their margins in Nvidia’s favor. The information remains unofficial at this stage.

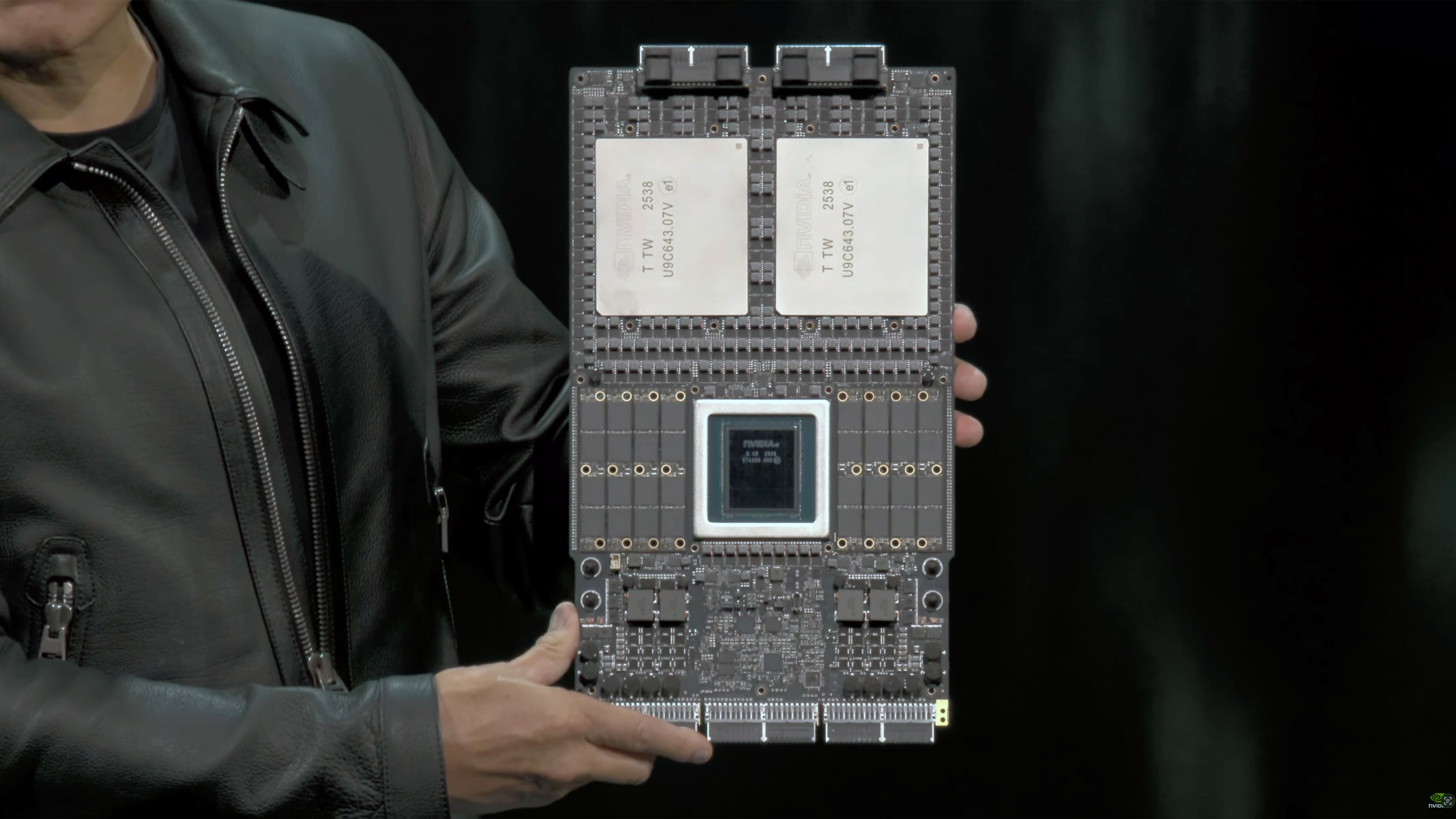

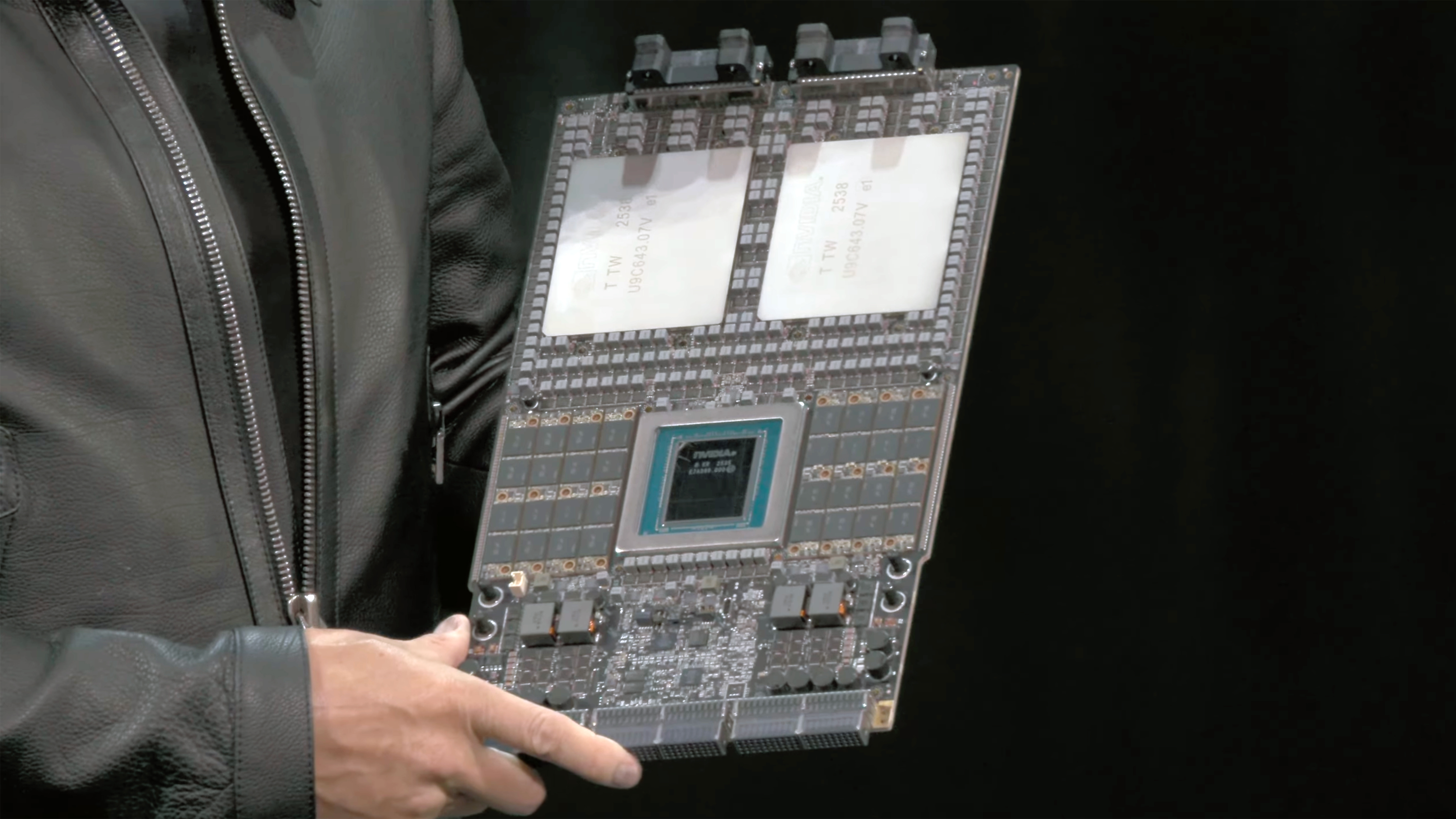

Starting with the VR200 platform, Nvidia is reportedly preparing to take over production of fully built L10 compute trays with Vera CPUs, Rubin GPUs, and a cooling system pre-installed, rather than allowing hyperscalers and ODM partners to build their own motherboards and cooling solutions. This wouldn’t be the first time the company has supplied partially integrated server sub-assemblies to its partners: it did so with its GB200 platform when it supplied the entire Bianca board with key components already installed. However, at the time, this could have been considered an L7 – L8 integration, while now the company is reportedly considering moving up to L10, with the entire tray assembly – accelerator, CPU, memory, NIC, power-delivery hardware, midplane interface and liquid-cooling cold plates – being sold as a pre-built, tested module.

The move promises to shorten the ramp for the VR200, as Nvidia’s partners won’t have to design everything in-house, and production costs could be lower thanks to the economies of scale ensured by a direct contract between Nvidia and its EMS providers (possibly Foxconn as the primary supplier, followed by Quanta and Wistron, but that’s speculation). For example, the Vera Rubin Superchip board recently demonstrated by Jensen Huang uses a very complex design, a very thick PCB, and only solid-state components. Designing such a board takes time and a lot of money, so it makes a lot of sense to use select EMS providers to build it.

JPMorgan reportedly cites the rise in per-GPU power consumption from 1.4 kW (Blackwell Ultra) to 1.8 kW (R200) and even 2.3 kW (allegedly a previously undisclosed TDP for an unannounced SKU; Nvidia declined Tom’s Hardware’s request for comment on the matter), along with the resulting increase in cooling requirements, as one of the motivations for supplying complete trays rather than separate components. However, we know from reported supply chain sources that various OEMs and ODMs, as well as hyperscalers like Microsoft, are experimenting with very advanced cooling systems, including immersion and embedded cooling, which underscores their own expertise in this area.
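To put the reported power figures in perspective, here is a minimal Python sketch of the relative increase over Blackwell Ultra. The wattages are the ones quoted above; the names `BLACKWELL_ULTRA_KW`, `RUBIN_TDP_KW`, and `percent_increase` are illustrative only, and the 2.3 kW figure remains unconfirmed.

```python
# Per-GPU power figures in kW, as reported in the article (not official specs).
BLACKWELL_ULTRA_KW = 1.4
RUBIN_TDP_KW = {
    "R200": 1.8,
    "unannounced SKU (alleged)": 2.3,  # unconfirmed figure
}


def percent_increase(old_kw: float, new_kw: float) -> float:
    """Return the relative increase from old_kw to new_kw, in percent."""
    return (new_kw - old_kw) / old_kw * 100.0


for name, kw in RUBIN_TDP_KW.items():
    delta = percent_increase(BLACKWELL_ULTRA_KW, kw)
    print(f"{name}: {kw} kW, +{delta:.1f}% vs Blackwell Ultra")
```

On these numbers, the R200 would draw roughly 29% more power per GPU than Blackwell Ultra, and the alleged 2.3 kW part roughly 64% more, which helps explain why cooling features so prominently in the reported rationale.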

However, Nvidia’s partners will shift from being system designers to being system integrators, installers, and support providers. They will keep the enterprise features, service contracts, firmware ecosystem work, and deployment logistics, but the ‘heart’ of the server – the compute engine – is now fixed, standardized, and manufactured by Nvidia rather than by OEMs or ODMs.

Beyond that, we can only wonder what will happen with Nvidia’s Kyber NVL576 rack-scale solution based on the Rubin Ultra platform, which is set to launch alongside the emergence of 800V data center architectures that enable megawatt-class racks and beyond. The open question is whether Nvidia will extend its share of the supply chain further, into rack-level integration.
