LeetArxiv is a successor to Papers with Code after the latter's shutdown.

Quick Summary

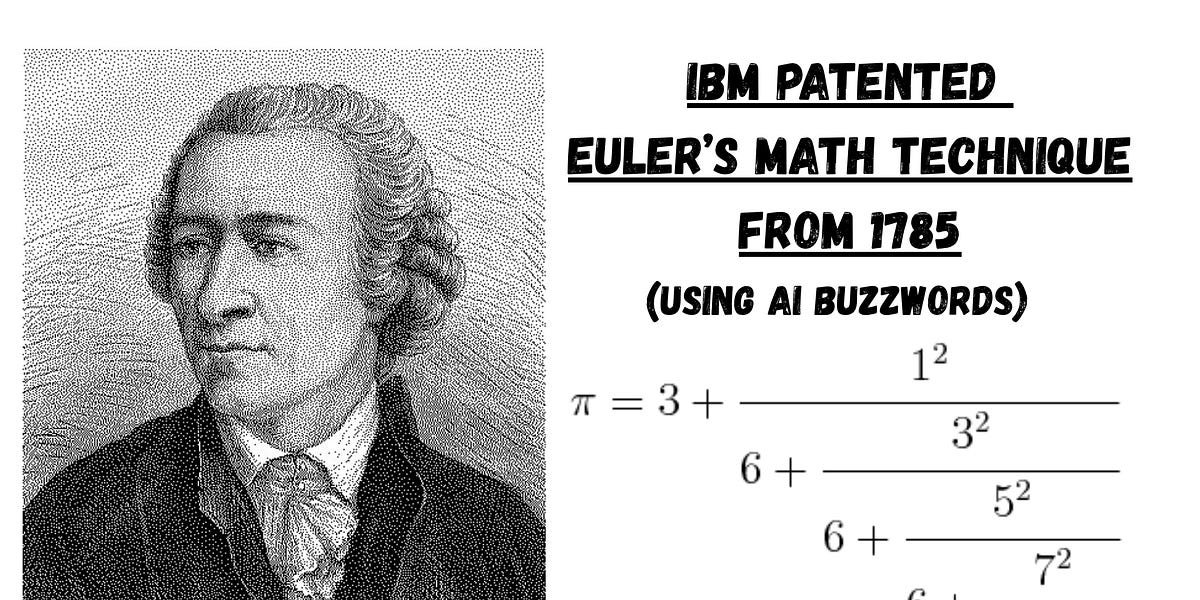

IBM holds a patent on the use of derivatives to find the convergents of a generalized continued fraction.

Here’s the odd thing: all they did was implement a number theory technique by Gauss, Euler and Ramanujan in PyTorch and call backward() on the computation graph.

Now IBM's patent trolls can profit from a math technique that has existed for more than 200 years.

As always, the code is available on Google Colab and GitHub.

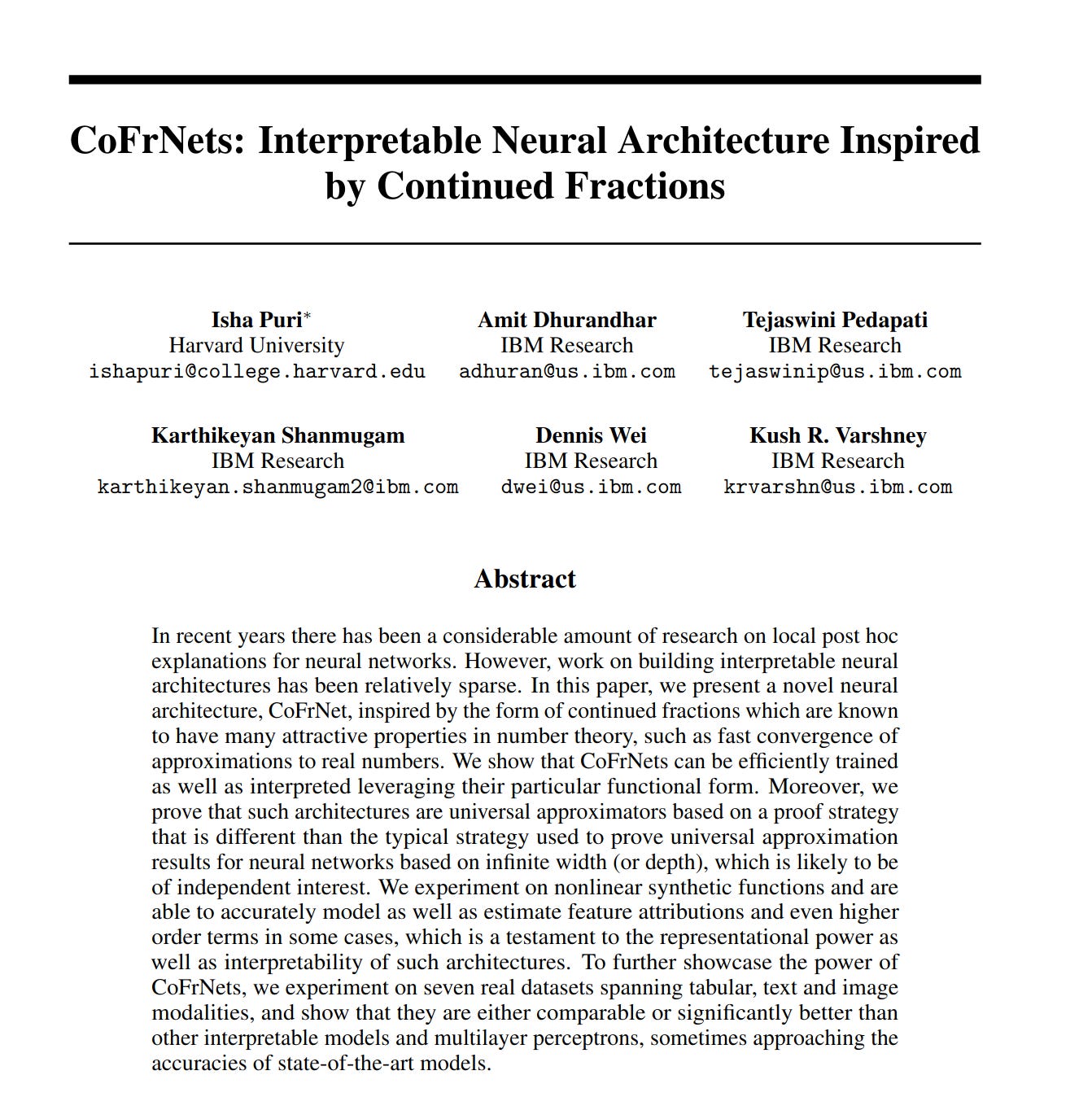

The 2021 paper CoFrNets: Interpretable Neural Architecture Inspired by Continued Fractions (Puri et al., 2021) investigates the use of continued fractions in neural network design.

The paper takes 13 pages to make one claim: continued fractions (like MLPs) are universal approximators.

The authors reinvent the wheel countless times:

-

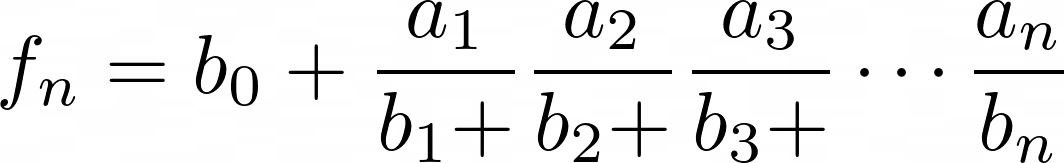

He converted continued fractions into ‘ladders’.

-

They call the fundamental bifurcation the ‘1/z nonlinearity’.

-

Ultimately, they take the well-defined concept of generalized continued fractions and call them CoFrNets.

To be honest, the paper is full of pretentious nonsense like this:

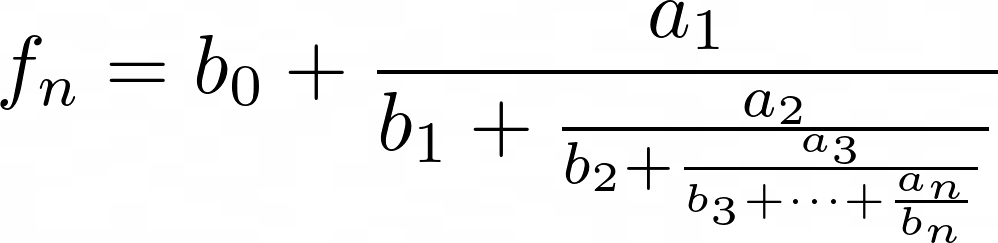

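Simple continued fractions are mathematical expressions of the form:

$$
\frac{p_n}{q_n} = a_0 + \cfrac{1}{a_1 + \cfrac{1}{a_2 + \cfrac{1}{\ddots + \cfrac{1}{a_n}}}}
$$

where $p_n / q_n$ is the nth convergent (Cook, 2022).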
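Convergents can be computed with the standard recurrence p_n = a_n p_{n-1} + p_{n-2}, q_n = a_n q_{n-1} + q_{n-2}. The sketch below is a minimal illustration of that recurrence, not code from the paper; the function name `convergents` and the choice of the first five partial quotients of pi are assumptions made for the example.

```python
from fractions import Fraction


def convergents(partial_quotients):
    """Yield the convergents p_n/q_n of [a_0; a_1, a_2, ...]."""
    p_prev, q_prev = 1, 0            # p_{-1}, q_{-1}
    p, q = partial_quotients[0], 1   # p_0, q_0
    yield Fraction(p, q)
    for a in partial_quotients[1:]:
        # Standard recurrence: p_n = a_n * p_{n-1} + p_{n-2}, same for q_n
        p, p_prev = a * p + p_prev, p
        q, q_prev = a * q + q_prev, q
        yield Fraction(p, q)


# First five partial quotients of pi (hypothetical input for illustration)
pi_terms = [3, 7, 15, 1, 292]
for c in convergents(pi_terms):
    print(c, float(c))
```

Each successive convergent is a better rational approximation of the target, which is why the familiar 22/7 and 355/113 show up in the output.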

Continued fractions have been used by mathematicians:

-

Estimated Pie (MJD, 2014),

-

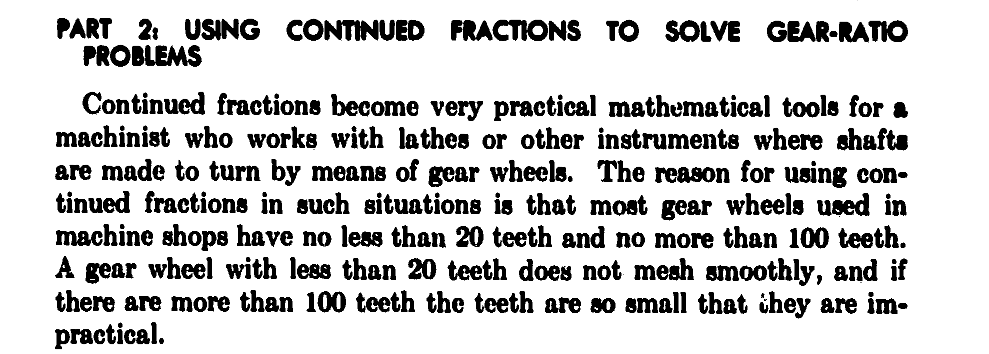

Design Gear System (Brocott, 1861)

-

Even Ramanujan’s mathematics tricks used continued fractions (Barrow, 2000).

Continued fractions are well studied and have been covered in previous LeetArxiv guides (Lehmer, 1931): the continued fraction factorization method and Stern–Brocot fractions as floating-point alternatives.

If your background is in AI, a continued fraction looks exactly like a linear layer, but the bias term is replaced by another linear layer.

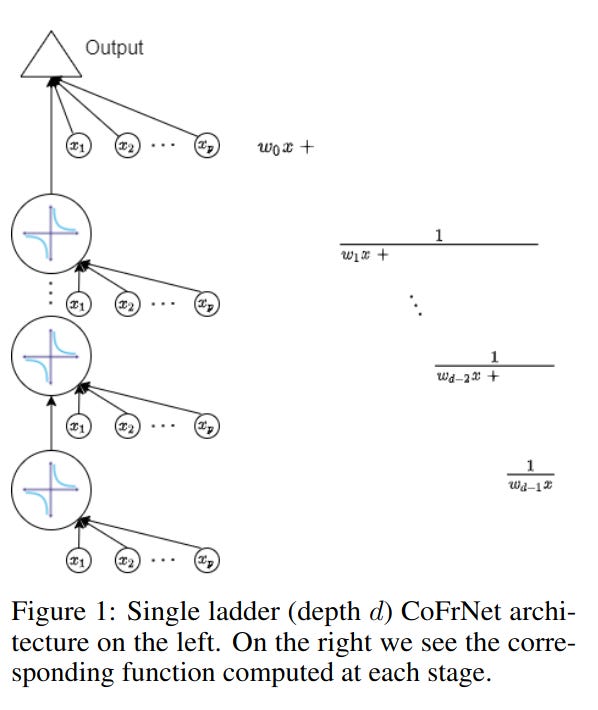

(Jones, 1980) defines a generalized continued fraction as an expression of the form:

More economically written as:

where a and b can be integers or polynomials.
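For concreteness, one common way to write this is sketched here. The convention is an assumption (partial denominators $a_n$, partial numerators $b_n$), and Jones's own symbols may differ:

$$
x = a_0 + \cfrac{b_1}{a_1 + \cfrac{b_2}{a_2 + \cfrac{b_3}{a_3 + \ddots}}}
\qquad\text{or, compactly,}\qquad
x = a_0 + \operatorname*{K}_{n=1}^{\infty} \frac{b_n}{a_n}
$$

A simple continued fraction is the special case in which every partial numerator $b_n$ equals 1.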

The authors simply implement a continued fraction library in PyTorch and call the backward() function on the resulting computation graph.

That is, they stack linear neural network layers and use the reciprocal (not ReLU) as the primary nonlinearity.

Then they replace the bias term of the current linear layer with another linear layer. This is a generalized continued fraction.
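Written out, a single ladder of depth $d$ on an input $x$ would compute something like the expression below. This is a sketch under the assumption that each layer is a bias-free dot product $w_k^\top x$ (the symbols $w_k$ and $d$ are introduced here for illustration):

$$
f(x) = w_0^\top x + \cfrac{1}{w_1^\top x + \cfrac{1}{w_2^\top x + \cfrac{1}{\ddots + \cfrac{1}{w_d^\top x}}}}
$$

Each $w_k^\top x$ plays the role of a partial quotient, so the learnable weights sit exactly where a number theorist would put the terms $a_k$.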

In PyTorch, their architecture looks like this:

import torch

import torch.nn as nn

import torch.optim as optim

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

import numpy as np

class CoFrNet(nn.Module):

def __init__(self, input_dim, num_ladders=10, depth=6, num_classes=3, epsilon=0.1):

super(CoFrNet, self).__init__()

self.depth = depth

self.epsilon = epsilon

self.num_classes = num_classes

#Linear layers for each step in each ladder

self.weights = nn.ParameterList([

nn.Parameter(torch.randn(num_ladders, input_dim)) for _ in range(depth + 1)

])

#Output weights for each class

self.output_weights = nn.Parameter(torch.randn(num_ladders, num_classes))

def safe_reciprocal(self, x):

return torch.sign(x) * 1.0 / torch.clamp(torch.abs(x), min=self.epsilon)

def forward(self, x):

batch_size = x.shape[0]

num_ladders = self.weights[0].shape[0]

# Compute continued fractions for all ladders

current = torch.einsum(’nd,bd->bn’, self.weights[self.depth], x)

# Build continued fractions from bottom to top

for k in range(self.depth - 1, -1, -1):

a_k = torch.einsum(’nd,bd->bn’, self.weights[k], x)

current = a_k + self.safe_reciprocal(current)

# Linear combination for each class

output = torch.einsum(’bn,nc->bc’, current, self.output_weights)

return output

def test_on_waveform():

# Load Waveform-like dataset

X, y = make_classification(

n_samples=5000, n_features=40, n_classes=3, n_informative=10,

random_state=42

)

# Split data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Standardize

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

# Convert to torch tensors

X_train = torch.FloatTensor(X_train)

X_test = torch.FloatTensor(X_test)

y_train = torch.LongTensor(y_train)

y_test = torch.LongTensor(y_test)

# Model

input_dim = 40

num_classes = 3

model = CoFrNet(input_dim, num_ladders=20, depth=6, num_classes=num_classes)

# Training

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)

epochs = 100

batch_size = 64

for epoch in range(epochs):

model.train()

permutation = torch.randperm(X_train.size()[0])

for i in range(0, X_train.size()[0], batch_size):

indices = permutation[i:i+batch_size]

batch_x, batch_y = X_train[indices], y_train[indices]

optimizer.zero_grad()

outputs = model(batch_x)

loss = criterion(outputs, batch_y)

loss.backward()

optimizer.step()

# Validation

if epoch % 10 == 0:

model.eval()

with torch.no_grad():

train_outputs = model(X_train)

train_preds = torch.argmax(train_outputs, dim=1)

train_acc = (train_preds == y_train).float().mean()

test_outputs = model(X_test)

test_preds = torch.argmax(test_outputs, dim=1)

test_acc = (test_preds == y_test).float().mean()

print(f’Epoch {epoch:3d} | Loss: {loss.item():.4f} | Train Acc: {train_acc:.4f} | Test Acc: {test_acc:.4f}’)

print(f”\nFinal Test Accuracy: {test_acc:.4f}”)

return test_acc.item()

if __name__ == “__main__”:

accuracy = test_on_waveform()

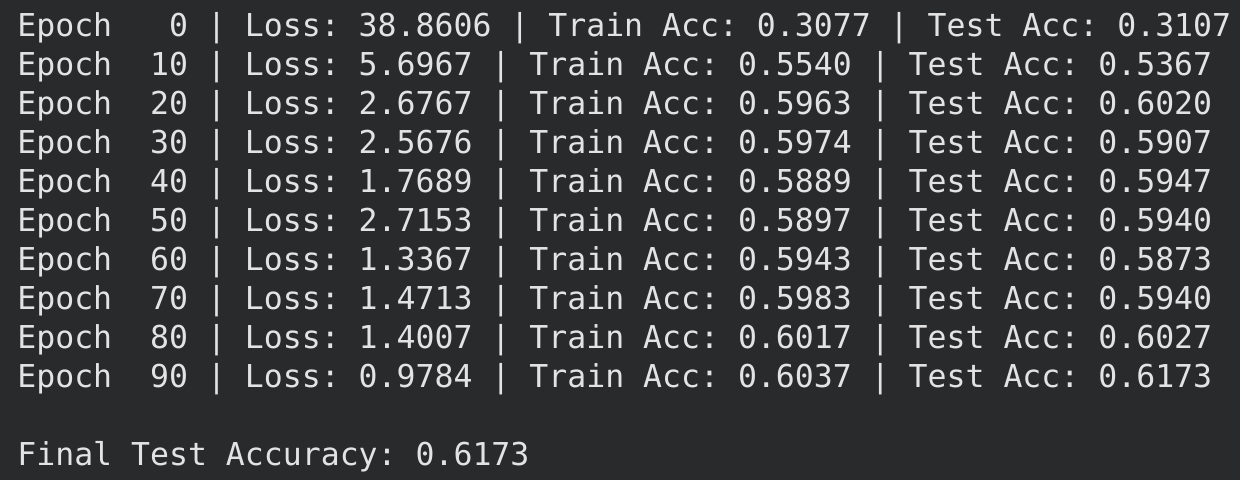

print(f”CoFrNet achieved {accuracy:.1%} accuracy on Waveform dataset”)When tested on a non-linear waveform dataset, we see these results:

Accuracy of 61%.

Nowhere near SOTA and that’s to be expected.

Continued fractions are well studied, and any number theorist will tell you that the gradients vanish, i.e. differentiating a power series has its limits.

Even Euler's original work (Euler, 1785) points this out: a continued fraction is an infinite series, so optimization by differentiation has its limits.

PyTorch's autodiff engine replaces the differentiated series with a differentiable computational graph.

The authors simply implemented a continued fraction library in PyTorch and, unsurprisingly, found that it could be optimized with gradients.

As the reviewers noted, the idea seems novel but the technique is nowhere near SOTA, and the truth is that continued fractions have existed for a long time. The authors simply replace the linear layers of a neural network with generalized continued fractions.

Here's the strange outcome: the authors filed for a patent in 2022 on their 'discussion-oriented' paper.

Their patent was published and its status is marked as pending.
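The shrinking gradients are easy to observe directly. The sketch below is an illustration rather than the authors' code: it builds a plain continued fraction in PyTorch, calls `backward()`, and prints the gradient magnitude for each partial quotient. The helper name `continued_fraction` and the choice of the golden ratio's expansion [1; 1, 1, ...] truncated at depth 10 are assumptions made for the example.

```python
import torch


def continued_fraction(a):
    """Evaluate a_0 + 1/(a_1 + 1/(... + 1/a_n)) from the bottom up."""
    current = a[-1]
    for k in range(len(a) - 2, -1, -1):
        current = a[k] + 1.0 / current
    return current


# Partial quotients of the golden ratio, truncated at depth 10 (illustrative input)
a = torch.ones(11, dtype=torch.float64, requires_grad=True)
value = continued_fraction(a)
value.backward()  # autograd differentiates through every nested reciprocal

grad_magnitudes = a.grad.abs().tolist()
print(f"value = {value.item():.6f}")
for k, g in enumerate(grad_magnitudes):
    print(f"|d value / d a_{k}| = {g:.2e}")
```

In this setup the deeper a term sits in the fraction, the less it moves the output, so its gradient is smaller. Stacking more depth therefore buys progressively less trainable signal.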

Here's the thing:

- Continued fractions have existed longer than IBM.

- Differentiation of continued fractions is well known.

- The authors did not deviate at all from Euler's 1785 work.
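The derivative in question is a one-line chain-rule computation. As a sketch for a finite simple continued fraction, write $t_k$ for the tail starting at term $k$ (the symbol $t_k$ is introduced here for illustration):

$$
t_n = a_n, \qquad t_k = a_k + \frac{1}{t_{k+1}}, \qquad f = t_0
$$

Since $\partial t_k / \partial a_k = 1$ and $\partial t_k / \partial t_{k+1} = -1/t_{k+1}^2$, the chain rule gives:

$$
\frac{\partial f}{\partial a_k} = (-1)^k \prod_{j=1}^{k} \frac{1}{t_j^{2}}
$$

Whenever the tails satisfy $|t_j| > 1$, each extra level multiplies the gradient by a factor smaller than one, which is the vanishing behaviour described earlier.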

Now, if IBM feels like litigating, they can sue Sage, Mathematica, Wolfram or even you for coding a 249-year-old math technique.

- Mechanical engineers, roboticists and industrialists

Taken from page 30 of Introduction to Continued Fractions (Moore, 1964)

- Pure mathematicians and math educators

I have a PhD in mathematics, and I learned about the patent while investigating continued fractions and their relation to elliptic curves (van der Poorten, 2004).

I was trying to model an elliptic divisibility sequence in Python (using PyTorch) and that's how I found out about IBM's patent.

Summary of the 2004 paper Elliptic Curves and Continued Fractions (van der Poorten, 2004)

- Numerical analysts and computational scientists / Sage and Maple programmers

Numerical analysis is the use of computer algorithms to find approximate solutions to math and physics problems (Shi, 2024).

Continued fractions are used in error analysis when evaluating integrals, and entire books describe these algorithms (Cuyt et al., 2008).
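As a small taste of that numerical use, the sketch below evaluates tan(x) with Lambert's classical continued fraction, tan x = x / (1 - x²/(3 - x²/(5 - ...))), truncated at a fixed depth. It is an illustration only, not taken from the cited books; the function name `tan_cf` and the default depth of 10 are assumptions.

```python
import math


def tan_cf(x, depth=10):
    """Approximate tan(x) with Lambert's continued fraction, truncated at `depth`."""
    x2 = x * x
    t = 2 * depth + 1  # deepest partial denominator
    # Work from the bottom up through the partial denominators ..., 5, 3, 1
    for k in range(depth - 1, -1, -1):
        t = (2 * k + 1) - x2 / t
    return x / t


for x in (0.1, 0.5, 1.0):
    print(x, tan_cf(x), math.tan(x))
```

The partial numerators here are polynomials in x, which is exactly the generalized setting: anyone writing a routine like this is already coding a generalized continued fraction.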