This blog post reflects the collaborative effort of Qodo’s research team to design, build, and validate the benchmark and the analysis presented here.

This blog post introduces Qodo’s Code Review Benchmark 1.0, a rigorous methodology for objectively measuring and validating the performance of AI-powered code review systems, including Qodo Git code review. It addresses key limitations of existing benchmarks, which rely primarily on backtracking from fix commits to buggy commits and therefore focus on bug detection while neglecting code quality and best practice enforcement. Previous methods also often use small, isolated commits instead of simulating a full pull request (PR) review on real, merged PRs, and they are constrained to a smaller number of PRs and issues.

Our research sets a new standard by intentionally injecting defects into real, merged pull requests obtained from active, production-grade open-source repositories.

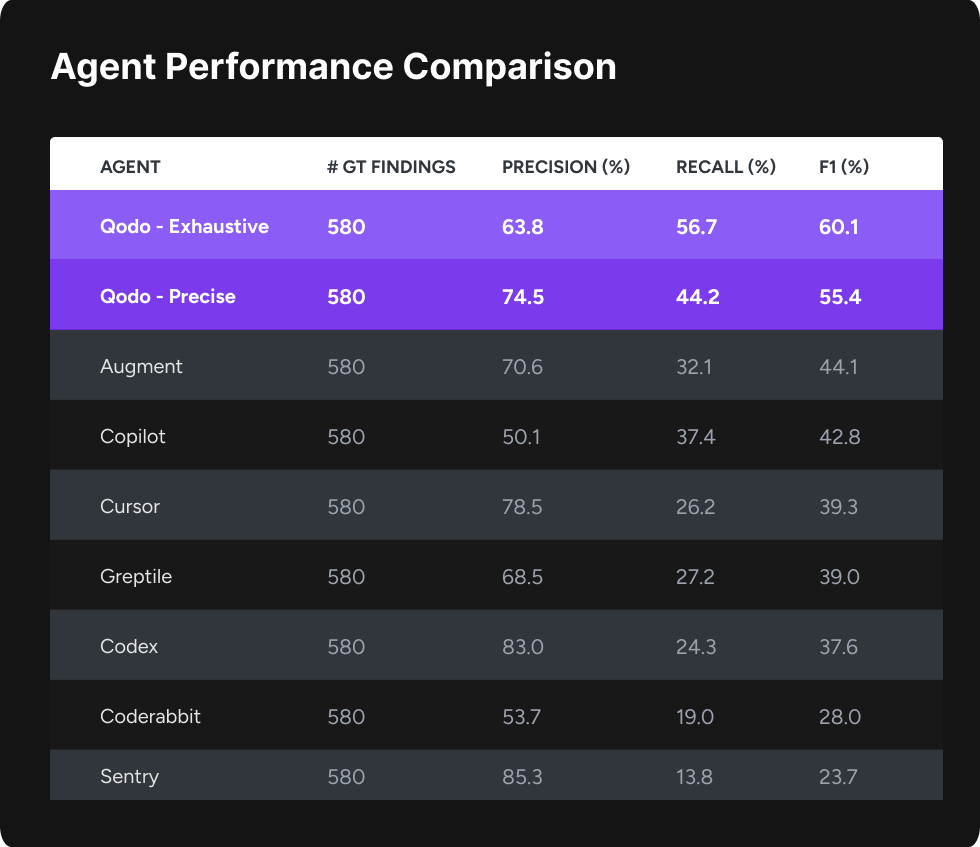

This approach is designed to evaluate both code correctness (bug detection) and code quality (best practice enforcement) simultaneously, in a realistic code review context and at a fairly large scale: 100 PRs containing a total of 580 issues. In a comparative assessment of Qodo against 7 other leading AI code review platforms, Qodo demonstrated superior performance, achieving an F1 score of 60.1% in identifying this diverse set of defects. The benchmark, including the reviews generated by each evaluated tool, is publicly available in our benchmarks GitHub organization. The following sections detail our benchmark creation methodology, experimental setup, comparative results, and key findings.

Related Work

While there are many benchmarks for AI code generation and bug fixing, SWE‑Bench being the most famous, the code review domain has historically lacked robust evaluation datasets. Greptile took an important first step by creating a benchmark based on backtracking from fix commits, measuring whether AI tools can catch bugs that were historically fixed. Augment also used this approach to evaluate several AI code review tools. These methods are effective at capturing real bugs, but they are limited in scale, often contain only one bug per reviewed commit, and do not capture the size, complexity, or context of full pull requests.

Qodo takes a different approach: it starts from actual, merged PRs and covers a range of issues, including both functional bugs and best practice violations. This enables larger, more realistic benchmarks for testing AI tools in system-level code review scenarios, capturing not only correctness but also code quality and compliance. By combining large-scale PR coverage with dual-focus evaluation, Qodo provides a more comprehensive and practical benchmark than previous work, reflecting the full spectrum of challenges encountered in real-world code review.

Methodology

The Qodo Code Review Benchmark is constructed through a multi-step process that injects complex, non-obvious defects into real-world, merged pull requests (PRs) from active open-source repositories. This controlled injection approach is designed to evaluate both main objectives of a successful code review simultaneously: code correctness (bug detection) and code quality (best practice enforcement). This integrated design allows us to create the first comprehensive benchmark that measures the full spectrum of AI code review performance, moving beyond traditional evaluations that focus mostly on isolated bug types.

Importantly, this injection-based approach is inherently scalable and repository-agnostic. Because the process runs on actual merged PRs and extracts repository-specific best practices before injection, benchmark generation can be applied to large numbers of PRs and to any codebase, open-source or private. This makes the framework a general mechanism for generating high-quality code review evaluation data at scale.

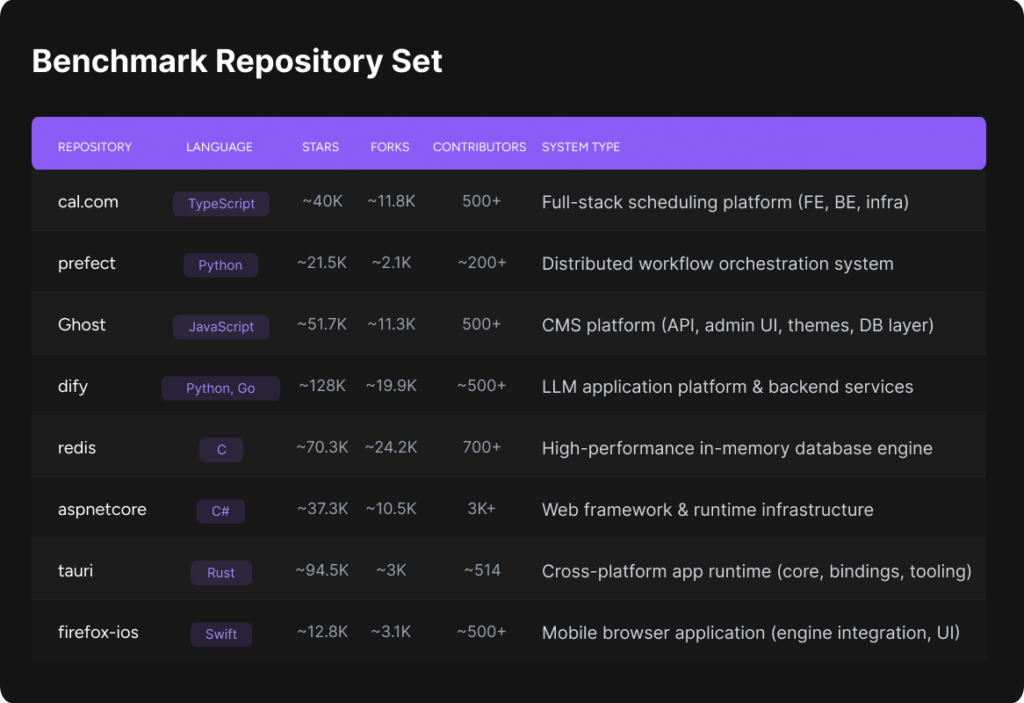

- Repository Selection: The benchmark repositories were strategically chosen to create a realistic, system-level code review environment. Selection focused on two criteria: 1) System-level code: projects are production-grade, multi-component systems (e.g., server logic, frontend, database, API) that reflect the complexity of enterprise pull requests involving cross-module changes and architectural concerns. 2) Language diversity: the set intentionally spans diverse technology stacks and ecosystems, including TypeScript, Python, JavaScript, C, C#, Rust, and Swift.

- Repository Analysis and Rule Extraction: We first analyze each target repository to collect and formalize a set of best practice rules. These rules are crafted to align with the repository’s current coding standards, style guides, and contribution guidelines. They are extracted from the documentation and a full codebase analysis using an agent-based parsing process, followed by human verification.

- PR Collection and Filtering: PRs from the target open-source repositories are collected via the GitHub API and subjected to strict technical filters (e.g., 3+ files, 50–15,000 lines changed, recently merged). To ensure the highest-quality ‘clean’ code for injection, we select only merged PRs that were not later reverted and did not require an immediate follow-up fix commit. After identifying all qualifying candidates, we verify that the selected PRs conform to the extracted best practice rules before any compliance violations are injected.

- Violation Injection (Compliance Phase): Compliance violations are injected into each filtered, compliant PR using an LLM, which modifies the diff to violate the extracted rules while preserving the original functionality.

- Bug Injection (Additional Bug Step): An additional 1–3 functional or logical bugs are then injected. These span a variety of categories, including logic errors, edge-case failures, race conditions, resource leaks, and improper error handling, producing a final benchmark instance with multiple simultaneous faults for the AI tool to detect.

- Ground Truth Verification: After all injection steps, we double-check every modified PR. Any additional, naturally occurring subtle issues or best practice violations found during this final check are manually added to the ground truth list, ensuring the accuracy of the benchmark and broad coverage of realistic defects.
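The technical filters in the PR collection step can be expressed as a simple predicate over PR metadata. The sketch below is illustrative only: the `PullRequest` fields and the `is_candidate` helper are hypothetical, the 365-day "recently merged" window is an assumption (the text gives no value), and detecting reverts or follow-up fixes is assumed to happen upstream. The rule-conformance check that follows filtering is not shown.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class PullRequest:
    """Simplified PR metadata (hypothetical schema, e.g. built from GitHub API data)."""
    number: int
    files_changed: int
    lines_changed: int            # additions + deletions
    merged_at: Optional[datetime]
    was_reverted: bool            # a later commit reverted this PR
    has_followup_fix: bool        # an immediate follow-up fix commit was needed


# Thresholds taken from the text; RECENT_WINDOW is an assumed value.
MIN_FILES = 3
MIN_LINES, MAX_LINES = 50, 15_000
RECENT_WINDOW = timedelta(days=365)


def is_candidate(pr: PullRequest, now: datetime) -> bool:
    """Return True if the PR passes the technical and 'clean code' filters."""
    if pr.merged_at is None:
        return False                      # only merged PRs qualify
    if pr.files_changed < MIN_FILES:
        return False                      # needs 3+ files
    if not MIN_LINES <= pr.lines_changed <= MAX_LINES:
        return False                      # 50-15,000 lines changed
    if now - pr.merged_at > RECENT_WINDOW:
        return False                      # must be recently merged (assumed window)
    # Exclude PRs that were reverted or needed an immediate follow-up fix.
    return not (pr.was_reverted or pr.has_followup_fix)


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
prs = [
    PullRequest(1, 5, 400, now - timedelta(days=30), False, False),
    PullRequest(2, 2, 400, now - timedelta(days=30), False, False),  # too few files
    PullRequest(3, 8, 900, now - timedelta(days=10), True, False),   # later reverted
]
print([pr.number for pr in prs if is_candidate(pr, now)])  # [1]
```

Keeping the filter as a pure function of metadata makes it easy to apply the same criteria to any repository, public or private, which matches the repository-agnostic goal described above.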

Evaluation

Setup

The evaluation setup was designed to closely reflect the production development environment:

- Repository Environment: All benchmark pull requests (PRs) were opened on a clean, forked repository. Before opening the PRs, we committed the repository-specific best practice rules to the root directory in an AGENTS.md file, making them accessible to all participating tools for compliance checking.

- Configuration: Each of the 7 evaluated code review tools was configured with its default settings to automatically trigger a review upon PR submission.

- Execution and Verification: All benchmark PRs were opened systematically. We monitored all tools to confirm they were running without errors, restarting reviews when necessary to ensure complete coverage.

- Data Collection: Inline comments generated by each tool were carefully collected.

- Performance Measurement: The collected findings (the inline comments generated by each tool) were rigorously compared with our validated ground truth using an LLM-as-a-Judge system. The main metrics are defined as follows:

- Hit definition: An inline comment generated by the tool is classified as a “hit” (true positive) if two criteria are met:

- The comment text accurately describes the underlying problem.

- The localization (file and line number references) is correct and points to the source of the problem.

- False Positive (FP): A comment is classified as a false positive if it does not match any issue in the ground truth list.

- False Negative (FN): A ground truth issue is classified as a false negative if no tool-generated comment meets the criteria of a “hit” for that issue.

- Recall: The fraction of ground truth issues detected by the tool.

- Precision: The fraction of tool-generated comments that correctly match a ground truth issue.

- F1 Score: The harmonic mean of precision and recall, i.e. F1 = 2 × precision × recall / (precision + recall).

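The hit, false positive, false negative, and metric definitions above can be sketched in code. This is a minimal illustration under stated assumptions: the `Issue` and `Comment` schemas are hypothetical, localization is simplified to an exact file-and-line match, and the LLM-as-a-Judge is replaced by a toy `judge` callback (a substring check) so the example runs without a model. Precision is computed per comment and recall per ground truth issue, following the definitions in the text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Issue:
    """A ground truth issue (hypothetical schema)."""
    issue_id: str
    file: str
    line: int
    description: str


@dataclass(frozen=True)
class Comment:
    """An inline review comment produced by a tool (hypothetical schema)."""
    file: str
    line: int
    text: str


# Stand-in for the LLM-as-a-Judge call: returns True if the comment text
# accurately describes the issue. The real judge is an LLM, not this callback.
Judge = Callable[[Comment, Issue], bool]


def is_hit(comment: Comment, issue: Issue, judge: Judge) -> bool:
    # Localization criterion (exact file and line match is a simplification).
    located = comment.file == issue.file and comment.line == issue.line
    # Description criterion, as decided by the judge.
    return located and judge(comment, issue)


def score(comments: List[Comment], issues: List[Issue], judge: Judge) -> Dict[str, float]:
    detected: Set[str] = set()   # ground truth issues hit by at least one comment
    correct_comments = 0         # comments that hit at least one issue
    for comment in comments:
        hit_ids = {i.issue_id for i in issues if is_hit(comment, i, judge)}
        if hit_ids:
            correct_comments += 1
            detected |= hit_ids
        # A comment with no hit is a false positive.
    # Issues never added to `detected` are false negatives.
    precision = correct_comments / len(comments) if comments else 0.0
    recall = len(detected) / len(issues) if issues else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Toy data and a toy judge (substring match) purely for illustration.
issues = [
    Issue("A", "app.py", 10, "missing null check"),
    Issue("B", "db.py", 42, "connection leak"),
    Issue("C", "api.ts", 7, "race condition"),
]
comments = [
    Comment("app.py", 10, "Possible crash: missing null check on user"),
    Comment("db.py", 42, "Connection leak: cursor is never closed"),
    Comment("api.ts", 99, "Nit: prefer const here"),
]
toy_judge: Judge = lambda c, i: i.description in c.text.lower()
print({k: round(v, 3) for k, v in score(comments, issues, toy_judge).items()})
# {'precision': 0.667, 'recall': 0.667, 'f1': 0.667}
```

Here the third comment points at the wrong line, so it counts as a false positive, and issue C is never hit, so it counts as a false negative.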

Results

The results reveal a clear and consistent pattern across all tools. While many tools achieve very high precision, this comes at the cost of extremely low recall, meaning they identify only a small fraction of the actual issues present in a PR. In practice, these tools are conservative: they flag only the most obvious problems to avoid false positives, but miss a large portion of subtle, system-level, and best-practice violations. This behavior increases precision while severely limiting actual review coverage.

In contrast, Qodo achieves the highest recall by a significant margin while maintaining competitive precision, resulting in the best overall F1 score. This indicates that Qodo detects a much broader portion of the ground truth issues without flooding the user with noise. Importantly, precision can be tuned to user preference through post-processing (e.g., stricter filtering of findings), whereas recall is fundamentally constrained by the system’s ability to deeply understand the codebase, cross-file dependencies, architectural context, and repository-specific conventions.

For Qodo, we report results for two operating configurations: Qodo Precise, which reports only issues that clearly require developer action, and Qodo Exhaustive, which is optimized for maximum coverage and recall. Notably, both configurations achieve higher F1 scores than all competing tools in this evaluation.
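To illustrate why post-processing can tune precision but not recall, here is a toy sketch of a confidence-threshold filter applied to a tool's findings. The `Finding` type, its confidence scores, and the threshold values are all hypothetical, and this is not a description of how Qodo Precise or Qodo Exhaustive is implemented.

```python
from typing import List, NamedTuple, Tuple


class Finding(NamedTuple):
    confidence: float  # hypothetical per-finding score assigned by the reviewer
    is_hit: bool       # whether the finding matched a ground truth issue


def precision_recall(findings: List[Finding], total_issues: int,
                     threshold: float) -> Tuple[float, float]:
    """Keep findings at or above `threshold`, then score what remains.

    Simplification: each hit is assumed to match a distinct ground truth issue.
    """
    kept = [f for f in findings if f.confidence >= threshold]
    hits = sum(f.is_hit for f in kept)
    precision = hits / len(kept) if kept else 0.0
    recall = hits / total_issues if total_issues else 0.0
    return precision, recall


# Toy run: 10 ground truth issues, 6 findings, 4 of them hits.
findings = [
    Finding(0.9, True), Finding(0.8, True), Finding(0.7, False),
    Finding(0.6, True), Finding(0.4, False), Finding(0.3, True),
]
for t in (0.0, 0.5, 0.85):
    p, r = precision_recall(findings, total_issues=10, threshold=t)
    print(f"threshold={t}: precision={p:.2f}, recall={r:.2f}")
# threshold=0.0: precision=0.67, recall=0.40
# threshold=0.5: precision=0.75, recall=0.30
# threshold=0.85: precision=1.00, recall=0.10
```

Raising the threshold can only remove findings, so recall never increases, and the six issues the reviewer never surfaced stay missed at every threshold. Closing that gap requires a reviewer that finds more real issues in the first place.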

Appendix

Selected Repositories

The selected repositories cover a broad and representative distribution of programming languages and system domains, including full-stack web applications, distributed systems, databases, developer platforms, runtime infrastructure, and mobile applications. Their complexity ranges from single-language projects (Redis in C, Tauri in Rust) to polyglot systems (Dify with Python/Go, AspNetCore with C#/JavaScript), reflecting how code is written across different paradigms. This diversity makes them suitable candidates for benchmarking code review on the kinds of real-world codebases encountered in modern software development.
