March 2023

In the previous article Fascination with AWK we discussed why AWK is great for prototyping and is often the best alternative to Shell and Python. In this article I want to show you some interesting technical facts I learned about AWK.

gc deficiency

AWK was designed to not require a GC (garbage collector) for its implementation. Well, just like sh/bash. (I learned this remarkable fact from the Oilshell blog – a rather interesting technical blog, where the author describes his progress in creating a “better bash”).

The most important consequence is that returning an array from a function is prohibited, you can only return a scalar value.

function f()

However, you can pass an array to a function and fill it in there

BEGIN

function fill(arr, i) tryParse1("123456789", res) && (tryParseDigits(res)

The thing is, in the constraints of GC all heap allocations must be deterministic. That is, an array declared locally in a function must be destroyed at the same time as the function returns. That’s why it is not allowed to exit the declaration scope of a function (via return).

The absence of GC allows language implementations to be kept very simple, fast, and portable. Moreover, with predictable memory consumption. For me, this qualifies AWK as a full embeddable language, however, for some reason this place is occupied by (GC-equipped) Lua.

local variables

All variables are global by default. However, if you add a variable to the function parameter (e.g. i above) it becomes local. JavaScript works similarly, although there are more suitable var,let,const Keyword. In practice, it is customary to separate “real” function parameters from “local” parameters with additional spaces for clarity.

Although Brian Kernighan (the K in AWK) regrets this design, in practice it works fine.

The notation for function local is horrible (all my fault too, which makes it worse).

So it appears, the use of local variables is also a mechanism for automatic release of resources. Small example:

function NUMBER( res) 1)) &&

(tryParse1(".", res) ? tryParseDigits(res) : 1) &&

(tryParse1("eE", res) ? (tryParse1("-+",res)

NUMBER The function parses the number. res is a temporary array that will be automatically deleted when the function exits.

autovivification

An associative array is declared by the very fact of using the associated variables. arr As an array.

Similarly, a variable that is treated as a number (i++) will be explicitly declared as a numeric type, and so on.

To Perl experts, this feature may be known as autovivification. In general, AWK is clearly a prototype of Perl. You could even say that Perl is a kind of AWK overgrowth on steroids… however, we digress.

This, obviously, is done to be able to write the most compact code in one-liners, for which many of us are accustomed to using AWK.

About AWK syntax/grammar

I’d like to share some of the findings I encountered while implementing AWK’s parser for the Intellij-awk project.

$ is a unary operator

If you’ve used AWK, you’ve probably used $0, $1, $2Etcetera. some also used $NF,

But do you know that $ Is an operator that can be applied to an expression?

So it is absolutely justified to write this

{ second=2; print $second }

Or

Or

with the same result

Furthermore, it is also interesting to note $ is the only operator that is allowed to appear on the left side of the assignment, i.e. you can write

Or

{ $length("xx")="hello" }

(similar to)

Quiz. What will be the output of

echo "2 3 4 hello" | awk '{ print $$$$1 }'

And why? Try to answer without running away. try adding more $Explain behavior,

function calling f() does not give first place ( ,

…but only for user-defined functions:

awk 'BEGIN { fff () } function fff(){ }' # syntax error

awk 'BEGIN { fff() } function fff(){ }' # OK

You may have space for built-in functions:

awk 'BEGIN { print substr ("abc",1,2) }' # OK, outputs ab

Why such a strange inconsistency? This is due to AWK’s decision to use the empty operator for concatenating strings.

BEGIN { a = "hello"; b = "world"; c = a b; print c } # helloworld

This means that AWK tries to parse fff (123) as a combination of variables fff and string 123,

obviously fff () It’s just a syntax error, same as fff (1,2),

As far as built-in functions are concerned, AWK already knows it’s not a variable name, so it can disambiguate.

Built-in functions are parsed as part of the syntax

If you take a look at the AWK specification in the grammar section in POSIX (yes, the AWK grammar is a part of the POSIX standard!), you’ll see that AWK functions are part of it. To be precise, they are analyzed lexer step, then they enter parser Steps to prepare to use the token.

The implication here is that you are not allowed to name your own function with the name of any built-in function. This would be a syntax error!

BEGIN { length = 1 } # syntax error

Compare with Python:

Why is it like this? For flexibility. Remember, the main goal of AWK was to be an extremely concise but productive language that was suitable for one-liners. so:

- it is allowed to leave

()For built-in functions, when no arguments are passed, such asecho "hello" | awk '{ print length }'– similar toecho "hello" | awk '{ print(length()) }' - The same function can be used with different numbers of arguments, like

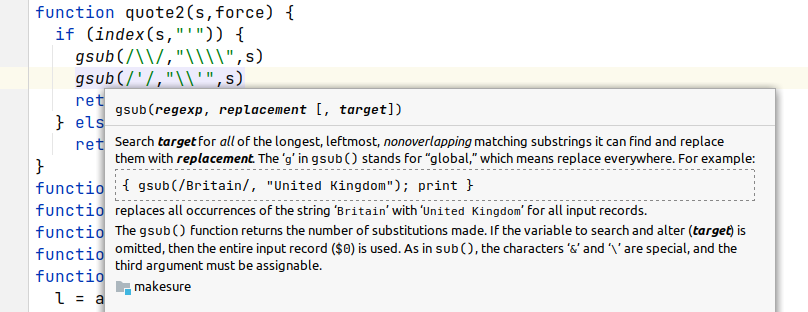

sub(/regex/, "replacement", target)Andsub(/regex/, "replacement")– omittedtargetis implied as$0

All these nuances require considerable ad-hoc analysis of the underlying functions. That is why they are part of grammar. If we take getline Keyword, it is not even a function, but a very versatile syntax construct.

ERE vs DIV lexing ambiguity

There are some inherent ambiguities in the grammar of the AWK ad hoc syntax optimized for concise code.

The problem is in the lexing ambiguity of the token ERE (Extended Regular Expression) /regex/) vs div (/Naturally, the lexer gives priority to the longest matching word. This causes problems in parsing any code.

Because it can parse like this

instead of right

These types of problems are well known, and typically require lexer hacks to implement:

The solution typically involves feeding information from the semantic symbol table back into the lexer. That is, rather than acting as a pure one-way pipeline from the lexer to the parser, there is a backchannel from the semantic analysis to the lexer. This mix of parsing and semantic analysis is generally considered inefficient, which is why it is called a “hack”.

In the original AWK (sometimes called One True AWK), it is the job of the parser to identify regular expressions, which explicitly sets the lexer into “regex mode” when it detects that it should expect to read a regex:

reg_expr:

'/' {startreg();} REGEXPR '/' { $$ = $3; }

;

,startreg() is a function defined in lex. c) the reg_expr The matching rule is used only in contexts where a division operator would be invalid.

However, in Intellij-awk I managed to make it explicit at the lexer level, but this required creating a (somewhat sophisticated) lexer with many states (note the use of state) DIV_POSSIBLE,

You can check out some other (gawk-related) nuances I found in parser_quirks.md.

Overall, I noticed that there are a lot of Old Programming languages have very ad-hoc syntax, and so does parsing.

I think, partly, because they wanted the programming language to be very flexible (PL/1, Ada, C++, AWK, Perl, Shell).

Partly, because some languages tried to be as close to human language as possible (SQL, or even COBOL – almost every language feature in them is a different syntax construct).

Or perhaps because the parsing theory was not that strong at the time. So it was common to write an ad-hoc parser rather than use something like Lex + YACC.

Nowadays, programming languages have much more regular syntax. The most prominent example in this regard can be Go.

If you notice any typos or have any other feedback, please email me at xonixx@gmail.com