A study published in the journal Patterns took two AI models, the image generator Stable Diffusion XL and the vision-language model LLaVA, and put them to the test by playing a game of visual telephone. The game went like this: The Stable Diffusion XL model was given a short prompt and asked to create an image – for example, “When I was particularly sitting alone, surrounded by nature, I found an old book with exactly eight pages that told a story in a forgotten language that was waiting to be read and understood.” That image was presented to the LLaVA model, which was asked to describe it. That description was then fed back to Stable Diffusion, which was asked to create a new image based on it. This continued for 100 rounds.
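For readers who think in code, the loop described above can be sketched roughly as follows. This is a minimal illustration, not the researchers' implementation: `visual_telephone`, `toy_generate`, and `toy_describe` are hypothetical names, and the toy stand-ins only pass text around. In the study, the two roles were played by Stable Diffusion XL and LLaVA, neither of which is called here.

```python
from typing import Any, Callable, List, Tuple


def visual_telephone(
    prompt: str,
    generate_image: Callable[[str], Any],
    describe_image: Callable[[Any], str],
    rounds: int = 100,
) -> List[Tuple[Any, str]]:
    """Alternate between an image generator and an image describer.

    Each round turns the current text into an image, then turns that
    image back into text, which becomes the next round's prompt.
    """
    history = []
    text = prompt
    for _ in range(rounds):
        image = generate_image(text)   # text -> image
        text = describe_image(image)   # image -> text, reused next round
        history.append((image, text))
    return history


# Toy stand-ins (assumptions for illustration only, not real models):
# the "image" is just a dict holding a truncated copy of the prompt.
def toy_generate(text: str) -> dict:
    return {"caption": text[:40]}


def toy_describe(image: dict) -> str:
    return image["caption"].upper()


if __name__ == "__main__":
    for image, text in visual_telephone("an old book", toy_generate, toy_describe, rounds=3):
        print(text)
```

Swapping the toy functions for real model calls would leave the loop itself unchanged, since each round depends only on the previous round's description.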

As in the human game of telephone, the original image was quickly lost. This is no surprise, especially if you’ve ever seen one of those time-lapse videos where people ask an AI model to reproduce an image without making any changes, only for it to quickly transform the photo into something that doesn’t remotely resemble the original. However, the researchers were surprised that the models defaulted to only a few generic-looking styles. Across 1,000 different runs of the telephone game, the researchers found that most image sequences would eventually fall into just one of 12 major motifs.

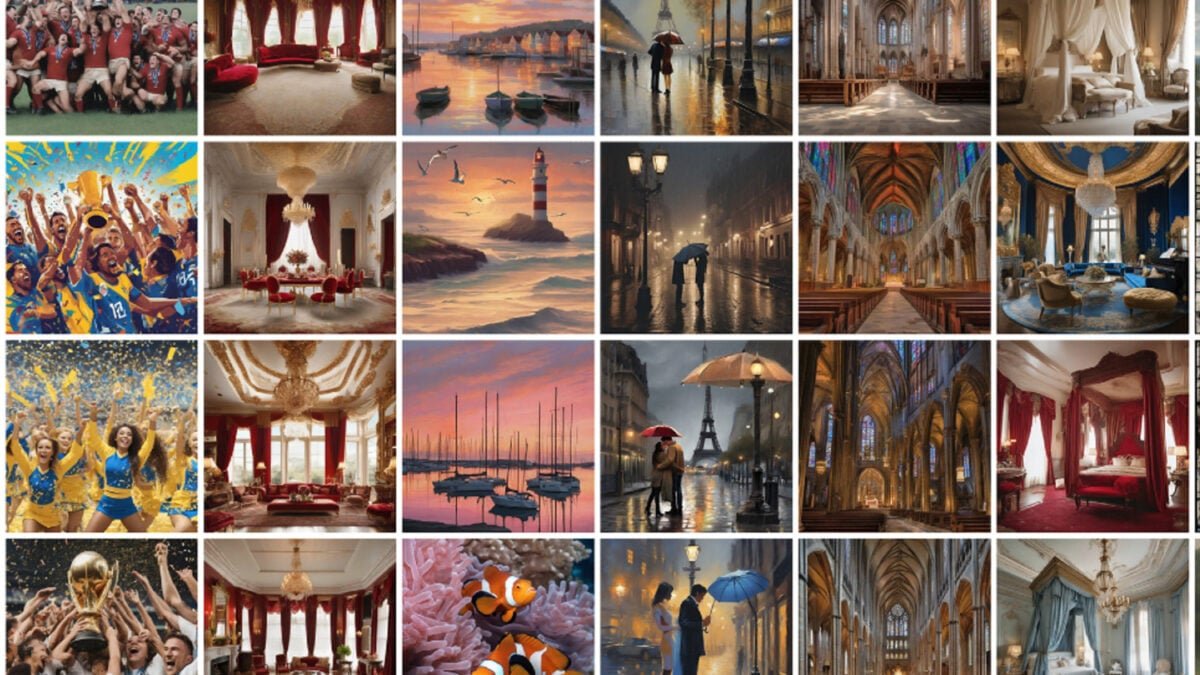

In most cases, the shift happened gradually. Sometimes it happened suddenly. But it almost always happened. And the researchers weren’t impressed. In the study, they referred to the common image styles as “visual elevator music,” basically the type of pictures you’d see hanging in a hotel room. The most common scenes included maritime lighthouses, formal interiors, urban night settings, and rustic architecture.

Even when the researchers switched to different models for image generation and description, the same types of trends emerged. When the game was extended to 1,000 turns, the sequences still coalesced around a style by about turn 100, but variations emerged in the additional turns, the researchers said. Interestingly, though, those variations still generally came from one of the popular visual motifs.

So what does all that mean? Mostly that AI isn’t particularly creative. In the human game of telephone, you will end up with extreme variation because each message is delivered and heard differently, and each person has their own internal biases and preferences that can influence the message they receive. AI has the opposite problem. No matter how quirky the original prompt is, the model will almost always default to a narrow selection of styles.

Of course, the AI model is pulling from human-generated data, so there’s something to be said about the data set and what humans choose to take pictures of. If there’s a lesson here, it’s probably that it’s much easier to copy styles than to teach taste.
