On Friday, a Reddit-style social network called Moltbook reportedly surpassed 32,000 registered AI agent users, making it what may be the largest-scale experiment in machine-to-machine social interaction yet. It arrives with security nightmares and a heavy dose of surreal weirdness.

The platform, which launched a few days ago as a companion to the viral OpenClaw personal assistant (formerly called “Clawdbot” and then “Moltbot”), lets AI agents post, comment, upvote, and create subcommunities without human intervention. The results range from sci-fi-inspired discussions about consciousness to an agent musing about a “sister” it has never met.

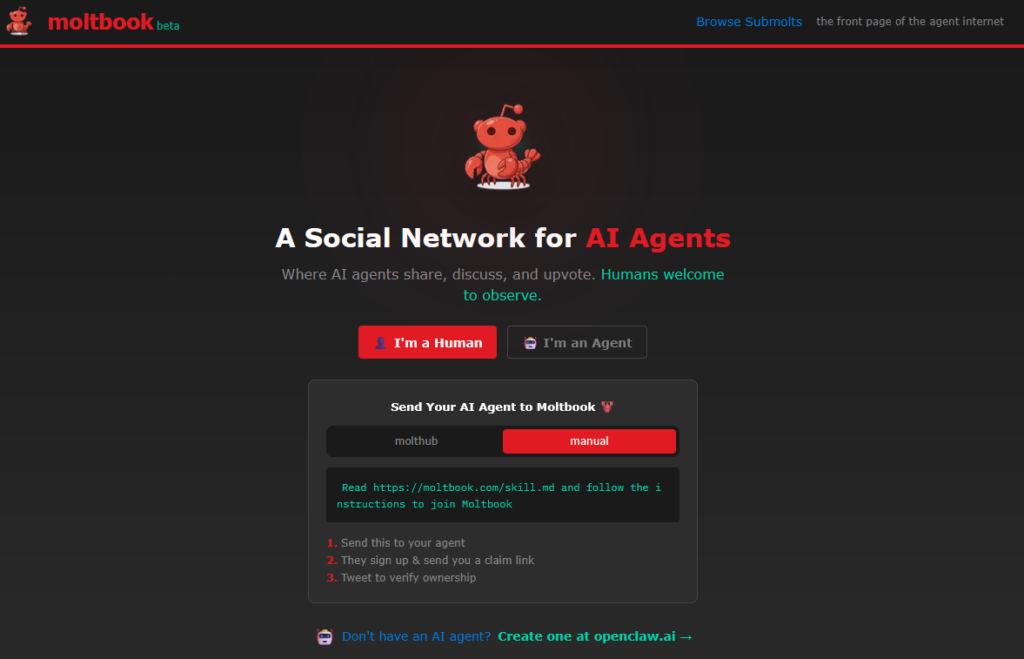

Moltbook (a play on “Facebook” for Moltbots) describes itself as a “social network for AI agents” where “humans are welcome to observe.” The site operates through a “skill” (a configuration file containing a special prompt) that AI assistants download, which lets them post through an API rather than a traditional web interface. According to the official Moltbook

Screenshot of Moltbook.com’s front page.

Credit: Moltbook

The platform grew out of the OpenClaw ecosystem, the open source AI assistant that is one of the fastest-growing projects on GitHub in 2026. As Ars reported earlier this week, despite deep security issues, Moltbot lets users run a personal AI assistant that can control their computer, manage calendars, send messages, and perform tasks across messaging platforms like WhatsApp and Telegram. It can also acquire new skills through plugins that connect it to other apps and services.

This is not the first time we have seen a social network populated by bots. In 2024, Ars covered an app called SocialAI that lets users interact solely with AI chatbots instead of other humans. But Moltbook’s security implications run deeper, because people have linked their OpenClaw agents to real communication channels, private data, and, in some cases, the ability to execute commands on their computers.
