I recently tried to light the tinder for what I hoped would be a rebellion, the Single Node Rebellion, but of course it fizzled out immediately. To be honest, it was one of the most popular articles I’ve written in a while, based purely on the stats.

The fact that I even sold t-shirts tells me that I have gained some acolytes in this troubled Lake House world.

Without repeating the entire article, what I was describing is something I call “cluster fatigue.” We all know it, but never talk about it… much. Running a SaaS Lake House is emotionally and financially expensive. It was all well and good during the peak Covid days when we had our mini dot-com bubble, but the air has gone out of that.

Not only is it not cheap to crunch 650GB of data on a Spark cluster (the DBUs pile up, truth be told), it’s not complicated either; they’ve made it easy to spend money. Especially when you simply don’t need a cluster anymore for *most* datasets and workloads. Sure, back in the days of Pandas, when that was our only non-Spark option, we had no choice. But DuckDB, Polars, and Daft (also known as DPD, because why not) have buried that argument in a shallow grave.

Sometimes I feel like I should overcome skepticism with a little show-and-tell. The proof is in the pudding, they say. If you want proof, I will give it.

Look, it’s not always easy, but always rewarding.

We have two options on the table. Like Neo, you have to choose which pill to take. Okay, maybe you can take both pills, but whatever.

- Distributed
- Not distributed

Our minds have been bombarded by so much marketing hype, we are stuck in the Matrix like Neo. We just need some help to escape.

I will shove that red pill down your throat. Open up, Buttercup.

Into the breach, my friends; let’s get to it.

OK, so to at least simulate a production-like environment, with data that is still small but close to reality, let’s get our test setup going and see if we can choke Polars and DuckDB (and Daft, to keep them honest), because we know Spark will have no problem with data of this size.

The steps will be simple.

- Create a Delta Lake table in S3.
- On a small but appropriately sized EC2 instance, run…
- Compare all this with Spark.

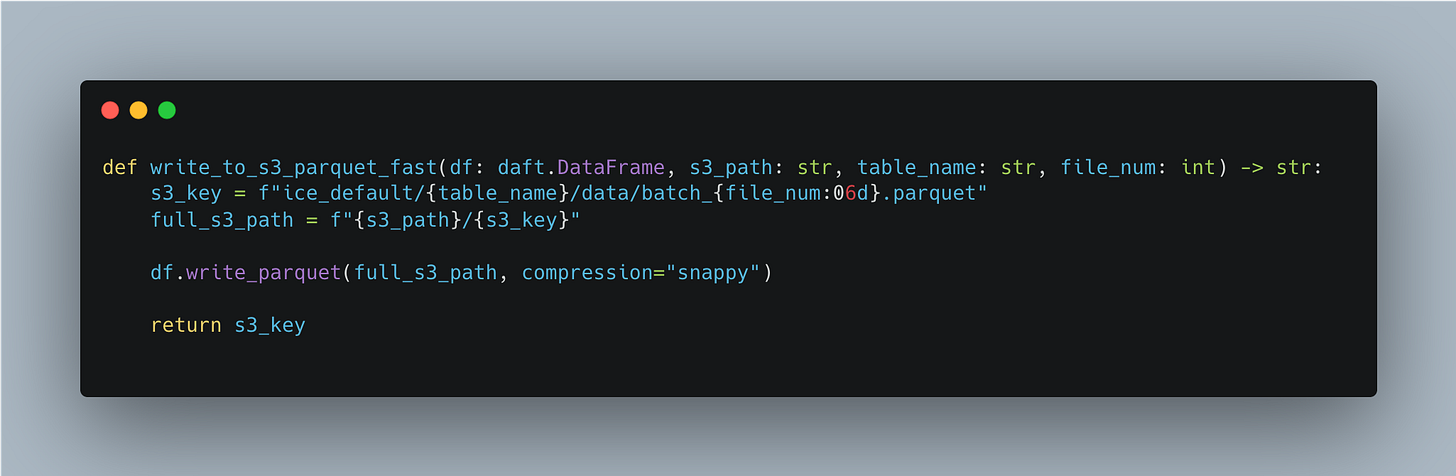

Next, we have to somehow generate 650GB of data. What we will do is simply mock up some data that looks like social media posts, build it up in Python, and convert it into a Daft DataFrame that can be written to Parquet files.

Once we have the dataframe in Daft, we can pump it to S3.

Now, we just have to do this a million times, waiting for 650GB of data to accumulate.
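For anyone who wants to try something similar, here is a minimal sketch of that generate-and-write loop. The column names, the `make_posts` and `write_batch` helpers, and the bucket path are all made up for illustration. The only real library calls are Daft’s `from_pydict` and `write_parquet`, and this is not necessarily the exact script behind the 650GB.

```python
import random
import uuid
from datetime import datetime, timedelta


def make_posts(n, seed=None):
    """Return a column-oriented dict holding n fake social media posts.

    Every column name here is an assumption made for illustration.
    """
    rng = random.Random(seed)
    start = datetime(2020, 1, 1)
    span_seconds = 5 * 365 * 24 * 3600  # spread posts over roughly five years
    return {
        "post_id": [str(uuid.UUID(int=rng.getrandbits(128))) for _ in range(n)],
        "user_id": [rng.randint(1, 1_000_000) for _ in range(n)],
        "created_at": [
            start + timedelta(seconds=rng.randrange(span_seconds)) for _ in range(n)
        ],
        "content": [f"post body {rng.randrange(10**9)}" for _ in range(n)],
        "likes": [rng.randint(0, 10_000) for _ in range(n)],
    }


def write_batch(posts, root_dir):
    """Convert one batch to a Daft DataFrame and write it out as Parquet."""
    import daft  # third-party; imported lazily so the generator works without it

    df = daft.from_pydict(posts)
    df.write_parquet(root_dir)


# Usage (hypothetical bucket; repeat until enough data has piled up):
# for _ in range(1_000_000):
#     write_batch(make_posts(100_000), "s3://my-bucket/raw/posts/")
```

Keeping the generator free of third-party imports means the batch shape can be checked locally before anything is sent to S3.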

Basically, at this point, I left my laptop to run all night and went to bed, with dreams of AWS bills disturbing my sleep.

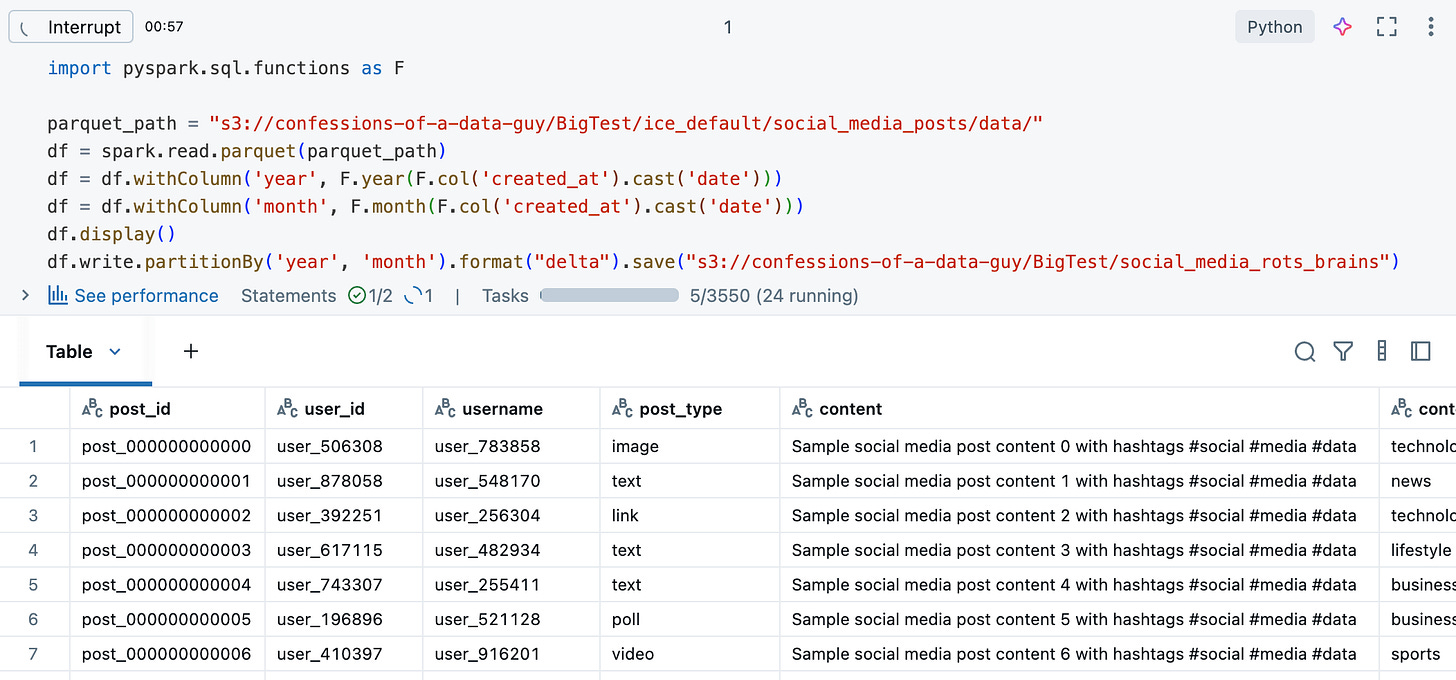

Next, we need to convert these Parquet files into Delta Lake tables. Quite easy on Databricks.

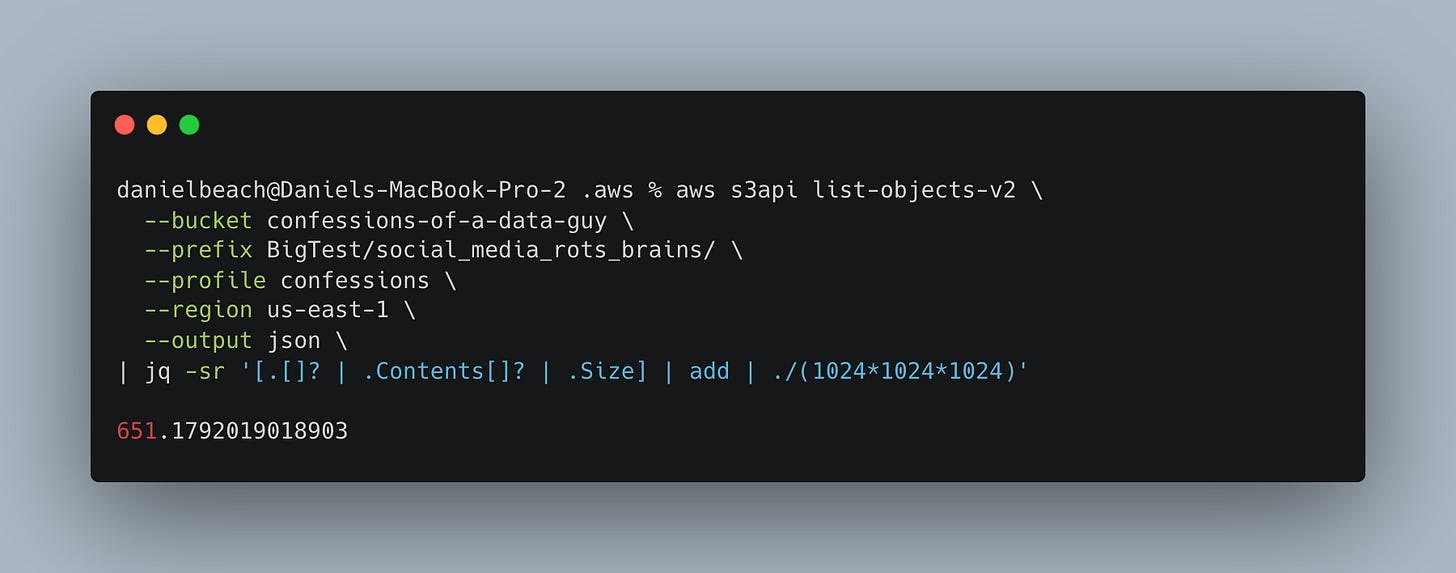

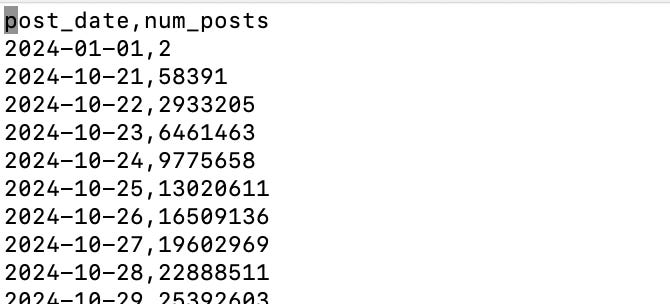

Note: I have partitioned the data by year and month. We can see here that we have 650GB of data, excluding the Delta logs.

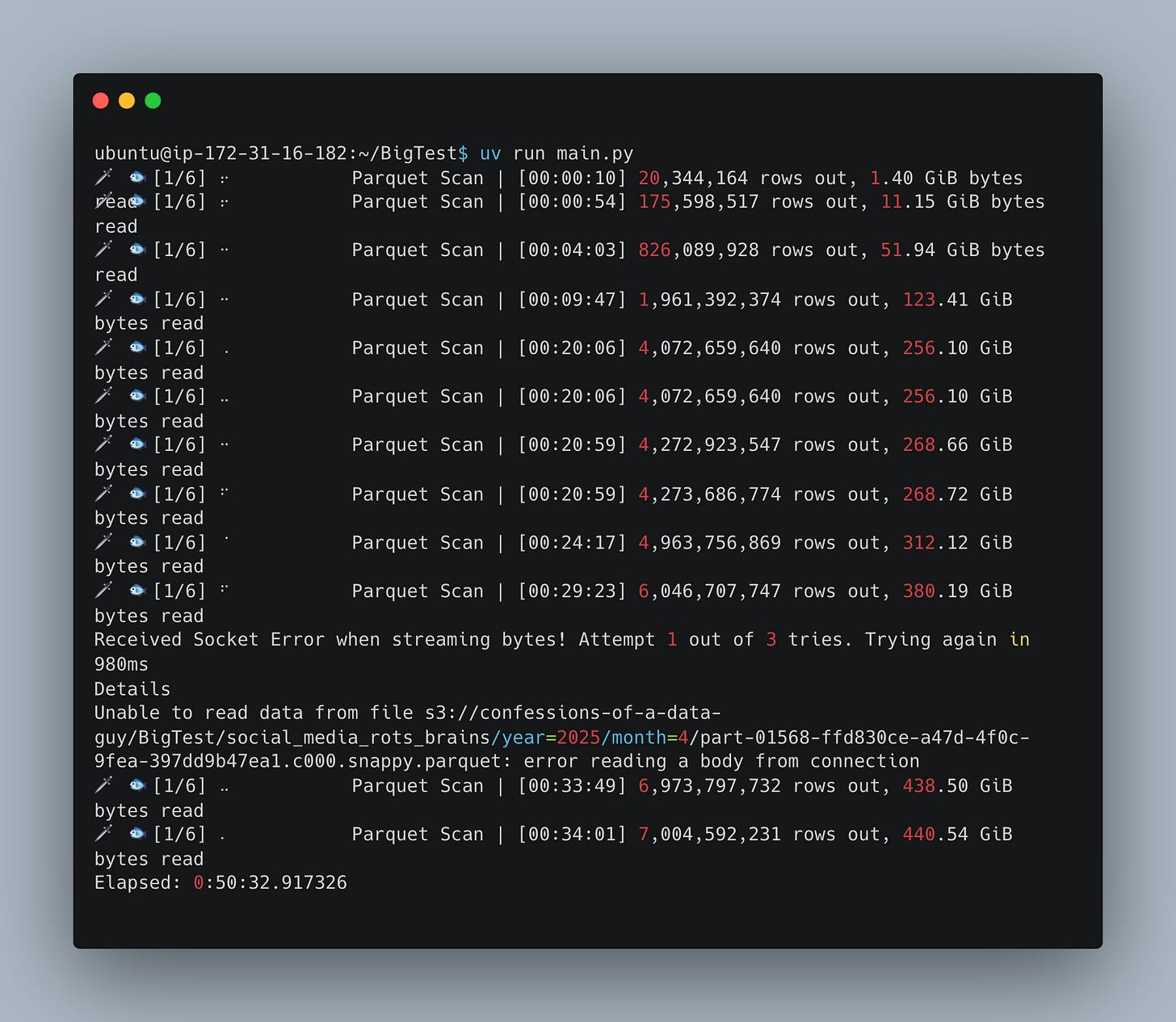

The problem is that we are, or will be, on a single-node architecture with only 32GB of memory available for 650GB of data, so we will need a streaming option when running the query to see if DuckDB, Polars, and Daft can handle the load.

In the real world of Lake Houses, where we could use either Delta Lake or Iceberg, if we wanted to do this in production, we would want tools that do not OOM and can work on datasets larger than memory.
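To make “larger than memory” concrete, here is a minimal sketch in plain Python of what a streaming aggregation boils down to: only one batch and the running counts are held in memory at a time, so memory grows with the number of groups rather than the number of rows. The `count_by_day` and `demo_batches` names are made up for illustration, and this is a toy model, not how any of these engines is actually implemented.

```python
from collections import Counter
from datetime import date, datetime
from typing import Dict, Iterable, List


def count_by_day(batches: Iterable[List[datetime]]) -> Dict[date, int]:
    """Count timestamps per calendar day, consuming one batch at a time."""
    counts: Counter = Counter()
    for batch in batches:  # each batch can be dropped once it has been counted
        for ts in batch:
            counts[ts.date()] += 1
    return dict(counts)


def demo_batches():
    """Stand-in for reading a large table chunk by chunk."""
    yield [datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 17)]
    yield [datetime(2024, 1, 2, 8)]


result = count_by_day(demo_batches())
```

A daily count over several years of posts needs only a few thousand counters, which is why an aggregation like this can fit in 32GB no matter how many rows stream past.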

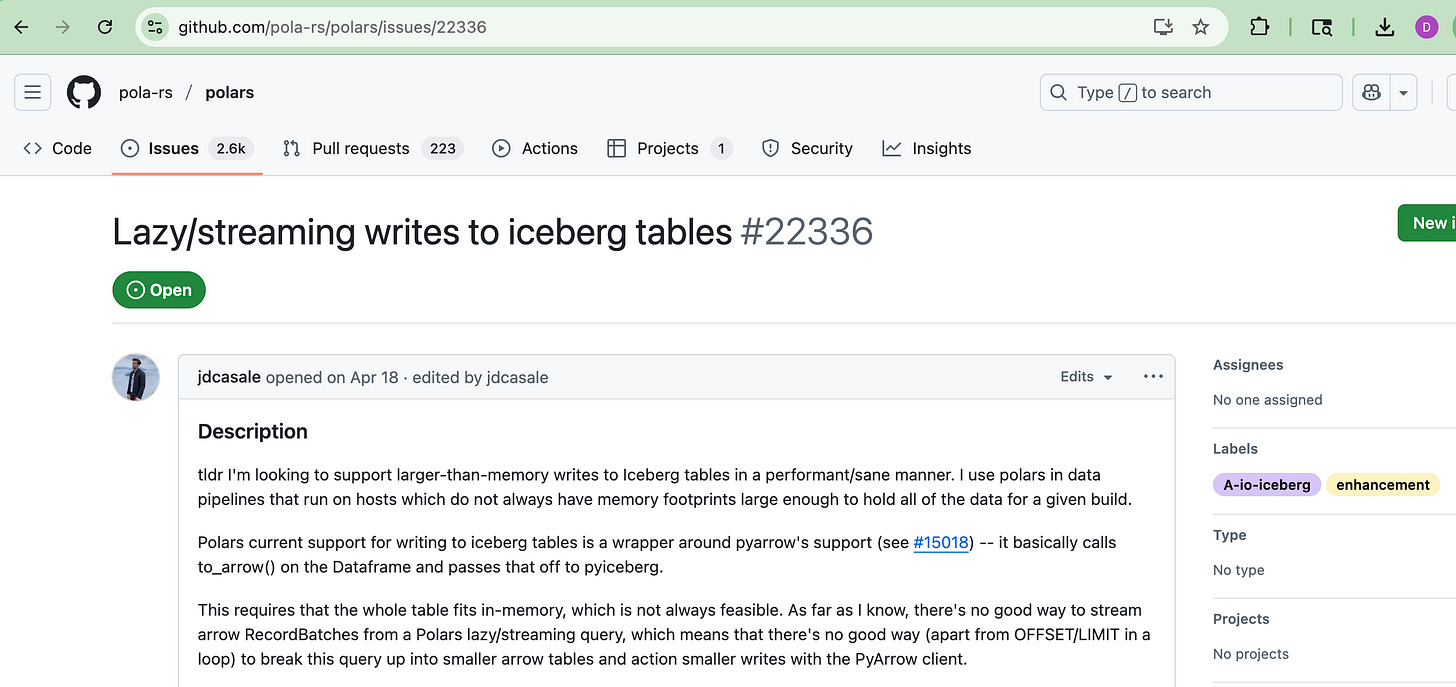

Again, this is what we are trying to answer: is it even remotely possible? Obviously, this is not an uncommon use case, and it is a problem that needs to be solved. See the open Polars issue below, where someone has this exact problem and wants an out-of-the-box way to “stream writes to Iceberg”.

What I’m trying to say is that we need all the new frameworks like Polars, DuckDB, etc. to get out-of-the-box support for reading and writing Lake House formats in a streaming manner, which will reduce memory pressure.

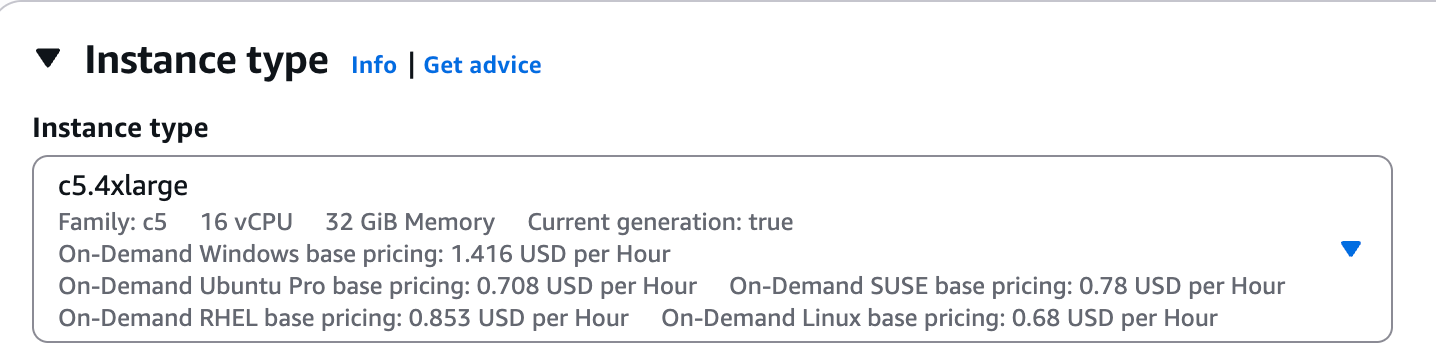

Here we have a 32GB, 16 CPU EC2 instance on AWS. This is a perfectly normal size and would be considered commodity-sized hardware. Many Spark clusters are composed of these node sizes.

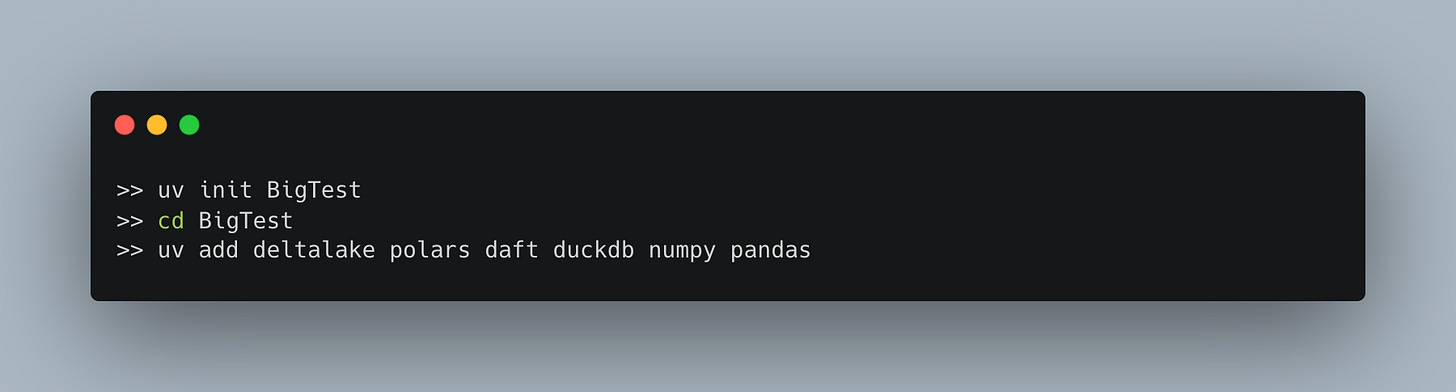

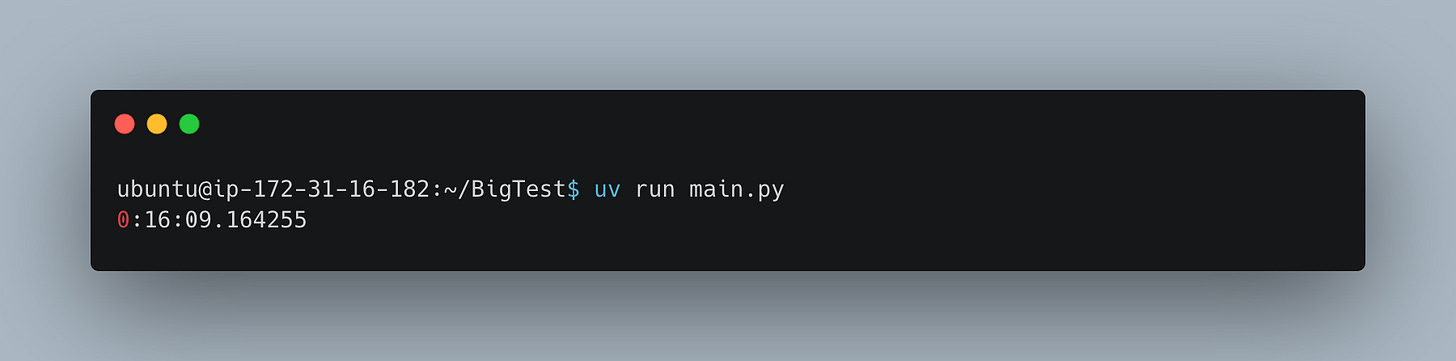

Once the node is running, we will install uv and use it to install the necessary tooling.

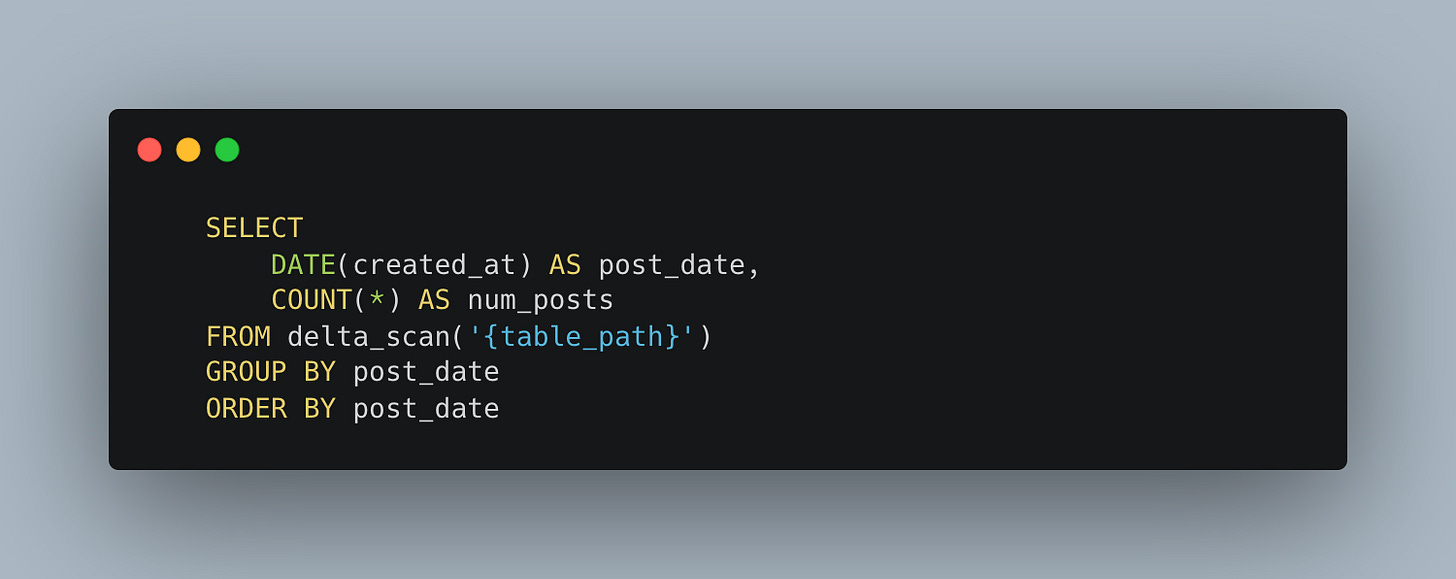

Not sure if you saw it above or not, but I’m using a fake social media posts dataset. Now we need a query that reads the entire dataset and does something like aggregation.

That simple query should be enough to push these single-node frameworks to the limits of the EC2 instance, with 650GB of data against 32GB of RAM. They have to eat that entire dataset.

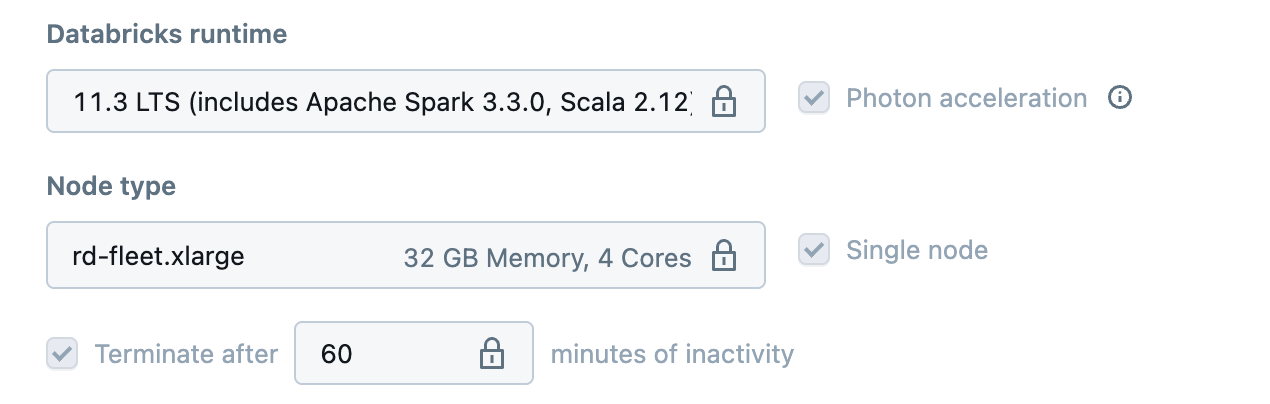

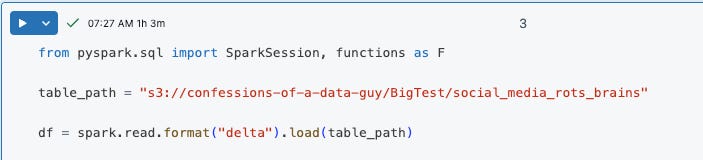

We’ll also run it on a PySpark Databricks single-node cluster to see how these tools stack up against the GOAT.

You can see below that I had to downgrade to an older DBR version when creating the Delta table, so that no deletion vectors would be used. DuckDB is the only one capable of handling deletion vectors. A serious flaw and shortcoming in Polars.

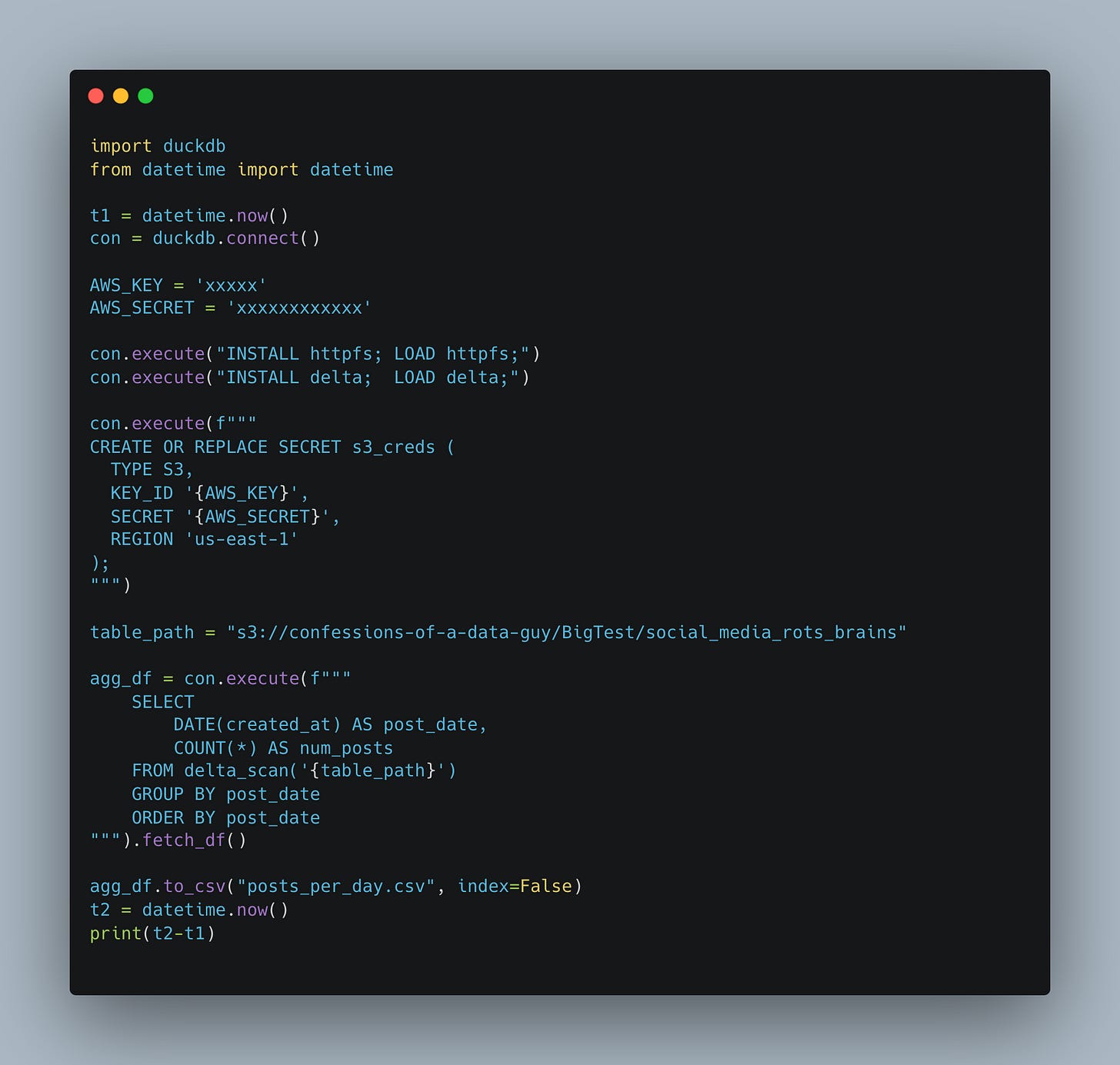

We’re going to start with that clever little DuckDB. You know, this tool has grown on me more and more as I’ve used it. I just had to replace Polars in a production Databricks environment because DuckDB was the only tool that could handle deletion vectors.

Everything MotherDuck touches turns to gold.

The code is simple and clean. Can DuckDB crunch 650GB of S3 Lake House data on a 32GB commodity Linux machine and come out the other side with all wings intact?

Well, I’ll be. It worked.
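A minimal sketch of what such a DuckDB job could look like, assuming a hypothetical table path and a `created_at` timestamp column. The `delta` extension, its `delta_scan` function, the credential-chain S3 secret, and `COPY ... TO` are real DuckDB features, but the query itself is an illustration rather than the exact one from this run.

```python
def build_query(table_uri: str, out_path: str) -> str:
    """Build a daily post count over a Delta table, written to a local CSV.

    The created_at column is an assumption about the schema.
    """
    return f"""
        COPY (
            SELECT CAST(created_at AS DATE) AS post_date,
                   COUNT(*) AS num_posts
            FROM delta_scan('{table_uri}')
            GROUP BY post_date
            ORDER BY post_date
        ) TO '{out_path}' (HEADER, DELIMITER ',');
    """


def run(table_uri: str, out_path: str) -> None:
    """Execute the aggregation with DuckDB, reading straight from S3."""
    import duckdb  # third-party; imported lazily

    con = duckdb.connect()
    con.execute("INSTALL delta")
    con.execute("LOAD delta")
    # Pick up AWS credentials from the environment or instance profile.
    con.execute("CREATE SECRET (TYPE S3, PROVIDER CREDENTIAL_CHAIN)")
    con.execute(build_query(table_uri, out_path))


# Usage (hypothetical path):
# run("s3://my-bucket/delta/posts", "duckdb_results.csv")
```

Writing the result with `COPY` keeps the output on local disk instead of pulling it back into Python, which matches the idea of checking a results file afterwards.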

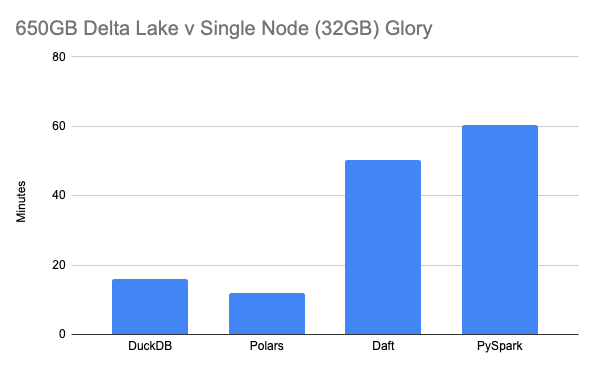

16 minutes. Hey, looks like a single node can handle 650GB of data. Didn’t even play with any settings. Indeed, using Vim, I can see that the local file was written with the results.

Well, nothing to it; on to the next one! The Single Node Rebellion is alive and well.

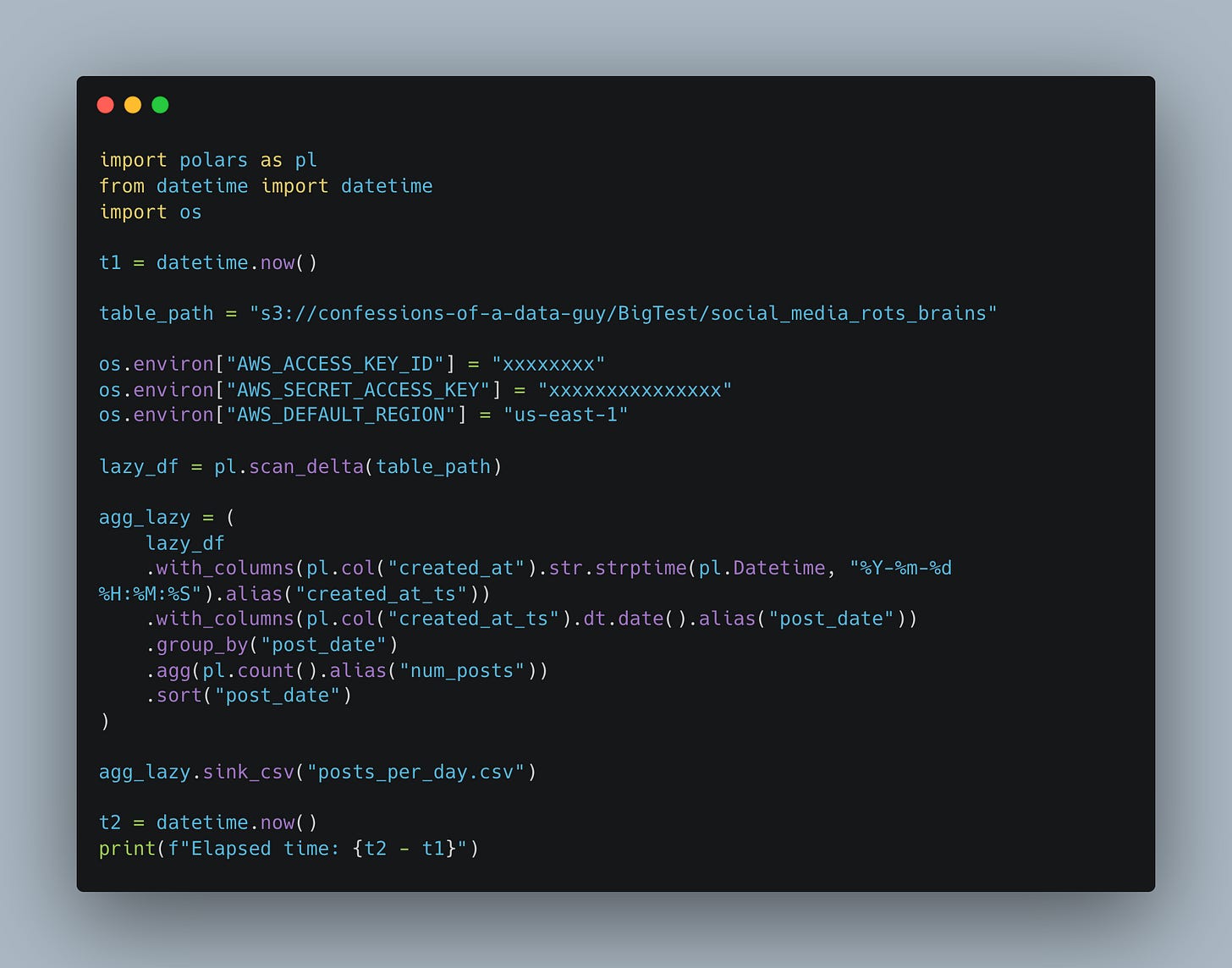

Well, here we go. I have a bad taste in my mouth about the lack of deletion vector support, which makes Polars useless in newer Lake House environments. Stinky.

But I’ll hold back my disdain, so let’s look at our Polars code. Nice and clean, a good-looking thing.

Remember, with Polars, you have to use the Lazy API to get the job done, aka scan and sink. If you don’t, it will blow a gasket.

There we go, no problem, 12 minutes for the Rusty GOAT. Beats DuckDB by a few minutes. But we’re all friends here, we’re just proving a point, and it’s going well.
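The scan-and-sink pattern can be sketched roughly like this, assuming a hypothetical table path and a `created_at` timestamp column. `scan_delta` and `sink_csv` are real Polars calls (the former needs the `deltalake` package installed), but the query is an illustration rather than the exact code from this run.

```python
def scan_and_sink(table_uri: str, out_path: str) -> None:
    """Lazily scan a Delta table, count posts per day, and sink to CSV.

    Nothing is materialized in memory as a full DataFrame: the plan is built
    lazily and the sink streams the result to disk.
    """
    import polars as pl  # third-party; imported lazily

    (
        pl.scan_delta(table_uri)  # credentials assumed to come from the environment
        .group_by(pl.col("created_at").dt.date().alias("post_date"))
        .agg(pl.len().alias("num_posts"))
        .sort("post_date")
        .sink_csv(out_path)
    )


# Usage (hypothetical path):
# scan_and_sink("s3://my-bucket/delta/posts", "polars_results.csv")
```

The eager equivalent, `read_delta` followed by a `group_by`, would try to load the whole table first, which is exactly the gasket-blowing scenario on a 32GB box.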

These single-node engines are devouring 650GB without any problems. (I checked the results of the local file, everything was fine)

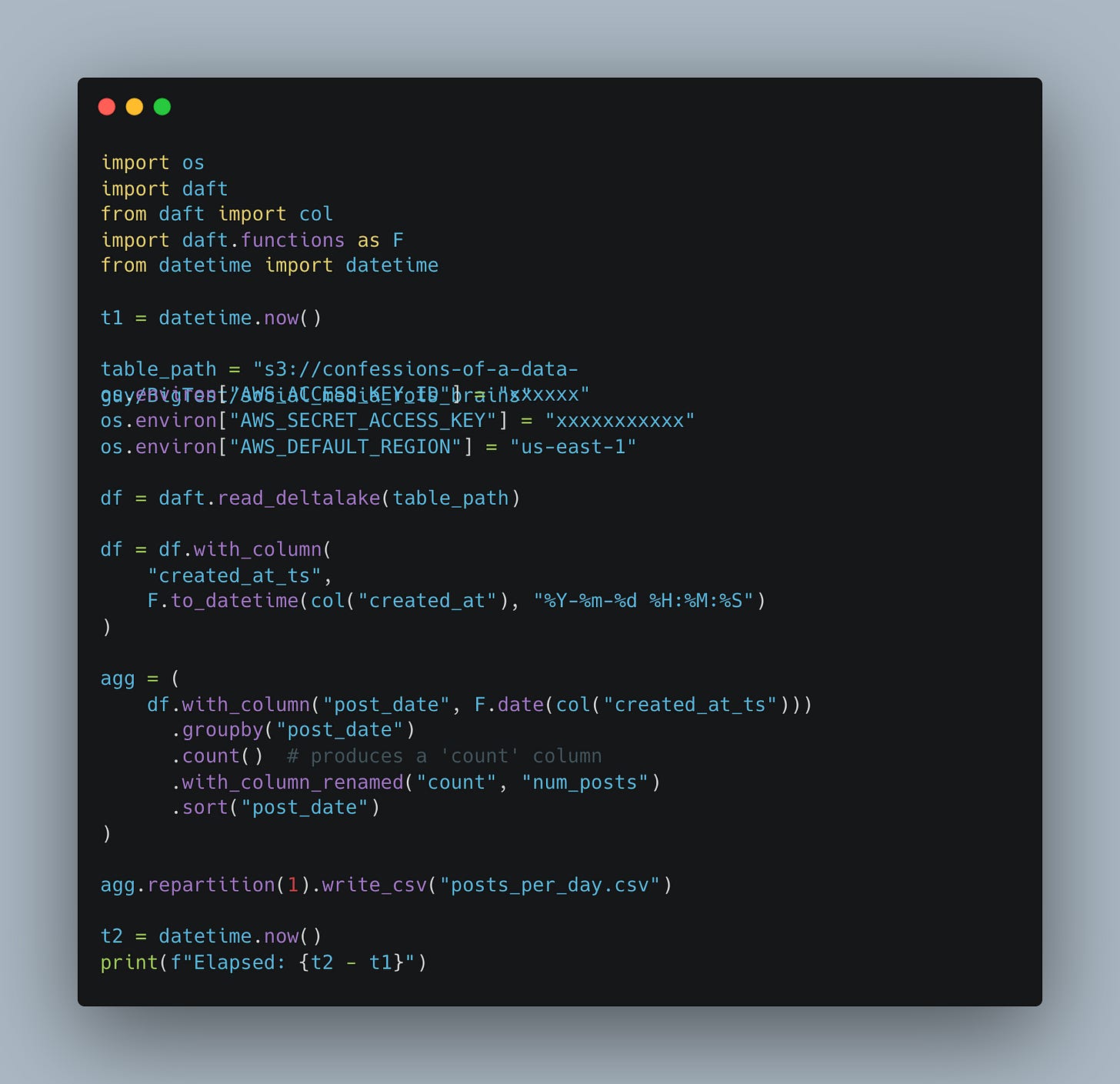

One of my personal favorites is Daft. It is Rust-based, and it screams whenever you put it to work. Sleek and fun to use. I was working on some Iceberg stuff recently, and Daft was the only thing that worked.

What a beauty.

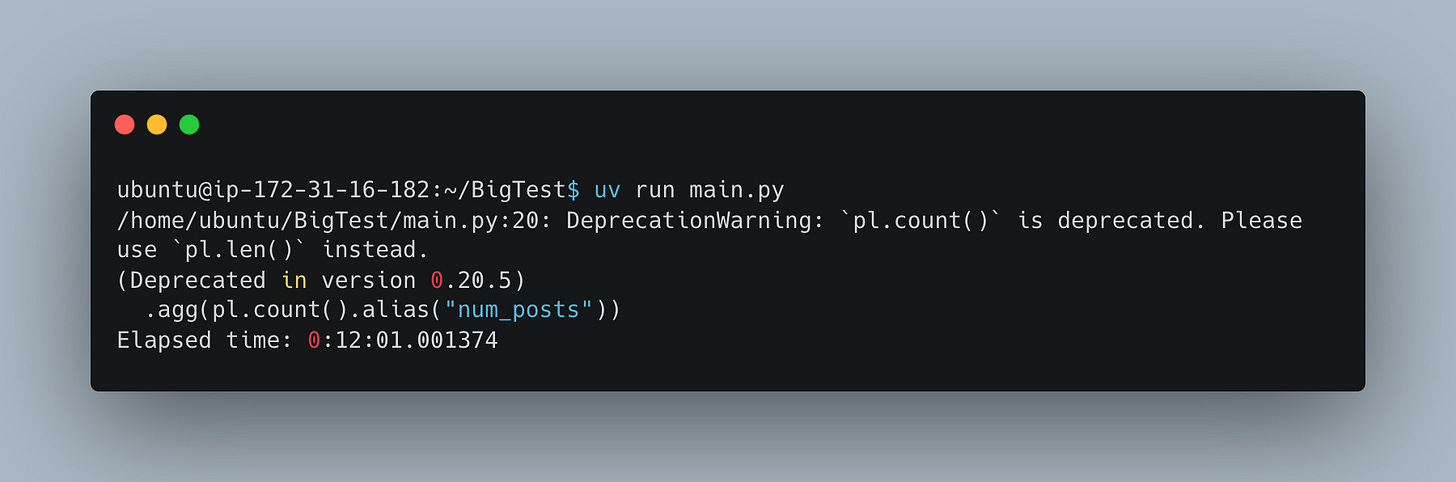

There isn’t much difference code-wise between Daft and Polars, both being Rust-based. Man, it was slow. There, I said it; put a dagger in my heart.

50 minutes is better than nothing, I think. I’m no expert in Daft; I just did what I did, and probably got something a little wrong.

OK, so this is actually our anchor point: a single-node Databricks cluster with 32GB and 4 CPUs, which matches our EC2 quite well, or close enough. Aren’t you curious to see how it holds up against the single-node buggers?

I don’t really care how fast it is; the result is about what one would expect. The key point is: can we move work off expensive DBUs and other distributed compute engines and onto a single node?

Dang, over an hour.

Admittedly, PySpark is the most likely to suffer without tuning, but we will let the angry people be angry. I mean, we all know it should be `spark.conf.set("spark.sql.shuffle.partitions", "16")` instead of the default 200. But whatever.

We’re just saying that single-node Glory can do the same thing.

Of course, this was no scientific TPC benchmark; not that those are fair half the time. We weren’t as concerned about who was the fastest as about whether these single-node tools can handle large Lake House datasets on a small memory footprint without giving up the ghost.

We proved that.

- Single-node frameworks can handle large datasets
- Single-node frameworks can integrate into lake houses
- They can provide reasonable runtime on cheap hardware
- The code is easy and simple

Really, we have not been thinking outside the box with modern Lake House architecture. Just because Pandas failed us doesn’t mean distributed computing is our only option.