Chris Lattner has created some of the most influential programming languages and compiler technologies of the past 20 years. He created LLVM, which is used by languages like Swift, Rust, and C++; he created the Swift programming language, worked on TensorFlow, and now works on the Mojo programming language. In this conversation, they cover the origin story of LLVM and how Chris convinced Apple to move all of its major developer tools over to this new technology; how Chris created Swift at Apple, including how the team worked on the new language in secrecy for a year and a half; why Chris expects Mojo to make building efficient AI programs easier and faster; how Chris uses AI tools and what productivity improvements he sees as a very experienced programmer; and much more. If you’d like to understand how a truly standout software engineer like Chris thinks and gets things done, and how he’s designing a language that could become a very important part of AI engineering, then this episode is for you.

Chris Lattner encourages his team to use AI coding tools like Claude Code and Cursor. For experienced programmers like himself, these tools provide about 10% productivity gains, mainly by handling mechanical rewrites and reducing tedious work, which increases both productivity and coding enjoyment.

However, the impact varies significantly by use case. For PMs and prototypers building wireframes, AI tools are transformative—enabling 10x productivity improvements on tasks that might not otherwise get done. But for production coding, results are mixed. Sometimes AI agents spend excessive time and tokens on problems a human could solve faster directly.

His key concern: programmers must keep their brains engaged. AI should be a “human assist, not a human replacement.” For production applications, developers need to review code, understand architecture, and maintain deep system knowledge. What he cares about most is keeping production architecture clean and well-curated—it doesn’t need to be perfect, but it needs human oversight. AI coding tools can go crazy, duplicating code in different places, which creates maintenance nightmares when you update two out of three instances and introduce bugs. “Vibe coding” (letting AI handle everything) is risky—not just for jobs, but because it makes future architectural changes nearly impossible when no one understands how the system works. As Chris puts it: “The tools are amazing, but they still need adult supervision.” Keeping humans in the loop is essential for security, performance, and long-term maintainability.
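To make the duplication risk concrete, here is a small, hypothetical Python sketch (the function names and the discount rule are invented for illustration, not taken from the conversation). The same rule is copied into three functions, a later policy change reaches only two of them, and the third silently disagrees. Routing every caller through one helper is the kind of cleanup a human reviewer would insist on.

```python
# Hypothetical example: one business rule ("take 10 off orders over 100")
# copy-pasted into three places, as an unsupervised agent might produce.

def checkout_total(price: int) -> int:
    return price - 10 if price > 100 else price  # updated to the new threshold

def invoice_total(price: int) -> int:
    return price - 10 if price > 100 else price  # updated to the new threshold

def quote_total(price: int) -> int:
    return price - 10 if price > 50 else price   # missed: still the old threshold


# The cleaner alternative: one helper is the single source of truth,
# so a policy change is made exactly once.
DISCOUNT_THRESHOLD = 100
DISCOUNT_AMOUNT = 10

def apply_discount(price: int) -> int:
    return price - DISCOUNT_AMOUNT if price > DISCOUNT_THRESHOLD else price

if __name__ == "__main__":
    # With the duplicated copies, the same order gets two different totals.
    print(checkout_total(80), quote_total(80))      # 80 70
    print(apply_discount(80), apply_discount(150))  # 80 140
```

The bug is not in any single function; each copy looks reasonable on its own. It only appears when someone who understands the whole system notices that three places encode one rule.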

Chris Lattner’s team hires two types of people: super-specialized experts (compiler nerds, GPU programmers with 10+ years of experience) and people fresh out of school. He finds early-career hires particularly exciting because “they haven’t learned all the bad things yet.”

For early-career candidates, he looks for intellectual curiosity and hunger: people who haven’t given in to the idea that “AI will do everything for me.” He wants fearless individuals willing to tackle things that sound terrifying or “impossible and doomed to failure” with a “how hard can it be? Let’s figure it out” attitude. Hard work and persistence are essential in the rapidly changing AI space, where many people freeze up instead of adapting.

He particularly values open source contributions, which he considers the best way to prove you can write code and work with a team—a huge part of real software engineering. Internships and hands-on experience also matter. During conversations, he can tell when candidates are genuinely excited about what they do versus just “performatively going through the motions.”

His interview philosophy emphasizes letting candidates use their native tools, including AI coding assistants for mechanical tasks. Making people code on whiteboards without their normal tools would be “very strange” today. He also recognizes that nervousness affects performance, so creating a comfortable environment matters.

Chris Lattner argues that with AI making code writing easier than ever, the focus should shift to code readability, not optimizing for LLMs. Code has always been read more often than it’s written, and that hasn’t changed.

For him, great programming languages need two things in balance: expressivity (can you express the full power of the hardware?) and readability (can you understand the code and build scalable abstractions?). JavaScript can’t write efficient GPU kernels because it lacks expressivity. Assembly code has full expressivity but no readability. The sweet spot is the intersection of both.

This is why Mojo embraces Python’s syntax—it’s widely known and easy to read—while replacing Python’s entire implementation to unlock full hardware performance. He believes LLMs will continue improving at handling any language quirks, and they’re already an amazing way to learn new languages.

His advice for making code LLM-friendly? Make it human-friendly first. Better error messages for humans are better for agents too. The most important thing for LLM-based coding is having massive amounts of open source code—Modular’s repo has 700,000 lines of Mojo code, complete with full history, giving LLMs a huge dataset to learn from.

On why compilers are cool: unlike other university classes where you “build a thing, turn it in, throw it away,” compiler courses teach iterative development. You build a lexer, then a parser on top of it, then a type checker, and keep building higher. If you make mistakes, you must go back and fix them—mirroring real software development. Today it’s easier than ever to learn compilers through resources like LLVM’s Kaleidoscope Tutorial and the Rust community. While not everyone needs to become a compiler engineer, the field deserves more credit and offers great career opportunities.
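To show what that layering looks like, here is a minimal Python sketch in the spirit of (but not taken from) the Kaleidoscope tutorial: a lexer, a recursive-descent parser built on its tokens, and an evaluator built on the parser’s tree. The grammar (integers, `+`, `-`, `*`, parentheses) and every name in it are assumptions chosen for brevity. A real course would add a type checker and code generation on top. Notice that a mistake in the lexer only surfaces when a later stage consumes its output, which is exactly the “go back and fix it” loop described above.

```python
from dataclasses import dataclass
from typing import Optional, Union

# Stage 1: the lexer turns raw text into a flat list of tokens.
def tokenize(src: str) -> list[str]:
    tokens, i = [], 0
    while i < len(src):
        ch = src[i]
        if ch.isspace():
            i += 1
        elif ch.isdigit():
            j = i
            while j < len(src) and src[j].isdigit():
                j += 1
            tokens.append(src[i:j])  # multi-digit integer literal
            i = j
        elif ch in "+-*()":
            tokens.append(ch)
            i += 1
        else:
            raise SyntaxError(f"unexpected character {ch!r}")
    return tokens

# Stage 2: the parser builds a tree on top of the lexer's tokens.
@dataclass
class Num:
    value: int

@dataclass
class BinOp:
    op: str
    left: "Expr"
    right: "Expr"

Expr = Union[Num, BinOp]

class Parser:
    """Recursive descent for:
         expr   := term (('+' | '-') term)*
         term   := factor ('*' factor)*
         factor := NUMBER | '(' expr ')'"""

    def __init__(self, tokens: list[str]) -> None:
        self.tokens, self.pos = tokens, 0

    def peek(self) -> Optional[str]:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self) -> Optional[str]:
        tok = self.peek()
        self.pos += 1
        return tok

    def parse(self) -> Expr:
        node = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return node

    def expr(self) -> Expr:
        node = self.term()
        while self.peek() in ("+", "-"):
            op = self.advance()
            node = BinOp(op, node, self.term())  # left-associative
        return node

    def term(self) -> Expr:
        node = self.factor()
        while self.peek() == "*":
            node = BinOp(self.advance(), node, self.factor())
        return node

    def factor(self) -> Expr:
        tok = self.advance()
        if tok is not None and tok.isdigit():
            return Num(int(tok))
        if tok == "(":
            node = self.expr()
            if self.advance() != ")":
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {tok!r}")

# Stage 3: the evaluator walks the parser's tree.
def evaluate(node: Expr) -> int:
    if isinstance(node, Num):
        return node.value
    left, right = evaluate(node.left), evaluate(node.right)
    if node.op == "+":
        return left + right
    if node.op == "-":
        return left - right
    return left * right  # the parser only produces '+', '-', or '*'

if __name__ == "__main__":
    print(evaluate(Parser(tokenize("2 + 3 * (4 - 1)")).parse()))  # 11
```

Each stage only depends on the one below it, so adding division, variables, or a type-checking pass means extending the stack rather than starting over.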

He also believes we’ve reached a new, higher level of abstraction, as other authors have noted, with software engineers acting as supervisors of AI agents: reviewing, editing, and debugging AI-generated code. He also points out that for a long time, syntax and the rigidity of programming were barriers to entering the CS field, and that GenAI is now democratizing access. It’s true that we’re seeing many more people doing some form of programming.

Now, this lower barrier to entry introduces new teaching approaches for more diverse students — I’m thinking about those non-computing students interested in creating apps or websites for fun, for example, and teaching them the architectural implications of those websites. Clearly, within the CS major, the increasingly common opinion is that AI is a productivity booster for experts, and the results for novices are still unclear. As I’ve said several times in this newsletter, it’s essential to understand what we’re doing under the hood for various reasons, such as social factors, performance, and security.

Juho reminds us that companies will hire students who know more about AI, but not if they over-rely on it. One question that comes up is how we can motivate students to learn programming if there’s a tool that does it for them and banning it simply isn’t an option.

Finally, he talks about a challenge that I’m not fully convinced is 100% true — based on what several senior engineers have been saying over the past few months — but it does make sense: seniors are slowing down junior hiring because now they can use AI to handle tasks they would have previously delegated. He says this is a danger to workforce diversity.

As always, Juho makes good points. I recommend following him on LinkedIn and on Google Scholar so you don’t miss his upcoming papers — and his previous ones, which are closely related to all of this.

Historically, to get a job in the tech industry, you needed a CS degree. CS is not considered an easy major. It often involves multiple years of intense training across a variety of technical and mathematical topics. Now, just as we’ve seen in so many other parts of the industry, the rise of AI agents has made CS education more complicated. As a student, how do you learn critical thinking and problem solving when you’re just a few keystrokes away from an AI agent that will not only answer the question for you, but also give you a very detailed rundown of why things work the way they do? And, taking a step back and looking even more broadly, will a CS education still have value in the future if, as many people predict, AI agents become increasingly capable of building very complex systems with very little technical know-how required from the human in the loop?

I loved this conversation between Kirupa Chinnathambi and Elisa Cundiff.

What struck me most was:

Kirupa on entering the teaching profession (similar feelings here):

Teaching allows us to share real-world insights that students can truly benefit from. Kirupa believes that if we can simplify complicated topics and make them understandable, it proves we genuinely understand the subject—we know what matters, what doesn’t, and what can wait until day five or day ten, rather than overwhelming students with everything on day one. For him, teaching helps him stay relevant as part of his work.

Elisa Cundiff on access to information:

Current paradox: while students have better educational tools than ever before, they face the problem of not knowing what to learn or which resources to use among thousands of available options. Having access doesn’t guarantee direction.

Kirupa on struggle and sequential learning:

In the past, the scarcity of resources forced sequential and gradual learning (starting with basic HTML, then inline colors, understanding hex codes…). This process of “noodling” or struggling with basic problems generated a deep understanding of why things work. Today, with so many resources available, students never spend enough time struggling with the most basic problems, which can prevent them from developing a true appreciation of the fundamentals.

Elisa Cundiff on fundamentals in the ChatGPT era:

After the initial panic about chatbots (is it the new calculator/Wikipedia?), the emerging consensus among educators is that students must learn the fundamentals first. Without understanding the underlying concepts, they can’t audit AI code for correctness, security, maintainability, or efficiency, nor debug it. Once they master the fundamentals, then they should be introduced to AI tools to speed up their work before graduating.

Kirupa on critical thinking through struggle:

One of the best ways to develop critical thinking is through making mistakes and learning from them—breaking down questions into sub-questions and figuring out answers through trial and error. In the past, there was no Plan B when learning programming; you had to struggle through problems. If no one on Yahoo, Lycos, or Excite had the answer, you figured it out yourself through experimentation. This struggle created retention because you spent so much time learning what didn’t work before discovering what did. Today, everyone has a Plan B just five seconds away: copy-paste into a chatbot that provides a 90-99% accurate answer with an explanation. Students aren’t exploring on their own anymore—they’re just reading from the screen, memorizing rather than experiencing the struggle of true learning.

Elisa Cundiff on the illusion of learning:

Students face the illusion of having accomplished something. In her intro Python course (which counts as arts and humanities), 25% of the grade comes from writing—where she first saw widespread AI-generated essay cheating. When she talked to 35 students last fall, most acknowledged they weren’t getting anything from it and recognized the essays weren’t theirs. They kept saying “it’s too easy”—in moments of panic, they think turning something in is better than nothing. Cheating used to be harder. Most students recognize the speed-accuracy tradeoff isn’t resulting in cognitive development.

Elisa on fundamentals and critical thinking:

No matter what the future holds, students need to be critical thinkers. Basic programming concepts are little puzzles that help them learn to think. These puzzles can be made fun and are worth keeping, not only to build foundational understanding but also to develop critical thinking skills.

The conversation concludes with reflections on AI’s impact on education, comparing it to past disruptions like the internet, Wikipedia, and MOOCs. Elisa emphasizes that the educator’s role as curator becomes even more important—providing structure, end goals, and curated learning environments in a world of overwhelming information. Derek Muller’s point is referenced: each generation predicted education’s destruction (internet in the 90s, Wikipedia in the 2000s, MOOCs), but education persists because students need structured, joyful guidance that answers the “why” and builds skills. Kirupa expresses uncertainty about his own teaching role’s future value, questioning whether what he teaches will meaningfully impact students when AI provides quick answers. Both acknowledge the unprecedented uncertainty students face and the challenge of preparing them for an unknowable future.

For me, being a good educator means being a good communicator. Storytelling is a big part of teaching. This week I was listening to Fernandisco, who is a legend in Spanish music radio. He’s currently a host/DJ on the music station Los 40 Classic and directs a music magazine show in prime time. He’s a tastemaker—one of those people who filter through the avalanche of available music to help us discover—or rediscover—all the great work being done.

Here’s what I learned from him in this episode:

On being a good communicator:

For me, every day is something very special because the person listening to you comes back to you because you are part of the emotion they’ve been building throughout their lives. And it’s a daily miracle. Radio isn’t something where every five days you need to do a good show. You have to do it every day because people deserve the best.

On the algorithm:

When you’re listening to me in the morning, you know that I am the algorithm—that’s the difference. The music I’m going to play for you, I know is good, and it’s music people want to hear explained by a storyteller.

This one by Stuart Russell and Peter Norvig is perhaps the go-to book when you want an overview of AI. Here it is in web format.

Chip Huyen’s books have received excellent reviews.

And for machine learning, I’d recommend this one—especially for reviewing the mathematical foundations behind it.

If you’re looking for something concise and enjoyable, ideal for entering the world of ML, I’d probably recommend Andriy Burkov’s book.

As for deep learning, this introduction has been well received in both academia and industry.

Following on from last week’s topic, Matt Pocock on how he uses Claude Code in his programming workflow.

Laurie Gale from the Raspberry Pi Foundation has been exploring the use of PRIMM alongside debugging. He’s been developing an amazing online tool/environment that almost forces learners to interact with code and suggest ideas, debug and solve the issues.

This is a very useful instructional resource with interactive exercises for getting novice students to practice Java and Python. The exercises in section 10 are especially interesting to me.

Enjoyed this technical conversation on autonomous driving on the Google DeepMind podcast a lot. It’s worth a listen!

Survey on how CS1 instructors perceive programming quality.

The Computing Research Association (CRA) is interested in the professional development of students in the field of computing. Since you are probably no longer a grad student, you can encourage your students to complete this survey. Every response provides valuable information for future students who are considering this amazing field.

CCSCNE 2026 Submission Reminder: Conference website.

Call for Submissions: Innovations and Opportunities in Liberal Arts Computing Education (SIGCSE 2026).

Deadline Changed to Nov 16 AoE for the SIGAI Innovative AI Education Program.

ICER 2026 Call for Papers: Abstracts 20th Feb, Papers 27th Feb.

Register now for the next free ACM TechTalk, “A Look at AI Security with Mark Russinovich,” presented on Wednesday, December 3 at 1:00 PM ET/18:00 UTC by Mark Russinovich, CTO, Deputy CISO, and Technical Fellow for Microsoft Azure. Scott Hanselman, Vice President of Developer Community at Microsoft and member of the ACM Practitioner Board, will moderate the question-and-answer session.

CSUN seeks tenure-track Assistant Professor in Computer Science with expertise in areas like Cloud Computing, Cybersecurity, AI, or related fields, to teach undergraduate/graduate courses and contribute to equitable student outcomes through teaching, research, and service.

This post from Josh Brake on the FDE concept was a good read. I agree with Josh that every challenge is an opportunity for innovation. Check out the interesting apps some educators are developing to create new ways for students to engage in new and meaningful learning opportunities. This mindset of a Forward Deployed Educator offers one of those fruitful directions.

I’m thinking here about the kind of interactive visualizations that can often be a very useful tool for helping students to build intuition about a concept, and enable them to engage in curious play. It’s interactive single-page web apps that help students to test their knowledge on assembly and machine language in my digital design and computer engineering class. It’s a custom multitasking simulator to help students visualize how different scheduling algorithms perform and impact the performance and context switching overhead in a real-time operating system. Now, instead of being frustrated with the limitations and constraints of existing tools that don’t do quite what you want or the insurmountable time investment required to build a small tool to illustrate a concept in a single lesson, you can get to a serviceable demo in only a few prompts.
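For readers who have not built one, here is a hypothetical toy version of the scheduling idea in Python. It is not the simulator from Josh’s post, and it ignores real-time priorities entirely. It runs the same three tasks under first-come, first-served and round-robin and counts context switches, which is enough to see the trade-off: in this example, round-robin lowers the average turnaround time but switches more often.

```python
from collections import deque

# Toy model (an assumption for illustration): every task arrives at time 0,
# bursts are in abstract time units, and switching itself costs nothing.

def fcfs(bursts: dict[str, int]) -> tuple[dict[str, int], int]:
    """First-come, first-served: each task runs to completion in order.
    Returns each task's completion time and the number of context switches."""
    time, finish = 0, {}
    for name, burst in bursts.items():
        time += burst
        finish[name] = time
    return finish, max(len(bursts) - 1, 0)

def round_robin(bursts: dict[str, int], quantum: int) -> tuple[dict[str, int], int]:
    """Round-robin: each task runs for at most `quantum` units, then goes
    to the back of the queue if it still has work left."""
    time, finish, switches = 0, {}, 0
    queue = deque(bursts.items())
    last = None
    while queue:
        name, remaining = queue.popleft()
        if last is not None and last != name:
            switches += 1  # the CPU moves to a different task
        run = min(quantum, remaining)
        time += run
        remaining -= run
        last = name
        if remaining > 0:
            queue.append((name, remaining))
        else:
            finish[name] = time
    return finish, switches

def avg_turnaround(finish: dict[str, int]) -> float:
    # With all arrivals at time 0, turnaround time equals completion time.
    return sum(finish.values()) / len(finish)

if __name__ == "__main__":
    tasks = {"A": 5, "B": 2, "C": 1}
    for label, (finish, switches) in [
        ("FCFS", fcfs(tasks)),
        ("Round-robin (q=2)", round_robin(tasks, quantum=2)),
    ]:
        print(f"{label}: avg turnaround={avg_turnaround(finish):.2f}, switches={switches}")
    # FCFS: avg turnaround=6.67, switches=2
    # Round-robin (q=2): avg turnaround=5.67, switches=3
```

Adding a per-switch cost to `time` is a natural next step, and it is exactly the kind of knob an interactive version lets students turn to watch the overhead eat into round-robin’s advantage.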

Earlier this year, my friend Punya Mishra shared a Unit Circle Demo that he mocked up with GPT-o1 to show the connection between sines, cosines, and the unit circle. In a post a month later, he mused about how even incorrect simulations can be used to push students to be more curious and to develop healthy skepticism. Just yesterday, Lance Cummings wrote about the Writing Gym Tracker app that he built to help his students build their writing practices. In the before times, you’d have to live with the limitations or bugs in another developer’s app, bugging them to fix or add the features you want. Now you can just build and deploy your own with a few prompts.
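The relationship that a unit circle demo visualizes fits in a few lines. In this hypothetical Python sketch (not Punya’s demo), the point at angle θ on the unit circle has coordinates (cos θ, sin θ), so x² + y² stays equal to 1, up to floating-point rounding, for every angle.

```python
import math

def unit_circle_point(theta: float) -> tuple[float, float]:
    """Point on the unit circle at angle theta (in radians): (cos θ, sin θ)."""
    return math.cos(theta), math.sin(theta)

if __name__ == "__main__":
    for degrees in (0, 30, 45, 90, 180, 270):
        x, y = unit_circle_point(math.radians(degrees))
        # x is the horizontal coordinate (cosine), y the vertical one (sine).
        print(f"{degrees:>3} deg  cos={x:+.3f}  sin={y:+.3f}  x^2+y^2={x * x + y * y:.3f}")
```

Printing the table is a small stand-in for the interactive version: a student can change the list of angles and check the pattern for themselves.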

This by OpenAI is a great read:

Even among top models, performance varies significantly by task.

Ethan Mollick raises a very true point this week:

Anyone who wants to use AI seriously for real work will need to assess it themselves.

I strongly agree with this. The author is Senén Barro, who teaches at the University of Santiago de Compostela (Spain):

I’ve mentioned before that since ChatGPT came out, my students barely use office hours anymore. That said, I hope that with these new tools designed specifically for them, they won’t stop coming to class. Either way, if they do stop coming, it’ll be more my fault than AI’s. These tools, at least for now, aren’t free from hallucinations, incorrect or poorly structured explanations, and while they’ve read everything, they haven’t experienced anything—which means they’re unaware of many aspects of the real world that go far beyond the world described in texts—what we might call the written world.

As I’ve mentioned before here and here:

Either I, as a professor, know how to offer more than these tools do, or my students are honestly wasting their time with me.

My co-advisor Michael wrote in the NYT about the shift from coding to supervising AI-generated code:

The essential skill is no longer simply writing programs but learning to read, understand, critique and improve them instead. The future of computer science education is to teach students how to master the indispensable skill of supervision.

Most education doesn’t yet emphasize the skills critical for programming supervision — which hinges on understanding the strengths and limitations of A.I. tools.

Changing what we teach means rethinking how we teach it. Educators are experimenting with ways to help students use A.I. as a learning partner rather than a shortcut.

The rise of generative A.I. should sharpen, not distract, our focus on what truly matters in computer science education: helping students develop the habits of mind that let them question, reason and apply judgment in a rapidly evolving field.

James Prather is presenting our work today in Koli! You can also find our paper in the ACM proceedings.

Excited to hear Binoy Ravindran from Virginia Tech at next Monday’s CS graduate seminar. Binoy leads the Systems Software Research Group at VT. If you’re in Houston, it would be great if you could join us. Here is the link to the event if you’re interested in coming.

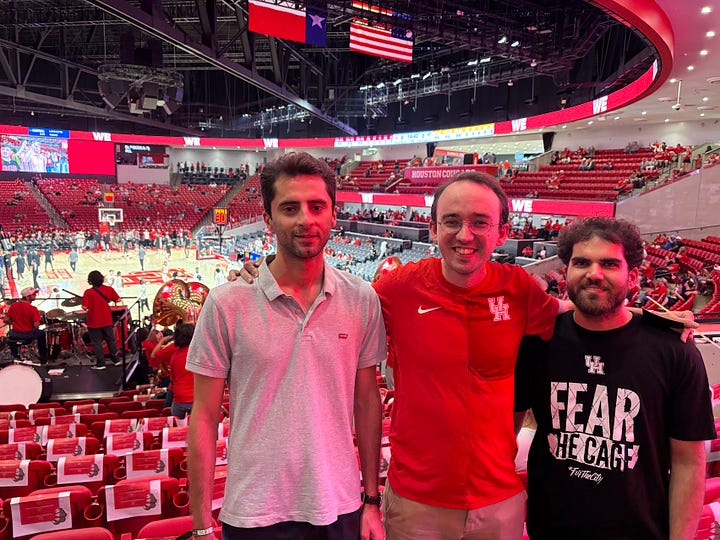

First NCAAB game in the US and it was AMAZING! Houston crushed Towson 65-48 with Kingston Flemings dropping 20 points for the MVP. That stadium energy was UNREAL. But the real MVP? Persian food before the game with the best company (protip: always go with real Iranians). Go Coogs!

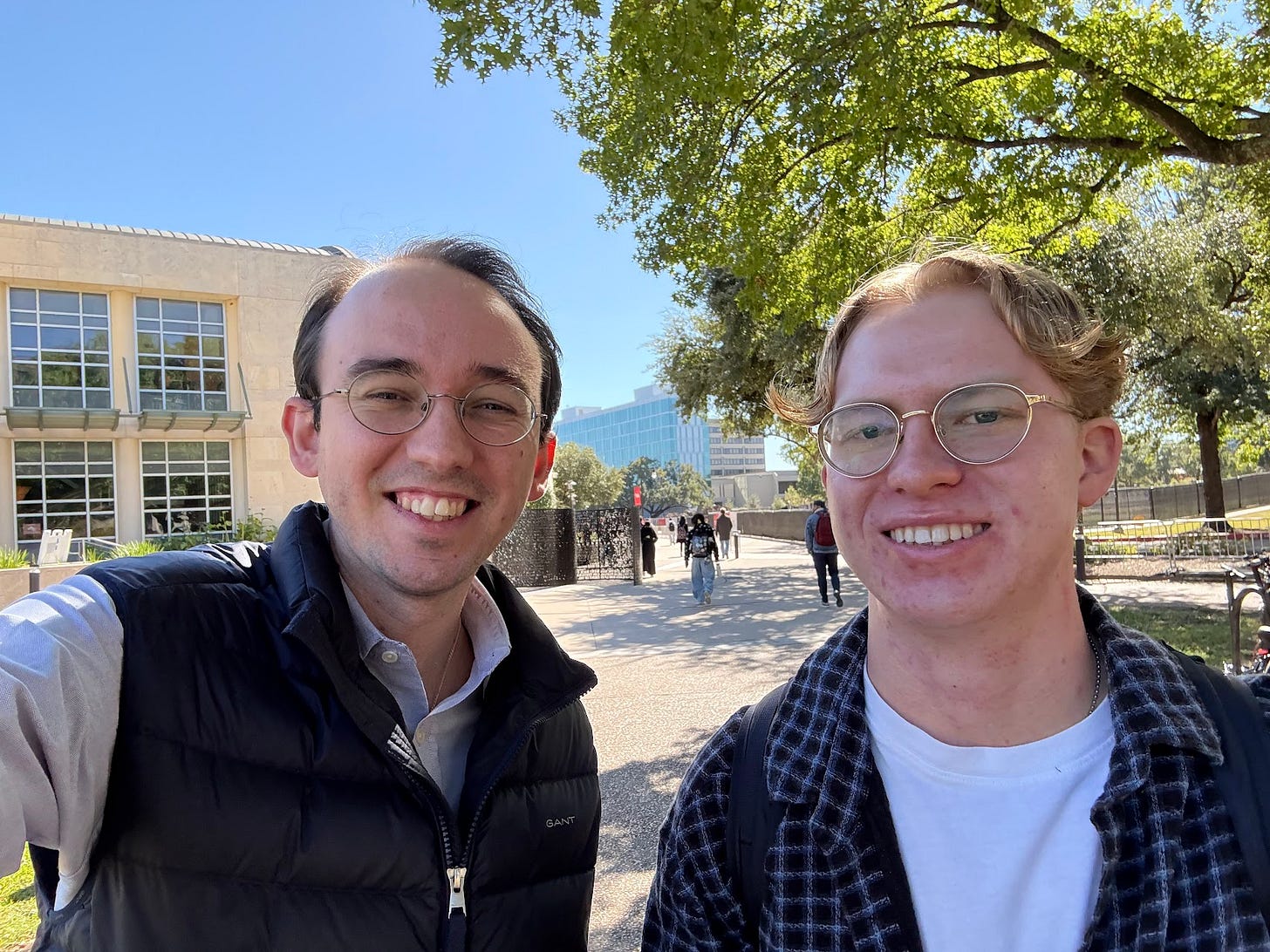

Nothing like a coffee and good conversation with Luke on campus. Friendships that started at the Newman and continue to grow.

Personal achievement: I passed my US driving test yesterday! I’m so happy about it.

This week I’ve been listening on repeat to Viva Suecia’s wonderful new album.

The most extraordinary thing in the world is an ordinary man and an ordinary woman and their ordinary children.

― G.K. Chesterton

That’s all for this week. Thank you for your time. I value your feedback, as well as your suggestions for future editions. I look forward to hearing from you in the comments.